Digital Marketing: Full-Funnel Experimentation Culture

The Growth Engine: Building a Full-Funnel Experimentation Culture for Sustained Market Leadership

The New Competitive Imperative: Integrating Experimentation Across the Customer Journey

In today’s hyper-competitive digital landscape, the traditional paradigms of marketing are no longer sufficient for sustainable growth. Market leaders are distinguished not by the size of their budgets, but by the speed of their learning. This report outlines a strategic framework for building a durable competitive advantage by fusing two powerful concepts: a full-funnel marketing strategy and a deeply embedded culture of experimentation. This synthesis represents a fundamental shift in how businesses operate, transforming marketing from a siloed, campaign-driven function into a core, cross-functional business process for systematically de-risking decisions and accelerating growth. The analysis will demonstrate that moving from intuition-based decision-making to a disciplined, data-driven approach across the entire customer journey is no longer a choice, but a strategic imperative for survival and market leadership.

Deconstructing the Full-Funnel Model: Beyond Awareness to Advocacy

A full-funnel marketing strategy is a holistic and comprehensive approach that engages potential customers at every stage of their journey with a brand. This model moves beyond a singular focus on conversion to encompass the entire customer lifecycle, from the initial moment of awareness through consideration, decision, and extending into post-purchase loyalty and advocacy. The “funnel” itself is a conceptual framework representing the path a customer might take, starting with a broad audience at the top and narrowing as they move closer to a purchase.

The primary strength of this model lies in its recognition that modern customer journeys are rarely linear. A potential customer can enter, exit, and re-enter the funnel at any stage, interacting with a brand across a multitude of channels and touchpoints. A full-funnel strategy ensures that a brand has a cohesive and consistent presence at each of these potential interactions, providing tailored content and messaging that addresses the specific needs, questions, and behaviors of the customer at that particular stage.

The business benefits of adopting this comprehensive view are substantial and interconnected:

- Increased Brand Awareness: By targeting the top of the funnel with content marketing, SEO, and social media, companies can reach a broader audience and build the brand recognition necessary for future engagement.

- Better Lead Nurturing: A full-funnel approach ensures continuous and relevant communication with prospects as they move through the consideration phase. Techniques like email marketing, retargeting, and in-depth content (e.g., whitepapers, webinars) build trust and keep the brand top-of-mind.

- Higher Conversion Rates: By systematically addressing customer needs and objections at each stage, this strategy minimizes friction in the buying process, effectively guiding prospects toward a purchase decision and increasing the likelihood of conversion.

- Improved Customer Insights: Analyzing performance and customer behavior at each stage of the funnel provides invaluable data. These insights allow marketers to refine their strategies, tailor messaging more effectively, and gain a deeper understanding of their audience, creating a virtuous cycle of improvement.

- Enhanced Loyalty and Lifetime Value: The model extends beyond the initial purchase, focusing on retention and turning satisfied customers into loyal advocates. This long-term perspective is critical for sustainable growth and maximizing customer lifetime value.

By treating the customer journey as an integrated whole rather than a series of disconnected campaigns, a full-funnel strategy lays the groundwork for more efficient and effective marketing that drives not just immediate sales, but also long-term brand equity and customer loyalty.

From Guesswork to Growth: The Principles of Data-Driven Marketing Experimentation

Marketing experimentation is the systematic application of the scientific method to marketing strategies, designed to move decision-making from a foundation of intuition and guesswork to one of empirical evidence. It is a disciplined process of forming data-led ideas and testing them to measure their effect on key business variables, such as user acquisition, conversion rates, or customer retention. This approach allows organizations to understand what resonates with their audience, validate their efforts with clarity on incremental impact, and ultimately optimize the allocation of resources to maximize return on investment (ROI).

The core methodology of marketing experimentation follows a structured, repeatable process:

- Define a Goal: The process begins with a clear objective, such as increasing sign-ups on a landing page or improving the open rate of an email campaign.

- Formulate a Hypothesis: Based on data, observation, or prior learnings, a testable hypothesis is created. A strong hypothesis follows a clear structure, such as: “If we change [Independent Variable], then [Dependent Variable] will change, because [Rationale]”. This structure ensures the experiment is designed to answer a specific question.

- Create Variations: At least two versions of an asset are created: the “control” (the original) and the “variation” (the version with the change being tested). This is the foundation of A/B testing, the most common form of marketing experimentation.

- Run the Experiment: The variations are shown to different segments of the audience simultaneously and under controlled conditions to isolate the impact of the tested variable.

- Analyze the Results: Once the experiment has run for a sufficient period to achieve statistical significance, the data is analyzed to determine which version performed better against the predefined goal.
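As a rough illustration of the analysis step, the sketch below applies a two-sided, two-proportion z-test to the conversion counts of a control and a variation. The function name, the pooled-variance form of the test, and the visitor and conversion counts are all assumptions made for illustration; a real program may rely on its testing platform's statistics or on a different method (for example, Bayesian or sequential analysis).

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    conv_a / n_a: conversions and visitors in the control.
    conv_b / n_b: conversions and visitors in the variation.
    Returns (absolute lift, z statistic, p-value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, z, p_value


# Hypothetical counts, invented purely for illustration.
lift, z, p_value = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"absolute lift={lift:.4f}, z={z:.2f}, p={p_value:.4f}")
```

A p-value below the threshold chosen before the test started (0.05 is a common convention) would suggest the difference is unlikely to be due to chance alone.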

A critical principle that distinguishes a mature experimentation practice is the understanding that its primary value lies in learning, not just “winning.” Many marketers historically focused solely on achieving a positive result, a mindset that can be counterproductive. Even industry leaders in experimentation, such as Netflix and Microsoft, report that only 10% to 33% of their experiments produce a winning outcome. This statistic highlights a profound truth: the real ROI of experimentation comes from the knowledge generated by every test, including those that “fail.” A failed experiment shows what does not work, which keeps the organization from investing significant resources in a flawed strategy and helps refine future hypotheses. By embracing this learning mindset, organizations move beyond simple conversion rate optimization (CRO) and build a robust, iterative engine for sustained growth.

Defining the Culture: Shifting from “What We Do” to “Who We Are”

A full-funnel experimentation culture is the organizational environment where the principles of data-driven testing are systematically and holistically applied across every stage of the customer journey. It represents a fundamental shift in corporate mindset, moving experimentation from a sporadic tactic performed by a specialized team to a core philosophy that defines how the entire organization approaches problems, makes decisions, and drives growth. This culture is not merely about running A/B tests; it is about fostering an ecosystem of curiosity, intellectual honesty, and continuous improvement.

This cultural transformation is characterized by several key attributes:

- Data Over Opinions: In an effective test-and-learn culture, decisions are guided by empirical evidence rather than seniority or gut instinct. The “Highest Paid Person’s Opinion” (HiPPO) is replaced by validated data, creating a more meritocratic and effective decision-making process.

- Empowerment and Accessibility: The ability to conduct an experiment is democratized. Any employee with a clearly constructed hypothesis is empowered with the tools and autonomy to test their ideas, minimizing bureaucracy and accelerating the pace of learning.

- Strategic Integration: Experiments are not random or siloed tactical activities. Instead, every test is aligned with and ladders up to overarching business goals and strategic objectives, ensuring that learning is purposeful and contributes directly to the company’s “north star metric”.

- Psychological Safety: The culture actively encourages calculated risk-taking by treating failures as valuable learning opportunities rather than punishable mistakes. This psychological safety is essential for motivating teams to test bold, innovative ideas instead of sticking to safe, incremental changes.

Ultimately, this culture redefines the role of marketing. It is no longer just about executing campaigns and reporting on surface-level metrics. Instead, it becomes a scientific and strategic function responsible for generating validated customer insights that inform not only marketing tactics but also product development, sales strategy, and overall business direction. When experimentation is embedded across the full funnel, it becomes the engine of the business, systematically identifying and eliminating friction in the customer experience and uncovering new pathways for growth.

The Strategic Dividend: Quantifying the Business Case for an Experimentation Culture

Adopting a full-funnel experimentation culture delivers a significant strategic dividend that extends far beyond incremental lifts in marketing campaign metrics. It fundamentally alters an organization’s capacity to innovate, mitigate risk, and achieve sustainable growth, directly connecting marketing efforts to bottom-line business outcomes. The business case is built on four pillars of tangible value.

First, it serves as a powerful risk mitigation framework. In a traditional model, launching a new feature, campaign, or product is often a high-stakes gamble based on assumptions. Experimentation transforms this gamble into a calculated, evidence-based process. By testing ideas on a smaller scale before a full rollout, companies can identify and address potential issues early, preventing costly mistakes and mitigating the risk of launching poorly received initiatives that could harm user experience, lower conversion rates, or even reduce revenue. The cost of inaction (failing to experiment) becomes a significant liability, risking market share and relevance.

Second, this culture acts as an innovation engine. It creates a safe environment for teams to challenge assumptions, explore novel ideas, and iterate rapidly. The quick feedback loops provided by experiments allow for rapid learning, helping teams to “fail fast” and pivot resources toward more promising avenues. This agility is a critical competitive advantage, enabling businesses to respond more effectively to changing customer needs and navigate market uncertainty.

Third, it drives superior resource optimization and ROI. By systematically identifying which strategies, channels, and messages are most effective, experimentation allows businesses to move beyond guesswork and allocate their time, budget, and personnel with precision. This data-driven approach ensures that resources are focused on activities with the highest proven impact, directly improving key financial metrics such as Return on Ad Spend (ROAS), Customer Acquisition Cost (CAC), and overall profitability.

Finally, the cumulative effect of these benefits creates a durable competitive advantage. Companies that embed experimentation into their DNA can learn and adapt faster than their competitors. While many organizations focus on optimizing existing processes for marginal gains, a mature experimentation culture also unlocks the potential for transformative discovery. It encourages teams to make “big bets” and test foundational business assumptions—for instance, by questioning the true value of a major marketing channel. Such bold experiments, while risky, can lead to step-change improvements in strategy and performance, as demonstrated by eBay’s discovery that much of its spending on certain Google ads was ineffective. This ability to not only refine tactics but also validate or invalidate entire strategies provides a level of strategic clarity that is exceptionally difficult for competitors to replicate.

Architecting a Culture of Continuous Learning

Building a full-funnel experimentation culture is not an accident; it is an act of deliberate organizational design. It requires architecting an ecosystem where continuous learning is not just encouraged but systematically enabled through a combination of leadership, strategy, people, processes, and technology. This section provides a blueprint for constructing this culture, detailing the foundational pillars, the critical role of leadership in fostering psychological safety, the optimal structures for agile growth teams, and the operational workflows that turn experimentation into a scalable, repeatable, and high-impact business function.

The Foundational Pillars of a High-Impact Experimentation Program

A successful and scalable experimentation program is built upon five interconnected pillars. Neglecting any one of these can undermine the entire structure, leading to unreliable results, wasted resources, and a failure to achieve cultural adoption. These pillars provide a comprehensive framework for assessing an organization’s current maturity and for building a robust program capable of driving meaningful business impact.

- Leadership & Strategic Alignment: This is the most critical pillar. It begins with explicit, enthusiastic buy-in from the C-suite. Leadership must not only approve of experimentation but actively champion it as a core business strategy. This involves aligning the program’s goals directly with overarching business objectives (e.g., revenue growth, market share, customer LTV) and creating an environment of psychological safety where teams are empowered to take calculated risks and learn from failures without fear of blame.

- People & Skills (Human Resources): An experimentation program is only as good as the people who run it. Building this capability requires assembling dedicated, cross-functional teams that bring together a diverse set of skills. A typical high-impact team includes an experimentation lead or product manager to drive strategy, data analysts or scientists for rigorous analysis, software engineers for implementation, and marketers or user researchers to provide customer context and generate hypotheses.

- Process & Governance: To move from ad-hoc testing to a scalable program, standardized and transparent processes are essential. This includes establishing a clear workflow for the entire experiment lifecycle: ideation from various data sources, a structured hypothesis-framing protocol, a prioritization framework (like the ICE model) to build a strategic roadmap, and rigorous execution and analysis standards. A crucial part of governance is the creation and maintenance of a centralized knowledge management system to document and share all learnings.

- Technology & Tools: The right technology stack is the engine that powers experimentation at scale. This includes tools for visitor behavior analysis (heatmaps, session recordings), A/B testing platforms (client-side and server-side), data collection and integration tools, and platforms for project management and knowledge sharing. The choice of technology should align with the organization’s maturity and strategic goals.

- Data & Measurement: The heartbeat of experimentation is trustworthy data. This pillar involves ensuring that data collection is accurate, comprehensive, and accessible. It requires a commitment to clean analytics, clear definitions for key metrics, and the statistical rigor to ensure that experiment results are valid and reliable. Without trust in the underlying data, the entire program loses credibility and stakeholder buy-in will erode.

Leadership as the Catalyst: Modeling Behavior and Fostering Psychological Safety

The transition to an experimentation-led organization is fundamentally a cultural one, and as with any cultural shift, it flows from the top down. Leadership’s role is not merely to approve a budget for testing tools but to act as the primary catalyst, actively modeling the desired behaviors and creating an environment where a test-and-learn mindset can flourish. Without unwavering and visible executive sponsorship, even the most well-designed program is likely to falter.

The first responsibility of leadership is to champion a data-over-opinions approach. This involves a conscious shift in their own decision-making process. When presented with a new idea, the default response from a leader in this culture is not “I think we should…” but rather “That’s an interesting hypothesis. How can we test it?”. By demonstrating this curiosity and vulnerability, leaders grant permission for their teams to do the same, effectively dismantling the hierarchical decision-making that stifles innovation. Leaders like Satya Nadella at Microsoft have demonstrated the power of this approach, fostering a growth mindset that empowers teams to question assumptions.

Equally critical is the creation of psychological safety, which is the bedrock of risk-taking and innovation. Experimentation, by its nature, involves uncertainty and frequent “failure.” If the organizational culture punishes failed experiments, teams will naturally gravitate toward safe, incremental tests with minimal learning potential, avoiding the bold ideas that could lead to breakthroughs. Leaders must therefore redefine what constitutes success. They can achieve this by publicly celebrating the learnings from all well-designed experiments, regardless of whether the outcome was positive or negative. This means rewarding intellectual honesty, rigorous methodology, and valuable insights over simply “winning” tests. When leaders hold regular reviews to analyze both successful and failed experiments, they reinforce the message that the primary goal is learning, not just being right.

Organizational Design for Agility: Structuring Cross-Functional Growth Teams

The structure of the team responsible for experimentation has a profound impact on the program’s velocity, quality, and strategic influence. There is no single “correct” model; the optimal design depends on the organization’s size, goals, and, most importantly, its experimentation maturity. The most successful companies often evolve their structure over time, progressing through different models as their capabilities grow.

Three primary organizational models are commonly observed:

Centralized Model

In this structure, a single, dedicated team of specialists (e.g., analysts, developers, CRO leads) is responsible for running experiments across the entire organization. This team acts as an internal agency, taking requests from various departments.

- Advantages: Ensures high standards of methodological rigor, consistency, and clear accountability. It is an effective model for organizations in the early stages (“Crawl” or “Walk”) of building their experimentation capability, as it concentrates expertise and establishes best practices.

- Disadvantages: Can become a bottleneck as demand for tests outstrips the team’s capacity. The central team may also lack the deep domain context of individual product or marketing teams.

Decentralized (or Embedded) Model

Here, experimentation specialists are embedded directly within different business units, product squads, or marketing teams. Each unit has the autonomy to run its own tests.

- Advantages: Fosters high velocity and deep domain expertise, as the people running the tests are closest to the problems. It aligns well with agile methodologies.

- Disadvantages: Can lead to inconsistent quality, duplicated efforts, and siloed learnings if there is no central governance. It requires a high level of data literacy across the organization.

Center of Excellence (CoE) or Hybrid Model

This model combines the strengths of the other two and represents the most mature and scalable structure. A small central team (the CoE) is responsible for strategy, governance, training, and providing the tools and platforms for experimentation. The actual execution of tests is carried out by empowered, decentralized teams.

- Advantages: Balances autonomy with quality control. It enables scale by democratizing experimentation while maintaining high standards through the CoE’s guidance. This structure fosters a true culture of experimentation by making it everyone’s responsibility, supported by expert resources.

Regardless of the model, the core of a successful growth team is its cross-functional nature, bringing together key roles such as an Experimentation Lead (strategy and roadmap), Data Scientist/Analyst (statistical rigor), Software Developer (implementation), Product Manager (product alignment), Marketing Manager (customer journey context), and User Researcher (qualitative insights). This blend of skills ensures that experiments are not only technically sound but also strategically relevant and deeply informed by customer understanding.

The strategic path for an organization is often to begin with a centralized model to build a foundation of expertise and credibility, and then intentionally evolve toward a CoE model as the culture and skills mature across the company.

Establishing the Operating System: A Step-by-Step Process for Scalable Experimentation

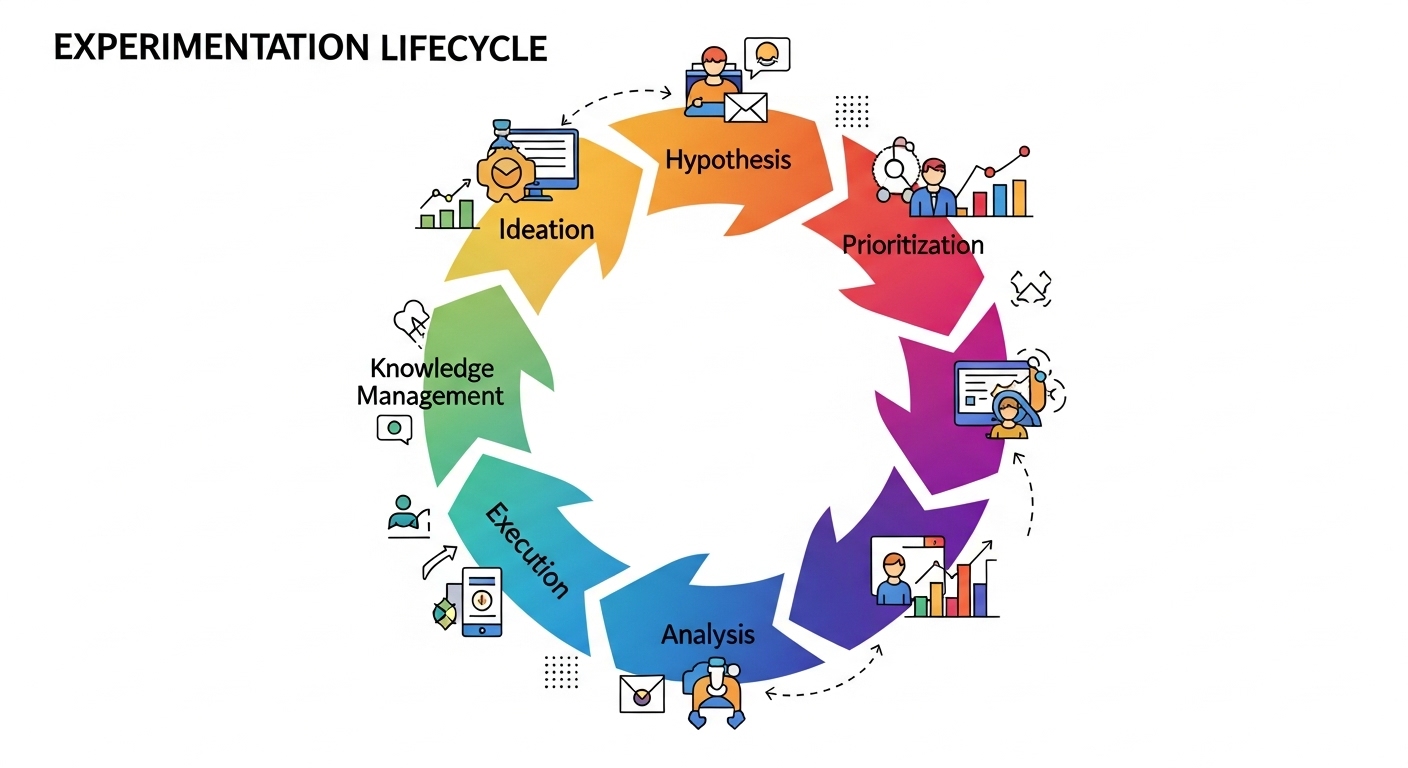

To transform experimentation from a series of random acts into a systematic engine for growth, a clear and repeatable operating system is required. This workflow provides the structure that enables teams to move from idea to insight efficiently and effectively, ensuring that each experiment contributes to a growing body of institutional knowledge.

This process can be broken down into six key stages.

1. Ideation

The process begins with generating high-quality ideas for experiments. This should not be an unstructured brainstorm but a data-informed process. Ideas should be sourced from a variety of quantitative and qualitative inputs, including: funnel data analysis to identify drop-off points, customer feedback from surveys and support tickets, user research and session recordings, competitive analysis, and observations from cross-functional team members. All ideas should be captured in a centralized backlog accessible to the entire organization.

2. Hypothesis Formulation

A vague idea must be translated into a rigorous, testable hypothesis. A strong hypothesis is not a guess; it is a clear statement that articulates a proposed change, a predicted outcome, and the underlying rationale. The “If _____, Then _____, Because _____” format is a highly effective framework. For example: “If we add trust badges to the checkout page, then the conversion rate will increase, because it will reduce user anxiety about payment security”. This structure forces clarity and ensures the experiment is designed to produce a specific learning.

3. Prioritization

Not all ideas are created equal, and resources are always limited. A prioritization framework is essential for building a strategic experimentation roadmap that focuses on the highest-impact opportunities. A widely used model is ICE, which scores each idea based on three criteria:

- Impact: How much will this experiment move the target metric if it is successful?

- Confidence: How confident are we that this experiment will produce the expected impact, based on prior data or research?

- Effort: How much time and resources (e.g., engineering, design) will it take to launch this experiment?

By scoring and ranking ideas, teams can ensure they are working on the tests most likely to drive meaningful results.
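The scoring and ranking step can be sketched in a few lines of Python. The 1-to-10 scales, the formula (impact times confidence, divided by effort), and the backlog entries are assumptions for illustration only; some teams score “Ease” instead of “Effort” and multiply or average the three values, so the formula should be adapted to whatever convention the organization adopts.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int      # 1-10: expected movement in the target metric
    confidence: int  # 1-10: strength of supporting data or research
    effort: int      # 1-10: higher means more time and resources

    @property
    def ice_score(self) -> float:
        # Assumed convention: reward impact and confidence, penalize effort.
        return self.impact * self.confidence / self.effort


# Hypothetical backlog entries, invented for illustration.
backlog = [
    Idea("Trust badges on checkout", impact=6, confidence=7, effort=2),
    Idea("Redesign pricing page", impact=9, confidence=5, effort=8),
    Idea("Shorter lead form", impact=5, confidence=8, effort=3),
]

ranked = sorted(backlog, key=lambda idea: idea.ice_score, reverse=True)
for idea in ranked:
    print(f"{idea.ice_score:6.2f}  {idea.name}")
```

In this made-up backlog the low-effort checkout change ranks above the higher-impact but expensive redesign, which is the kind of trade-off the framework is meant to surface.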

4. Execution

This stage involves designing and launching the experiment. Key considerations include defining the target audience, calculating the necessary sample size to achieve statistical significance, determining the test duration, and implementing the variations with technical precision. Rigor at this stage is critical to ensure the results are trustworthy.
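To make the sample-size and duration considerations concrete, here is a minimal sketch that uses the standard normal-approximation formula for comparing two proportions. The function names, the default significance level (0.05) and power (0.8), and the traffic figures are assumptions for illustration; a testing platform's own calculator may use a different method and give somewhat different numbers.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant for a two-sided test.

    baseline_rate: current conversion rate (e.g. 0.04 for 4%).
    relative_lift: smallest relative improvement worth detecting (e.g. 0.25).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def estimated_duration_days(n_per_variant, daily_visitors, variants=2):
    """Days needed if all daily traffic is split evenly across the variants."""
    return ceil(n_per_variant * variants / daily_visitors)


# Hypothetical inputs: 4% baseline, 25% relative lift, 2,000 visitors per day.
n = sample_size_per_variant(baseline_rate=0.04, relative_lift=0.25)
days = estimated_duration_days(n, daily_visitors=2000)
print(f"{n} visitors per variant, roughly {days} days")
```

Smaller detectable lifts require sharply larger samples, so the minimum effect worth detecting is best agreed on before launch. Many teams also round the duration up to whole weeks so that weekday and weekend behavior are both represented.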

5. Analysis and Learning

Once the experiment concludes, the results are analyzed to determine if the hypothesis was validated or invalidated. This goes beyond simply identifying a “winner.” The most important part of this stage is to understand the why behind the results and to document the learnings. Funnel metrics can be powerful diagnostic tools here, helping to explain how user behavior changed to produce the outcome.

6. Knowledge Management

This final stage is arguably the most critical for scaling the culture. Every learning, whether from a success, failure, or inconclusive test, must be documented in a centralized, easily searchable repository. This knowledge base becomes the organization’s long-term memory, preventing teams from re-running failed tests and allowing new experiments to build upon the insights of previous ones. Without this systematic documentation, learnings remain siloed, and the value of each experiment is lost. As one expert notes, “If you fail to document your findings, it is as if the experiment never took place”. This repository is the mechanism that compounds the organization’s intellectual capital over time, accelerating its overall learning velocity and forming the connective tissue of a true experimentation culture.
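One lightweight way to picture such a repository is a list of structured records that can be searched by keyword. The sketch below is hypothetical: the field names, the `search` helper, and the sample entry (including its outcome and learning) are invented for illustration, and a real program would more likely use a wiki, a database, or a dedicated experimentation platform.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExperimentRecord:
    name: str
    change: str             # the "If" part of the hypothesis
    predicted_outcome: str  # the "Then" part
    rationale: str          # the "Because" part
    outcome: str            # "win", "loss", or "inconclusive"
    learning: str
    tags: List[str] = field(default_factory=list)

    @property
    def hypothesis(self) -> str:
        return f"If {self.change}, then {self.predicted_outcome}, because {self.rationale}."


def search(repository, keyword):
    """Return records whose name, learning, or tags mention the keyword."""
    keyword = keyword.lower()
    return [
        record for record in repository
        if keyword in record.name.lower()
        or keyword in record.learning.lower()
        or any(keyword in tag.lower() for tag in record.tags)
    ]


# Hypothetical entry; the outcome and learning are made up for illustration.
repository = [
    ExperimentRecord(
        name="Checkout trust badges",
        change="we add trust badges to the checkout page",
        predicted_outcome="the conversion rate will increase",
        rationale="it will reduce user anxiety about payment security",
        outcome="inconclusive",
        learning="No measurable change; payment anxiety may not be the main blocker.",
        tags=["checkout", "bofu"],
    )
]

for record in search(repository, "checkout"):
    print(record.outcome, "|", record.hypothesis)
```

Recording inconclusive and losing tests in the same structure as wins is what lets later teams find them before re-running the same idea.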

A Practitioner’s Playbook for Full-Funnel Experimentation

Applying experimentation across the entire customer journey requires a tailored approach at each stage. The objectives, metrics, and types of tests that are relevant at the top of the funnel are fundamentally different from those at the bottom. This section provides a practical playbook for marketers and growth leaders, detailing how to design and measure experiments at each stage of the digital marketing funnel: Awareness, Consideration, Conversion, and the crucial post-purchase phases of Loyalty and Advocacy. It includes a comprehensive matrix that aligns objectives with key performance indicators (KPIs) and concrete testing examples, transforming the abstract concept of full-funnel experimentation into an actionable framework.

Measuring What Matters: Aligning KPIs with Each Funnel Stage

Effective full-funnel experimentation begins with a clear measurement strategy that distinguishes between high-level business KPIs and stage-specific funnel metrics. While overarching business goals like increasing Customer Lifetime Value or overall Return on Investment (ROI) are the ultimate measures of success, they are lagging indicators. Funnel metrics, on the other hand, are leading indicators that act as powerful diagnostic tools, providing real-time feedback on the health of each stage and helping to explain why the top-level KPIs are moving.

For example, if the primary success metric for an experiment is revenue, a simple analysis might show that it remained flat. However, a deeper look at funnel metrics might reveal that while the experiment increased top-of-funnel traffic, it simultaneously decreased the conversion rate at the checkout stage. This level of granularity is essential for generating actionable insights. Without it, the team would not know which part of the user journey to focus on for the next iteration. Therefore, a successful measurement framework maps specific, quantifiable metrics to each funnel stage, allowing teams to monitor performance, identify bottlenecks, and design targeted experiments to address them.
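The flat-revenue scenario above can be reproduced with a small sketch that computes step-by-step conversion rates for each arm of an experiment. The stage names and counts are invented for illustration, and the helper assumes the stages are supplied in funnel order.

```python
def step_conversion_rates(stage_counts):
    """Conversion rate from each funnel stage to the next.

    stage_counts: dict of stage name -> user count, in funnel order.
    """
    stages = list(stage_counts.items())
    return {
        f"{prev}->{curr}": curr_n / prev_n
        for (prev, prev_n), (curr, curr_n) in zip(stages, stages[1:])
    }


# Hypothetical counts: the variation attracts more sessions but ends
# with the same number of purchases as the control.
control = {"session": 10000, "cart": 1000, "purchase": 300}
variation = {"session": 12000, "cart": 1200, "purchase": 300}

control_rates = step_conversion_rates(control)
variation_rates = step_conversion_rates(variation)

for step in control_rates:
    print(f"{step:16s} control={control_rates[step]:.2%}  variation={variation_rates[step]:.2%}")
```

With these made-up numbers the top-line purchase count is identical, yet the cart-to-purchase rate falls from 30% to 25% in the variation, which points the next iteration at the checkout stage rather than at traffic acquisition.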

Top-of-Funnel (Awareness): Experiments in Brand Reach and Audience Discovery

The primary objective at the top of the funnel (TOFU) is to attract a broad, relevant audience and build brand awareness and recognition. At this stage, potential customers are often just beginning to identify a problem or need and are not yet familiar with specific solutions. Marketing efforts are focused on capturing attention and generating initial interest.

Key Performance Indicators (KPIs):

The metrics at this stage measure reach and initial engagement. These include Impressions, Reach, Ad Frequency, Organic Traffic Sessions, SEO Keyword Rankings, Social Media Engagement (likes, shares, comments), Website Bounce Rate, and Time on Page.

Experiment Examples:

- Ad Creative and Messaging: A/B test different ad headlines, copy, and imagery to determine which combination drives the highest click-through rate (CTR) and lowest cost-per-click (CPC). For visual ads, this could involve testing static images versus GIFs or short videos. For messaging, experiments can compare different value propositions, such as focusing on product features versus customer benefits, to see what resonates most with a cold audience.

- Channel Effectiveness: For channels where direct A/B testing is difficult (e.g., television, radio, podcasts), quasi-experimental methods like geo-lift studies can be employed. This involves increasing or decreasing ad spend in specific geographic markets and measuring the corresponding lift in brand-related search queries or direct website traffic compared to control markets. This helps quantify the incremental impact of upper-funnel channels.

- Content Strategy: Test different content formats—such as blog posts, infographics, videos, or interactive quizzes—to identify which types generate the most organic traffic, social shares, and on-page engagement. This helps optimize content production resources toward the most effective formats for audience attraction.
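The geo-lift idea in the Channel Effectiveness example can be approximated with a simple difference-in-differences calculation: compare the change in the test markets with the change in the control markets over the same period. This is a deliberately simplified sketch with invented weekly branded-search counts; production geo-lift studies typically use more robust techniques (such as matched markets or synthetic controls) and report uncertainty around the estimate.

```python
from statistics import mean


def difference_in_differences(test_pre, test_post, control_pre, control_post):
    """Estimated incremental lift in the test markets.

    Each argument is a list of per-period metric values (for example,
    weekly branded searches) before or after the spend change.
    """
    test_change = mean(test_post) - mean(test_pre)
    control_change = mean(control_post) - mean(control_pre)
    # The control change stands in for what would have happened anyway.
    return test_change - control_change


# Hypothetical weekly branded-search counts, invented for illustration.
test_pre = [1000, 1040, 980, 1020]
test_post = [1150, 1190, 1170, 1210]
control_pre = [900, 920, 880, 900]
control_post = [930, 950, 940, 960]

incremental = difference_in_differences(test_pre, test_post, control_pre, control_post)
print(f"Estimated incremental weekly searches: {incremental}")
```

The estimate is only as good as the assumption that test and control markets would have moved in parallel without the spend change, so markets are usually chosen for similar historical trends.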

Mid-Funnel (Consideration): Testing for Engagement and Lead Nurturing

Once a potential customer is aware of the brand, they enter the middle of the funnel (MOFU), or the consideration stage. Here, they are actively researching and evaluating different solutions to their problem. The marketing objective shifts from broad reach to targeted engagement: nurturing leads, building trust, and clearly differentiating the brand’s offering from competitors.

Key Performance Indicators (KPIs):

Success in the consideration stage is measured by the ability to convert anonymous visitors into known leads and engage them effectively. Relevant KPIs include Lead Generation Rate, Lead Quality (often measured by a lead scoring system), Email Open and Click-Through Rates, Content Download Rate (for assets like whitepapers or ebooks), and Webinar Attendance Rate.

Experiment Examples:

- Lead Magnets: A/B test different types of gated content to determine which offer generates the highest volume and quality of leads. For example, a company could test a comprehensive whitepaper against a practical, free template or an exclusive webinar to see which is more compelling to their target audience.

- Landing Page Optimization: This is a classic area for A/B testing. Experiments can target numerous elements, including the headline, the length and fields of the submission form, the call-to-action (CTA) button copy (“Download Now” vs. “Get Your Free Guide”), and the use of social proof elements like customer testimonials, logos of well-known clients, or case studies.

- Email Nurturing Sequences: For leads captured via a lead magnet, experiment with different email nurturing campaigns. Tests can compare subject lines to improve open rates, the timing and frequency of emails, the type of content shared (e.g., case studies vs. product tutorials), and the overall tone to see what best moves leads toward a sales-qualified stage.

- Retargeting Campaigns: For users who have visited specific pages (like product or pricing pages) but have not converted, test different retargeting ad creatives and offers across display and social networks. An experiment could compare a generic brand message to an ad featuring a specific customer testimonial or a limited-time offer.

Bottom-of-Funnel (Conversion): Optimizing the Path to Purchase

At the bottom of the funnel (BOFU), the prospect is close to making a decision. They have evaluated their options and are now looking for the final push to choose a specific product or service. The marketing objective is to remove any remaining friction or hesitation and make the purchase process as seamless as possible, thereby maximizing the conversion rate.

Key Performance Indicators (KPIs):

Metrics at this stage are directly tied to revenue and efficiency. They include Conversion Rate, Customer Acquisition Cost (CAC), Average Order Value (AOV), Shopping Cart Abandonment Rate, and Return on Ad Spend (ROAS).
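To make these definitions concrete, the following is a minimal sketch of how the bottom-of-funnel KPIs might be computed from period totals. The `FunnelTotals` structure, its field names, and the sample figures are illustrative assumptions, not values from any real program; in particular, how revenue is attributed to ads and which costs count toward acquisition vary by organization.

```python
from dataclasses import dataclass


@dataclass
class FunnelTotals:
    """Hypothetical period totals; field names are illustrative assumptions."""
    sessions: int                 # visits to the site
    carts_created: int            # sessions that started a cart
    orders: int                   # completed purchases
    new_customers: int            # first-time buyers among those orders
    revenue: float                # total order revenue
    ad_attributed_revenue: float  # revenue credited to paid ads
    ad_spend: float               # paid media spend
    acquisition_spend: float      # all sales and marketing spend on acquisition


def bofu_kpis(t: FunnelTotals) -> dict:
    """Compute common bottom-of-funnel KPIs from period totals."""
    return {
        "conversion_rate": t.orders / t.sessions,
        "cart_abandonment_rate": 1 - t.orders / t.carts_created,
        "average_order_value": t.revenue / t.orders,
        "customer_acquisition_cost": t.acquisition_spend / t.new_customers,
        "return_on_ad_spend": t.ad_attributed_revenue / t.ad_spend,
    }


# Made-up figures, used only to show the call.
totals = FunnelTotals(
    sessions=10_000, carts_created=800, orders=200, new_customers=160,
    revenue=16_000.0, ad_attributed_revenue=12_000.0,
    ad_spend=4_000.0, acquisition_spend=8_000.0,
)
for name, value in bofu_kpis(totals).items():
    print(f"{name}: {value:.2f}")
```

Computing every KPI from one shared set of totals keeps the definitions consistent when results from different experiments are compared.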

Experiment Examples:

- Pricing and Offers: This is a high-impact area for experimentation. Companies can A/B test different price points, subscription tiers, discount strategies (e.g., a 20% discount vs. a fixed $10 off), and product bundling options to see what combination maximizes both conversion rate and revenue. A famous case study from Electronic Arts revealed that for pre-orders of SimCity 5, removing a discount offer actually increased sales by over 40%, challenging the assumption that discounts always drive conversions.

- Checkout Flow Optimization: To combat cart abandonment, run experiments on the checkout process itself. Test variations in the number of form fields, the inclusion of guest checkout options, the prominence of trust signals like security badges, and the timing of when shipping costs are displayed.

- Sales and Product Pages: On pages where the final decision is made, test elements like the CTA copy (“Buy Now” vs. “Add to Cart” vs. “Start Your Free Trial”), the quality and type of product images or videos, and the placement and format of customer reviews or social proof.

- Urgency and Scarcity: Experiment with psychological triggers to encourage immediate action. Booking.com is a master of this, famously testing elements like “Only 2 rooms left!” or showing how many other people are currently viewing a property. Their test of displaying “Sold out” properties reportedly increased overall bookings by creating a stronger sense of legitimate scarcity.
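Whichever of the experiments above is run, the readout usually comes down to comparing two conversion rates. One common way to do this is a two-proportion z-test, sketched below. It assumes independent visitors, a sample size fixed before the test started, and samples large enough for the normal approximation; the function name and the visitor counts are hypothetical. Commercial testing platforms may use different statistical methods.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (absolute lift, z statistic, two-sided p-value) for B vs. A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


# Hypothetical checkout test: control (A) vs. guest checkout (B).
lift, z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=250, n_b=10_000)
print(f"absolute lift={lift:.4f}, z={z:.2f}, p={p:.4f}")
```

A small p-value suggests that the difference is unlikely to be noise, but it says nothing about whether the lift is large enough to matter commercially. That judgment still belongs to the team.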

Post-Funnel (Loyalty & Advocacy): Experiments in Retention, Referral, and Community Building

The customer journey does not end at the purchase. The post-funnel stages are critical for long-term, sustainable growth. The objectives here are to retain existing customers, increase their lifetime value, and transform them into passionate advocates who actively promote the brand.

Key Performance Indicators (KPIs):

Success in these stages is measured by long-term value and advocacy. Key metrics include Customer Retention Rate, Churn Rate, Customer Lifetime Value, Repeat Purchase Rate, Net Promoter Score (NPS), Referral Rate, and the volume of User-Generated Content (UGC).
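As a rough illustration, several of these metrics can be computed as follows. The retention and NPS calculations follow their usual definitions (promoters score 9 or 10, detractors score 0 to 6). The lifetime-value function is a deliberately simplified model that assumes constant churn and constant order behavior. All function names and sample figures are hypothetical.

```python
def retention_rate(customers_start: int, customers_end: int, new_customers: int) -> float:
    """Share of the starting customers still active at the end of the period."""
    return (customers_end - new_customers) / customers_start


def churn_rate(customers_start: int, customers_end: int, new_customers: int) -> float:
    """Share of the starting customers lost during the period."""
    return 1 - retention_rate(customers_start, customers_end, new_customers)


def net_promoter_score(scores: list) -> float:
    """NPS = % promoters (9-10) minus % detractors (0-6), on a -100..100 scale."""
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def simple_clv(avg_order_value: float, orders_per_period: float,
               churn_per_period: float, gross_margin: float = 1.0) -> float:
    """Simplified lifetime value: margin per period times expected lifetime (1 / churn)."""
    return avg_order_value * orders_per_period * gross_margin / churn_per_period


# Made-up figures, used only to show the calls.
print(f"retention: {retention_rate(1_000, 1_050, 150):.1%}")
print(f"NPS: {net_promoter_score([10, 10, 9, 8, 0]):.0f}")
print(f"CLV: {simple_clv(80.0, 0.5, 0.1, gross_margin=0.6):.2f}")
```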

Experiment Examples:

- Customer Onboarding: For SaaS products or services with a learning curve, A/B test the customer onboarding experience. This can include testing different automated email sequences, in-app product tours, or proactive customer support check-ins to see which approach leads to higher user activation and lower churn in the first 30 days.

- Loyalty and Rewards Programs: Experiment with the structure of loyalty programs. For example, test a points-based system against a tiered VIP program, or experiment with different types of rewards (e.g., discounts vs. exclusive access to products). Incorporating gamification elements like badges, leaderboards, and progress bars can also be tested to see if they increase engagement and repeat purchases.

- Referral Programs: A/B testing is crucial for optimizing referral programs. Test the incentive structure for both the referrer and the referred friend (e.g., “Give $10, Get $10” vs. “Give 20%, Get 20%”). Also, test the messaging, the ease of sharing, and the placement of the referral CTA within the product or website to maximize the program’s viral coefficient.

- User-Generated Content (UGC) and Advocacy Campaigns: To turn customers into advocates, experiment with different campaigns to encourage UGC. This could involve testing different types of contests (e.g., photo contest vs. story contest), branded hashtags, and incentives (e.g., a chance to be featured on the brand’s social media vs. a discount coupon) to see what generates the most high-quality content and social proof.
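For the referral experiments above, the viral coefficient is commonly defined as the average number of invitations each customer sends multiplied by the share of those invitations that convert into new customers. The sketch below compares two referral offers on that basis. The function name and the counts are hypothetical, and a real comparison would also need a significance test.

```python
def viral_coefficient(customers: int, invites_sent: int, invites_converted: int) -> float:
    """K = (invites per customer) x (invite conversion rate)."""
    if customers == 0 or invites_sent == 0:
        return 0.0
    invites_per_customer = invites_sent / customers
    invite_conversion_rate = invites_converted / invites_sent
    return invites_per_customer * invite_conversion_rate


# Hypothetical results for two referral offers shown to equal-sized groups.
variants = {
    "Give $10, Get $10": viral_coefficient(1_000, 2_000, 300),
    "Give 20%, Get 20%": viral_coefficient(1_000, 1_600, 200),
}
for offer, k in variants.items():
    print(f"{offer}: K = {k:.2f}")
```

A coefficient above 1 would mean each customer brings in more than one new customer on average. Most programs sit well below that, so relative improvement between variants is usually the practical target.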

The following table, The Full-Funnel Experimentation Matrix, consolidates these concepts into a single, actionable reference for practitioners.

| Funnel Stage | Primary Objective | Key Performance Indicators (KPIs) | Example Hypothesis | A/B Testing Examples |
|---|---|---|---|---|
| Awareness (TOFU) | Attract new, relevant audiences and build brand recognition. | Impressions, Reach, Organic Traffic, SEO Rankings, Social Engagement, Bounce Rate, Time on Page. | “If we use a video ad instead of a static image on Facebook, our click-through rate will increase because video is more engaging for our target demographic.” | Test ad creatives (video vs. image), headlines, audience targeting parameters, and content formats (blog vs. infographic). |
| Consideration (MOFU) | Nurture leads, build trust, and differentiate the brand. | Lead Generation Rate, Lead Score, Email Open/Click Rate, Content Download Rate. | “If we reduce the number of fields in our lead magnet form from seven to four, the form submission rate will increase because it reduces friction for the user.” | Test landing page layouts, form lengths, CTA copy (“Download” vs. “Get”), social proof (testimonials vs. logos), and lead magnet offers (ebook vs. webinar). |
| Conversion (BOFU) | Drive purchase decisions and remove final barriers. | Conversion Rate, Customer Acquisition Cost (CAC), Average Order Value (AOV), Cart Abandonment Rate. | “If we offer a ‘Buy Now, Pay Later’ option at checkout, the conversion rate will increase because it addresses budget concerns for high-ticket items.” | Test pricing points, discount offers (% vs. $), checkout flow designs, payment options, and urgency messaging (e.g., “limited stock”). |
| Loyalty | Retain existing customers and increase their lifetime value. | Retention Rate, Churn Rate, Customer Lifetime Value, Repeat Purchase Rate. | “If we implement a tiered loyalty program with exclusive perks for top spenders, the repeat purchase rate will increase because it provides a stronger incentive for continued engagement.” | Test onboarding email sequences, loyalty program structures (points vs. tiers), reward types, and personalized post-purchase offers. |
| Advocacy | Turn satisfied customers into active brand promoters. | Net Promoter Score (NPS), Referral Rate, Volume of User-Generated Content (UGC), Number of Reviews. | “If we change our referral offer from ‘Get $10’ to ‘Give $10, Get $10’, the referral rate will increase because the altruistic framing is more motivating for our customer base.” | Test referral program incentives, UGC contest rules and prizes, and the timing and messaging of requests for reviews or NPS feedback. |
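Before testing any hypothesis in the matrix, it helps to estimate how many visitors each variant needs. The sketch below uses the standard normal-approximation formula for comparing two proportions. The baseline rate, the minimum detectable lift, and the function name are assumptions chosen for illustration, and dedicated testing platforms may calculate this differently.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed per variant to detect a relative lift with a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Hypothetical form test: 10% baseline submission rate, aiming to detect a 20% relative lift.
print(sample_size_per_variant(baseline_rate=0.10, relative_lift=0.20))
```

The required sample grows quickly as the detectable lift shrinks, which is one reason low-traffic pages are better suited to bold changes than to subtle copy tweaks.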

Navigating the Path to Maturity: Common Challenges and Strategic Solutions

The journey to establishing a mature, full-funnel experimentation culture is fraught with challenges. These obstacles are rarely technical in nature; rather, they are deeply rooted in human behavior, organizational inertia, and long-standing corporate norms. Recognizing these roadblocks is the first step toward overcoming them. This section identifies the most common pitfalls that organizations face and provides strategic, actionable solutions to navigate the path toward a culture of continuous learning and data-driven growth.

Identifying the Roadblocks: From Data Distrust to “HiPPO” Dominance

Organizations attempting to foster an experimentation culture typically encounter a consistent set of challenges that can stall or derail progress. These can be categorized into five main areas:

- Cultural Resistance: The most significant barrier is often a deep-seated resistance to change. This manifests as a fear of failure, where experiments that don’t produce a “win” are viewed as wasted resources rather than valuable learning opportunities. This risk aversion is frequently compounded by the dominance of the “HiPPO” (Highest Paid Person’s Opinion), where leadership intuition or authority consistently overrides data-driven insights, reducing experimentation to theater rather than a genuine decision-making tool.

- Lack of Leadership Buy-In: Without active and vocal support from the executive level, experimentation programs are often perceived as a low-priority, tactical exercise. Leaders may pay lip service to being “data-driven” but fail to allocate the necessary budget, empower teams with autonomy, or model the desired behaviors themselves. This lack of genuine commitment trickles down, signaling to the rest of the organization that experimentation is not a strategic imperative.

- Organizational Silos: In many companies, departments like marketing, product, engineering, and data operate in isolation. When experimentation is confined to one of these silos, it leads to inconsistent methodologies, duplicated efforts, and a failure to capitalize on cross-functional insights. A test run by the marketing team to optimize a landing page may miss critical insights that the product team has about user behavior, and the learnings from that test may never be shared back to inform product development.

- Process and Resource Constraints: Even with willing participants, programs can be stifled by bureaucracy. Cumbersome approval chains for launching even minor tests can slow velocity to a crawl, killing momentum and frustrating teams. This is often coupled with a lack of dedicated resources—insufficient budget for tools, a shortage of personnel with analytical skills, or a lack of authority for teams to implement changes based on test results.

- Data and Technical Issues: The foundation of any experimentation program is trustworthy data. However, many organizations suffer from poor data quality, incomplete tracking, or a disorganized data infrastructure. When teams cannot trust the numbers they are seeing, they lose confidence in the results of experiments, and the entire program’s credibility is undermined.

Strategic Solutions for Building Momentum

Overcoming these challenges requires a deliberate and strategic change management approach. Simply buying a tool or hiring an analyst is not enough; the solutions must address the underlying cultural and organizational systems.

- To Secure Buy-In, Speak the Language of Business: Frame the value of experimentation in terms that resonate with leadership: ROI, risk mitigation, and competitive advantage. Start small to generate “quick wins”—high-impact, low-effort experiments that demonstrate tangible value early on. Share these success stories widely to build credibility and create a groundswell of support for the program.

- To Foster Psychological Safety, Redefine “Failure”: Leadership must spearhead the cultural shift from punishing failure to celebrating learning. This can be operationalized by publicly recognizing teams for running well-designed experiments, regardless of the outcome, and by changing the language in reviews from “Did it work?” to “What did we learn?”. When leaders openly discuss their own failed hypotheses, it normalizes the process and encourages others to take calculated risks.

- To Scale Learning, Democratize Experimentation: Make testing accessible to everyone, not just a select group of experts. This involves providing standardized templates for hypotheses, offering training on basic statistical concepts, and investing in user-friendly tools. For low-risk experiments, remove bureaucratic hurdles and empower teams with the autonomy to “test first, review later”.

- To Break Down Silos, Formalize Collaboration: The most effective solution to siloed efforts is the creation of dedicated, cross-functional “growth” or “experimentation” teams that bring together members from marketing, product, engineering, and data. Establishing shared goals and regular, cross-functional rituals—such as a weekly or bi-weekly “Experiment Readout” meeting where all teams share their results and learnings—is critical for disseminating knowledge across the organization.

- To Build Data Trust, Invest in a Solid Foundation: Treat data infrastructure as a first-class business priority. Appoint a Directly Responsible Individual (DRI) or team to own the analytics stack, ensuring data is clean, accurate, and reliable. Create a culture of transparency around data by making dashboards and reports widely accessible and by clearly documenting how key metrics are defined and calculated. This investment is a “slow down to speed up” step that is non-negotiable for long-term success.
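The standardized hypothesis template mentioned above can be as simple as a small structured record. The sketch below is one possible shape, assuming the “If we [change], the [metric] will [direction] because [rationale]” pattern used in this report’s example hypotheses. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """A hypothetical template for recording an experiment hypothesis."""
    funnel_stage: str
    change: str
    metric: str
    expected_direction: str  # "increase" or "decrease"
    rationale: str

    def __post_init__(self):
        # Reject vague directions so every hypothesis is falsifiable.
        if self.expected_direction not in ("increase", "decrease"):
            raise ValueError("expected_direction must be 'increase' or 'decrease'")

    def statement(self) -> str:
        return (f"If we {self.change}, the {self.metric} will "
                f"{self.expected_direction} because {self.rationale}.")


h = Hypothesis(
    funnel_stage="Consideration (MOFU)",
    change="reduce the number of fields in our lead magnet form from seven to four",
    metric="form submission rate",
    expected_direction="increase",
    rationale="it reduces friction for the user",
)
print(h.statement())
```

Requiring a named metric and a stated rationale up front makes it harder to reinterpret a result after the fact, which supports the shift from “Did it work?” to “What did we learn?”.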

The common thread among these challenges and solutions is that the primary obstacles are organizational and human-centric. The core task is to guide the organization through a cultural transformation—from a culture that values “being right” and executing top-down decisions to one that values “learning fast” and making evidence-based choices. This requires treating the initiative as a strategic change management program, not merely a technical or marketing project.

Enabling the Ecosystem: The Technology Stack for Scalable Experimentation

A mature full-funnel experimentation culture cannot operate at scale without a robust and integrated technology stack. These tools are the ecosystem that enables teams to move from hypothesis to insight with speed, rigor, and confidence. However, the selection and implementation of this stack must be a strategic decision, aligned with the organization’s current capabilities and maturity level. Adopting an overly complex enterprise suite too early can create friction and hinder adoption, while failing to invest in necessary tools can cap the program’s potential and lead to unreliable results.

Core Components of the Experimentation Stack

Every effective experimentation program is built upon a foundation of three core technology categories. These tools provide the essential capabilities for understanding user behavior, running controlled tests, and managing the data that fuels the entire process.

- Visitor Behavior & Analytics Tools: This category forms the starting point for any experiment by answering the question, “What are our users doing and where are the opportunities?”

- Web and Product Analytics Platforms (e.g., Google Analytics, Amplitude, Mixpanel): These are fundamental for tracking user flows, measuring conversion rates at each step of the funnel, and segmenting users based on behavior or demographics. They provide the quantitative data needed to identify problem areas (e.g., a high drop-off rate on a specific page).

- Qualitative Behavior Analysis Tools (e.g., Hotjar, Contentsquare, Microsoft Clarity): These tools provide the “why” behind the “what.” Heatmaps visualize where users click and scroll, session recordings allow teams to watch individual user journeys to identify points of friction or confusion, and on-page surveys gather direct user feedback. This qualitative insight is invaluable for generating strong, user-centric hypotheses.

- A/B Testing & Experimentation Platforms: These are the engines that execute the experiments. They handle the technical aspects of splitting traffic, serving variations, and collecting performance data. The market includes a wide range of solutions:

- Client-Side Platforms (e.g., VWO, Optimizely, AB Tasty, and formerly Google Optimize, which Google discontinued in 2023): These tools use JavaScript to modify the user’s browser experience. They are generally easier for marketing teams to implement without heavy engineering involvement and are ideal for testing changes to the user interface, copy, and layout.

- Server-Side Platforms (e.g., Statsig, LaunchDarkly, Optimizely Full Stack): These tools run experiments on the server before the page is rendered to the user. They are more powerful for testing complex product logic, algorithms (like search or recommendation engines), and multi-step flows. They require more engineering integration but offer greater flexibility and performance.

- Data Management & Integration: To get a unified view of the customer and the true impact of experiments, data must be consolidated.

- Customer Data Platforms (CDPs) and Integration Tools (e.g., Segment, Workato): These platforms collect event data from all customer touchpoints (website, mobile app, CRM) and route it to a centralized location, ensuring consistency.

- Data Warehouses (e.g., Snowflake, BigQuery): This central repository stores all raw data, allowing data scientists to perform deep, custom analyses of experiment results that may not be possible within the A/B testing tool itself.
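The traffic splitting that experimentation platforms perform can be illustrated with deterministic, hash-based assignment: the same user always lands in the same variant, with no assignment table to store. This is a generic sketch of the technique, not the algorithm of any specific vendor listed above. The function name and the experiment key are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants: tuple = ("control", "treatment")) -> str:
    """Deterministically map a user to one variant of an experiment."""
    # Salting with the experiment name keeps assignments independent across tests.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]


# The same user gets the same answer on every request.
print(assign_variant("user-123", "checkout-guest-option"))
print(assign_variant("user-123", "checkout-guest-option"))
```

Because the assignment depends only on the user and experiment identifiers, client-side and server-side code can agree on a user’s variant as long as they share the same identifiers.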

Advanced Tooling for Mature Programs

As an organization’s experimentation program matures and moves from “Walk” to “Run” and “Fly,” its needs become more sophisticated. The technology stack must evolve to support higher velocity, greater complexity, and deeper integration with product development processes.

- Feature Flagging & Management Platforms (e.g., LaunchDarkly, Statsig, Split.io): These tools are essential for modern, agile development and mature experimentation programs. Feature flags decouple code deployment from feature release, allowing teams to turn features on or off for specific user segments without a new code push. This enables controlled rollouts (e.g., releasing a new feature to 1% of users, then 10%, then 50%), instant rollbacks if a problem is detected, and the ability to run complex, server-side experiments on core product functionality.

- Personalization Engines (e.g., Adobe Target, Kameleoon): While distinct from pure A/B testing, personalization is its close cousin. These engines use machine learning and user data to deliver tailored experiences to different audience segments. Mature organizations use their experimentation platforms to test the effectiveness of different personalization strategies, ensuring that these complex algorithms are actually driving positive business outcomes.

- Project Management & Knowledge Sharing Platforms (e.g., Coda, Notion, Confluence, Airtable): While simple spreadsheets can suffice for early-stage programs, mature programs running hundreds or thousands of tests a year require a dedicated system for managing the entire experimentation lifecycle. These platforms serve as the central nervous system for the program, housing the idea backlog, the prioritized roadmap, detailed specifications for each experiment, and, most importantly, the searchable repository of all past results and learnings.
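The controlled rollouts that feature flags enable (1% of users, then 10%, then 50%) can be sketched with a stable per-user bucket. Users who see the feature at 1% keep seeing it as the percentage rises, and setting the percentage to zero acts as an instant rollback. This is an illustrative sketch under those assumptions, not the implementation of any specific flagging platform. The function names and the flag key are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str, flag: str) -> float:
    """Map a user to a stable position in the range [0, 100)."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) / 0x100000000 * 100


def is_enabled(user_id: str, flag: str, rollout_percent: float) -> bool:
    """A feature is on for users whose bucket falls below the rollout percentage."""
    return rollout_bucket(user_id, flag) < rollout_percent


users = [f"user-{i}" for i in range(10_000)]
for percent in (1, 10, 50):
    enabled = sum(is_enabled(u, "new-checkout", percent) for u in users)
    print(f"{percent}% rollout -> {enabled} of {len(users)} users enabled")
```

Because the rollout percentage is data rather than code, changing it requires no deployment. This is the decoupling of release from deployment described above.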

The strategic selection of these tools should follow the organization’s cultural roadmap. A “Crawl” stage team might succeed with a simple combination of Google Analytics and a free A/B testing tool. A “Fly” stage organization, however, will require a deeply integrated stack that includes server-side feature flagging, a customer data platform, and a powerful data warehouse to support its high-velocity, full-funnel experimentation engine. The technology should always be in service of the culture and process, not the other way around.

Case Studies & Strategic Recommendations

Theory and frameworks provide the blueprint, but real-world examples and a clear, actionable roadmap are what translate strategy into execution. This final section grounds the principles of a full-funnel experimentation culture in the practices of market-leading companies that have successfully embedded this DNA into their operations. By examining how organizations like Booking.com, Netflix, Amazon, and Microsoft leverage experimentation, we can distill their successes into a phased implementation plan and a set of direct recommendations for business leaders poised to begin or accelerate their own transformation.

Learning from the Leaders: Case Studies in Full-Funnel Experimentation

The world’s most innovative digital companies are not just users of experimentation; they are defined by it. Their success stories offer powerful validation of the principles outlined in this report.

- Booking.com: The Science of High-Velocity Optimization: Booking.com is legendary for its culture of running thousands of experiments simultaneously across its platform. Their approach demonstrates a mastery of testing psychological principles to influence user behavior at the bottom of the funnel. They systematically test every detail of the booking experience, from seemingly minor details like reassuring hostel guests that “Toilet paper included” to powerful risk-reversal mechanisms like “Free cancellation”. A hallmark of their strategy is the use of social proof and scarcity, famously testing variations of messages like “13 people are looking at this hotel” and even displaying sold-out properties to create a credible sense of urgency that drives conversions. Their “Booking.yeah” campaign, which leveraged a Super Bowl ad for top-of-funnel awareness and YouTube for mid-funnel engagement, illustrates a cohesive full-funnel strategy that delivered a 22% lift in brand awareness and a 6% lift in site visitors, demonstrating how they connect broad-reach initiatives to performance outcomes.

- Netflix: Personalization at Unprecedented Scale: Netflix’s dominance is built on a foundation of data-driven personalization, powered by relentless A/B testing. The company treats every aspect of the user experience as a hypothesis to be tested. This extends far beyond content recommendations; Netflix famously experiments with the thumbnail artwork for its shows and movies, showing different images to different user segments to see which one drives more clicks and engagement. They test user interface layouts, preview autoplay settings, and content ranking algorithms to continuously refine the experience and reduce churn. This culture of “informed risk-taking” is so ingrained that any employee with a strong hypothesis is empowered to run a test, letting real user behavior—not executive opinion—dictate product decisions.

- Amazon: A Self-Contained Full-Funnel Ecosystem: Amazon leverages its vast ecosystem of consumer touchpoints, from streaming video and music to e-commerce, to execute and measure full-funnel marketing strategies with unparalleled precision. A brand can use Amazon Ads to run a complete, testable campaign within a closed loop. For example, the chemical manufacturer Sika used Amazon’s video production services and Streaming TV ads to build top-of-funnel awareness among DIY customers. It then used Sponsored Display ads for mid-funnel consideration and retargeting, and Sponsored Products ads to capture bottom-of-funnel purchase intent. Using Amazon’s measurement tools, Sika tracked a 5% increase in aided awareness and a 9% lift in purchase intent, directly connecting its upper-funnel investments to lower-funnel results.

- Microsoft: Experimentation as a Corporate Operating System: Under the leadership of Satya Nadella, Microsoft has undergone a profound cultural transformation, embracing a “growth mindset” with experimentation at its core. This extends far beyond marketing. The company’s internal Experimentation Platform (ExP) powers thousands of A/B tests every month across products like Bing, Office, and Windows. This platform-driven approach allows product teams to test new features, measure their impact on user engagement and satisfaction, and make data-backed decisions before rolling them out globally. The classic example of Bing generating an additional $100 million in annual revenue from a single A/B test on headline formatting illustrates the immense financial impact of a mature experimentation culture. Microsoft’s model shows how experimentation can evolve from a marketing tactic to a fundamental part of the entire software development lifecycle.

A Phased Roadmap for Implementation: Crawl, Walk, Run, Fly

Building a mature experimentation culture is a multi-year journey, not an overnight project. Organizations should adopt a phased approach, building capabilities and expanding the program’s scope incrementally. The “Crawl, Walk, Run, Fly” model provides a useful framework for this evolution.

- Phase 1: Crawl (Months 0-6): The goal is to establish a foundation and prove value.

- Actions: Appoint a single owner or a small, centralized team. Focus on “low-hanging fruit”—high-impact, low-effort tests in a single area of the funnel (often conversion). Run a handful of well-designed experiments and widely socialize any wins to build credibility and secure buy-in. Establish a basic process for hypothesis generation and a simple spreadsheet for tracking results.

- Focus: Proving the concept and building momentum.

- Phase 2: Walk (Months 6-18): The goal is to formalize the process and expand the program’s reach.

- Actions: Grow the centralized team with dedicated roles (e.g., analyst, developer). Standardize the experimentation workflow from ideation to knowledge sharing. Invest in a dedicated A/B testing platform and begin building a more robust knowledge repository. Start expanding tests to other parts of the funnel (e.g., consideration).

- Focus: Establishing a repeatable, scalable process.

- Phase 3: Run (Months 18-36): The goal is to democratize experimentation and scale its impact.

- Actions: Evolve the centralized team into a Center of Excellence (CoE) that focuses on governance, training, and strategy. Begin embedding experimentation capabilities within key product and marketing teams. Invest in more advanced technology, such as server-side testing or feature flagging platforms. The volume of experiments should increase significantly.

- Focus: Scaling the culture and capability across the organization.

- Phase 4: Fly (Year 3+): The goal is for experimentation to be fully integrated into the corporate DNA.

- Actions: Experimentation is the default method for decision-making across most departments. The CoE provides high-level strategic guidance, and autonomous, cross-functional teams run hundreds or thousands of tests per year. Experiment results directly inform high-level business and product strategy. The organization is a true learning machine.

- Focus: Using experimentation to drive strategic innovation and maintain market leadership.

Key Actionable Recommendations for Leadership

For executives seeking to initiate or accelerate this transformation, the path forward requires decisive action and sustained commitment. The following recommendations represent the most critical leadership levers for building a successful full-funnel experimentation culture:

- Publicly Commit and Model the Mindset: Declare experimentation a top-tier strategic priority. Personally champion the “learning from failure” philosophy in all-hands meetings and executive reviews. When a test fails, be the first to ask, “What did we learn, and what will we test next?”

- Appoint a Clear and Empowered Owner: Designate a single, senior leader as the Directly Responsible Individual (DRI) for the entire experimentation program. Provide this leader with the budget, resources, and—most importantly—the political authority to drive change across departmental silos.

- Restructure Incentives to Reward Learning: Work with HR to modify performance review criteria and bonus structures. Shift the focus from rewarding only successful outcomes to rewarding well-designed experiments, valuable insights, and intellectual honesty. Recognize teams that invalidate a major assumption and save the company from a costly mistake.

- Mandate and Invest in a Centralized Knowledge System: Make the creation and maintenance of a single, accessible repository for all experiment results a non-negotiable requirement. Invest in the necessary tools and personnel to make this system the “source of truth” for customer insights, and make its use a standard part of project planning and review processes.

The Future of Experimentation: The Role of AI and Machine Learning

Looking ahead, the pace and sophistication of experimentation will only accelerate, driven largely by advancements in Artificial Intelligence (AI) and Machine Learning. These technologies are not a replacement for the cultural foundations of experimentation but rather a powerful amplifier. AI can be leveraged to automate and enhance several stages of the experimentation lifecycle: generating hypotheses by analyzing vast datasets for patterns in user behavior, creating variations in ad copy and design at scale, and powering the personalization engines that deliver tailored experiences to millions of individual users. As these technologies mature, they will enable organizations to run more complex experiments, analyze results with greater depth, and shorten the learning cycle from weeks to days or even hours. The companies that have already built a strong cultural and procedural foundation for experimentation will be best positioned to harness the power of AI, further widening the gap between themselves and their less agile competitors. The future belongs to the fastest learners.