Optimizing Content for LLM Search & AI-Driven Web

Section 1: The Paradigm Shift from Search to Synthesis

1.1. Introduction: Beyond Ten Blue Links

The landscape of digital information discovery is undergoing its most profound transformation since the advent of the search engine. For decades, the dominant paradigm has been the Search Engine Results Page (SERP)—a list of ten blue links presented in response to a user’s keyword query. Success in this environment was defined by achieving a high rank to attract user clicks. Today, this model is being fundamentally disrupted by the rise of Large Language Models (LLMs) and generative AI, which are shifting the primary mode of interaction from search to synthesis.

Platforms like OpenAI’s ChatGPT, Google’s Gemini, and Perplexity are not merely new search tools; they are answer engines. They respond to conversational queries by synthesizing information from a multitude of sources into a single, coherent, and direct answer. This evolution gives rise to the “zero-click” environment, where users receive the information they need without ever clicking through to a source website. For businesses and content creators, this represents a seismic shift. The traditional goal of ranking a webpage is being superseded by a new imperative: becoming a trusted, citable source for the AI itself.

This report will detail the strategic and tactical adjustments required to thrive in this new ecosystem. It is no longer sufficient to optimize for search engine crawlers and human readers alone. The new audience is the AI: a sophisticated, data-driven consumer of information that evaluates content based on a different set of criteria. Visibility in this new era is not about being remembered by users, but about being “remembered by AI.” This is not an incremental change but an evolutionary leap. The strategies outlined herein are designed not just to adapt to the current environment but to secure a brand’s relevance and authority in an increasingly AI-native internet.

1.2. Deconstructing the New Optimization Lexicon

The rapid evolution of AI-driven search has given rise to a new vocabulary of optimization disciplines. While often used interchangeably, these terms represent distinct but related strategic functions. A precise understanding of each is critical for developing a coherent and effective strategy.

LLM Seeding

LLM Seeding is the broad, strategic practice of creating and distributing content with the specific intention of having it ingested into the training datasets and retrieval databases that Large Language Models utilize. The fundamental goal is to proactively “teach” the AI about a brand, its products, and its domain expertise. It is not about ranking a specific page but about embedding the brand as a known, citable entity within the AI’s foundational knowledge and logic. This involves placing highly informative, structured, and authoritative content across a wide range of digital channels that are frequently crawled and referenced by AI systems. The objective is to increase the probability that when an LLM generates a response related to a specific industry or topic, it will mention, cite, or recommend the seeded brand.

Large Language Model Optimization (LLMO)

Large Language Model Optimization (LLMO) is the more technical and structural discipline of formatting digital content to ensure it is machine-readable, semantically clear, and easily extractable by AI systems. While LLM Seeding is concerned with the distribution of content, LLMO is focused on the construction of that content. Its primary emphasis is on comprehension and reusability at a granular, snippet-level, rather than on page-level rankings. LLMO involves optimizing sentence structure, using clear and unambiguous language, employing semantic HTML, and leveraging structured data to make content as digestible as possible for an AI parser. The success of LLMO is measured not in clicks, but in the accuracy and frequency of citations and the correct extraction of information fragments.

Generative Engine Optimization (GEO)

Generative Engine Optimization (GEO) is the practice of adapting and optimizing digital content to improve its visibility specifically within the AI-generated answers of search engines, such as Google’s AI Overviews. First defined in an academic paper in late 2023, GEO represents the application of LLMO principles to the specific, high-stakes environment of a generative search interface. The primary goal of GEO is to “dominate AI-generated answers” by ensuring that a brand’s content is not only retrieved by the underlying LLM but is also selected and prominently featured in the final synthesized response presented to the user. It focuses intensely on format clarity, AI parsing, and satisfying the contextual and intent-based algorithms that drive these new search experiences.

1.3. A Comparative Analysis: SEO vs. LLM Seeding vs. LLMO vs. GEO

The distinctions between these new disciplines and traditional Search Engine Optimization (SEO) are fundamental. SEO was developed for a world of indexed web pages and user clicks, whereas the new trinity of LLM Seeding, LLMO, and GEO is designed for a world of synthesized answers and machine comprehension. The following table provides a detailed comparative analysis, synthesizing data from multiple industry reports and technical analyses to clarify the strategic differences.

| Dimension | Traditional SEO | LLM Seeding | Large Language Model Optimization (LLMO) | Generative Engine Optimization (GEO) |
|---|---|---|---|---|
| Primary Goal | Achieve high rankings on SERPs for “blue links” to drive clicks. | Get a brand’s information cited, mentioned, or recommended in LLM responses across various platforms. | Ensure content is accurately understood, extracted, and reused by AI systems at a granular level. | Dominate the AI-generated answer space within search engines (e.g., Google AI Overviews). |
| Core Focus | Keywords and backlinks as primary signals of relevance and authority. | Entities and repetition of brand association with core topics across multiple channels. | Semantic structure, machine readability, and precision of information for easy parsing. | AI parsing effectiveness, format clarity, and alignment with generative engine output patterns. |
| Key Tactics | On-page optimization, technical SEO for crawlers, and external link building. | Multi-channel content distribution on platforms like Reddit, Quora, and industry publications. | Schema markup, structured writing (answer-first), semantic HTML, and concise language. | Content structuring for direct answers, demonstrating E-E-A-T signals, and intent-specific optimization. |
| Success Metrics | Organic traffic, click-through rate (CTR), keyword rankings, and conversions from clicks. | Frequency of citations and brand mentions, rise in no-click impressions, and growth in direct/branded search. | Context accuracy, extractability of content fragments, and reuse in AI-generated outputs. | Inclusion in AI-generated answers, visibility in zero-click environments, and share of voice in AI responses. |
| Primary Audience | Human readers and traditional search engine crawlers (e.g., Googlebot). | LLM training processes and real-time retrieval systems (RAG). | AI parsers and language models processing content for comprehension. | AI-powered generative search interfaces and the users interacting with them. |

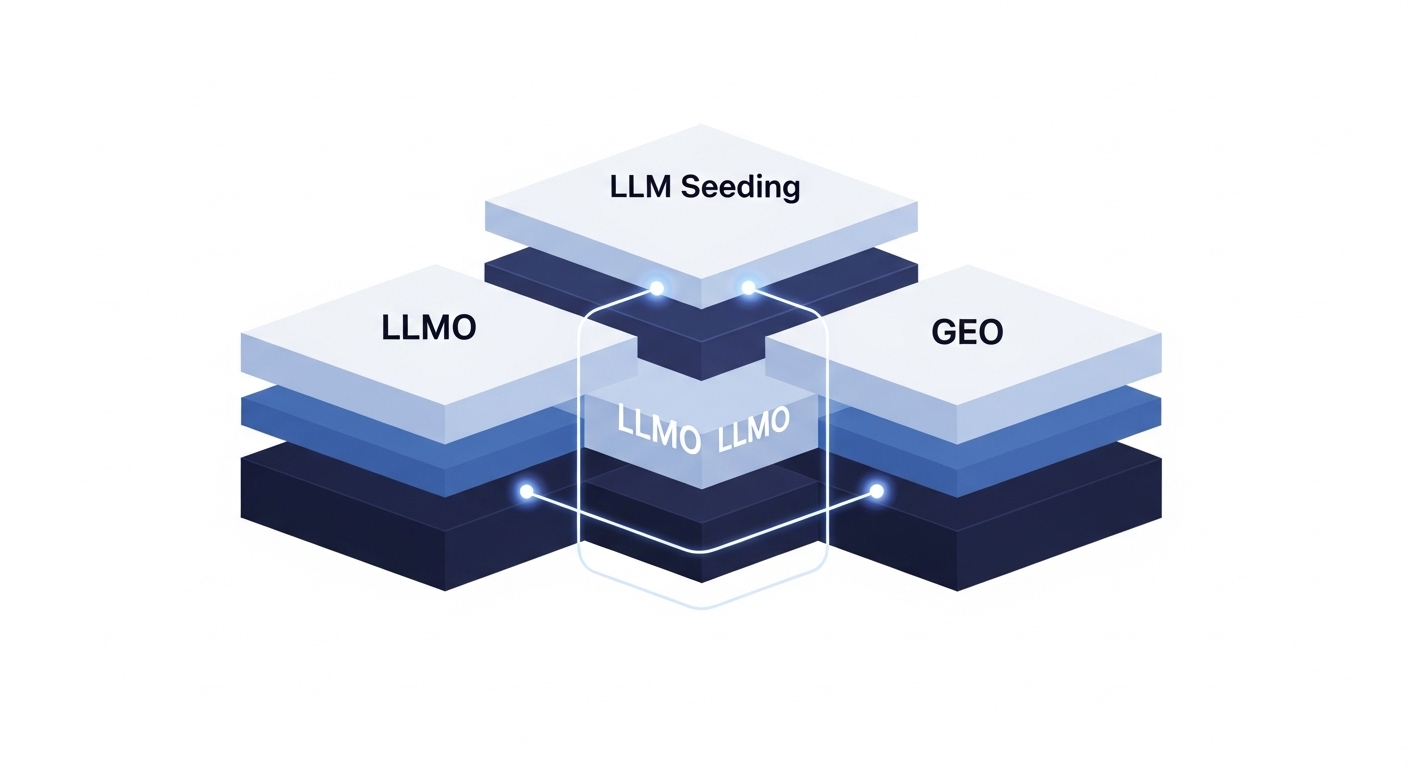

A deeper analysis reveals that these new disciplines are not competing strategies but rather form an interdependent, strategic stack. LLM Seeding, LLMO, and GEO are layers of a single, unified approach to achieving visibility in the AI era. An effective program must follow a logical sequence. It begins with a broad LLM Seeding strategy to establish a widespread presence and build brand-entity associations across the web. Without this foundational step, even perfectly optimized content on a corporate website may remain undiscovered by AI systems that value consensus from diverse sources.

Simultaneously, the principles of LLMO must be applied to all content created, both on-site and for off-site distribution. Placing content on a high-value channel like Reddit (a seeding tactic) is ineffective if that content is a wall of unstructured text that an AI cannot parse. LLMO provides the structural integrity needed for the seeding strategy to succeed.

Finally, GEO represents the desired outcome within the most prominent channel: search engines. Achieving consistent visibility in Google’s AI Overviews is the result of successful seeding (building authority across the web) and meticulous LLMO (making the content perfectly structured for extraction). Therefore, a successful strategy does not choose between these approaches but executes them in concert: Seeding to build presence, LLMO to ensure comprehension, and GEO as the measured result.
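Once you have collected a set of AI answers, simple counting is enough to track success metrics such as brand mentions and share of voice in AI responses. The sketch below assumes you already have the response texts, for example from running a fixed list of prompts through each platform. The brand names are hypothetical, and the whole-word matching rule is a simplification of our own, not an industry-standard measurement method.

```python
import re


def _mentions(text: str, brand: str) -> int:
    # Whole-word, case-insensitive count of one brand name in one response.
    return len(re.findall(r"\b" + re.escape(brand) + r"\b", text, flags=re.IGNORECASE))


def share_of_voice(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Each brand's fraction of all tracked brand mentions across the responses."""
    counts = {brand: sum(_mentions(r, brand) for r in responses) for brand in brands}
    total = sum(counts.values())
    if total == 0:
        return {brand: 0.0 for brand in brands}
    return {brand: counts[brand] / total for brand in brands}


def inclusion_rate(responses: list[str], brand: str) -> float:
    """Fraction of responses that mention the brand at least once."""
    if not responses:
        return 0.0
    return sum(1 for r in responses if _mentions(r, brand) > 0) / len(responses)


# Hypothetical AI answers to the same prompt, collected across platforms.
answers = [
    "Acme and Globex both offer CRMs for small teams.",
    "Acme is a popular choice for startups.",
    "Initech focuses on enterprise customers.",
]
print(share_of_voice(answers, ["Acme", "Globex", "Initech"]))
print(inclusion_rate(answers, "Acme"))
```

If you rerun the same prompts over time, these numbers become a trend line. Exact-name matching will miss paraphrased or misspelled references to a brand.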

Section 2: The Mechanics of AI Information Retrieval and Generation

To effectively optimize content for Large Language Models, it is essential to first understand the fundamental mechanics of how these systems discover, process, and generate information. This is not a “black box” but a complex yet comprehensible engineering system. Understanding its architecture and processes reveals precisely why the optimization strategies detailed in subsequent sections are effective.

2.1. Inside the Black Box: How LLMs Learn and Reason

At the heart of modern LLMs is a neural network architecture known as the Transformer, first introduced by Google researchers in 2017. The Transformer architecture was a significant breakthrough because it replaced the sequential processing of earlier models with a mechanism called “self-attention,” allowing for the parallel processing of text.

This innovation enables models to handle long sequences of words and capture complex, long-range dependencies and patterns within language, which is crucial for understanding context.

The process by which an LLM interacts with text begins with Tokenization. Text is broken down into smaller units, or “tokens,” which can be words, parts of words, or characters. These tokens are then converted into a numerical format that the machine learning model can process.
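The sketch below makes subword tokens concrete. It splits words greedily, always taking the longest match against a tiny hand-written vocabulary. This is a simplified illustration only: production tokenizers such as byte-pair encoding learn vocabularies with tens of thousands of entries from data. The vocabulary and the IDs here are hypothetical.

```python
def tokenize(text: str, vocab: dict[str, int], unk: str = "<unk>") -> list[int]:
    """Split each word into the longest vocabulary pieces, left to right, and map them to IDs."""
    ids = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            # Try the longest possible piece first, shrinking until a match is found.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in vocab:
                    ids.append(vocab[piece])
                    start = end
                    break
            else:
                # No vocabulary piece starts here: emit the unknown token and skip one character.
                ids.append(vocab[unk])
                start += 1
    return ids


# Hypothetical toy vocabulary mapping token strings to integer IDs.
VOCAB = {"<unk>": 0, "token": 1, "ization": 2, "is": 3, "fun": 4, "s": 5}

print(tokenize("Tokenization is fun", VOCAB))  # [1, 2, 3, 4]
```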

Following tokenization, these numerical representations are mapped to Word Embeddings. These are not simple numerical IDs but multi-dimensional vectors in a high-dimensional space. The key property of word embeddings is that words with similar contextual meanings are located close to each other in this vector space. This allows the model to grasp semantic relationships—for example, understanding that “king” is to “queen” as “man” is to “woman”—and to process nuance far beyond simple keyword matching.
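Vector arithmetic and cosine similarity can illustrate the “king is to queen as man is to woman” relationship. The four-dimensional vectors below are hand-picked toy values, with dimensions loosely meaning royalty, male, female, and food. They are not real embeddings. Real embeddings are learned from data and have hundreds or thousands of dimensions.

```python
import math

# Hypothetical hand-picked vectors: [royalty, male, female, food].
EMB = {
    "king": [0.9, 0.8, 0.1, 0.0],
    "queen": [0.9, 0.1, 0.8, 0.0],
    "man": [0.1, 0.8, 0.1, 0.0],
    "woman": [0.1, 0.1, 0.8, 0.0],
    "apple": [0.0, 0.0, 0.0, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal (unrelated)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def analogy(a: str, b: str, c: str, emb: dict[str, list[float]]) -> str:
    """Solve 'a is to b as c is to ?' by finding the word nearest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(emb[a], emb[b], emb[c])]
    candidates = [w for w in emb if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(emb[w], target))


print(analogy("man", "king", "woman", EMB))  # queen
```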

It is critical to distinguish between the two primary phases of an LLM’s lifecycle: training and inference.

- Pre-training: In this initial phase, the LLM is trained on a massive, static corpus of text and data from the internet, books, and other sources. This is typically done using “self-supervised learning,” where the model learns to predict the next word in a sequence or fill in missing words. This process is what endows the model with its general knowledge of grammar, facts, and reasoning abilities. However, because the training data is static, the model’s knowledge has a specific cut-off date.

- Inference: This is the operational phase where the trained model responds to a user’s prompt. It does this by tokenizing the prompt, converting it into embeddings, and then using its Transformer architecture to generate a response one token at a time. At each step, it calculates a probability distribution over all potential next tokens and selects one. It may take the single most likely token (greedy decoding) or sample a token from that distribution, which is why the same prompt can yield different answers. In this sense the model functions as a highly sophisticated statistical prediction machine.
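The last step of inference turns raw model scores (logits) into a choice of next token. A softmax over the logits is enough to sketch it. The logits below are made-up values for a hypothetical prompt such as “The capital of France is”. The temperature parameter is one common way decoders sharpen or flatten the distribution before choosing.

```python
import math
import random


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def greedy(probs: dict[str, float]) -> str:
    """Greedy decoding: always pick the most probable token."""
    return max(probs, key=probs.get)


def sample(probs: dict[str, float], rng: random.Random) -> str:
    """Sampling: pick a token at random, weighted by its probability."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical logits for candidate continuations.
logits = {"Paris": 5.0, "Lyon": 2.0, "London": 1.0}
probs = softmax(logits)
print(greedy(probs))                     # Paris
print(sample(probs, random.Random(42)))  # a weighted random pick; depends on the seed
```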

2.2. Retrieval-Augmented Generation (RAG): The Bridge to Real-Time Relevance

The static nature of pre-trained LLMs presents a significant limitation: their knowledge quickly becomes outdated, and they lack domain-specific information not present in their training data. Retrieval-Augmented Generation (RAG) is the critical technology that bridges this gap, enabling LLMs to access and incorporate real-time, external information into their responses without the need for costly and time-consuming retraining. RAG transforms a static knowledge model into a dynamic answer engine.

The RAG process can be broken down into four distinct stages, which together allow an LLM to ground its responses in current and authoritative data:

- Indexing: A corpus of external data—such as a company’s internal documentation, a set of product manuals, or a curated collection of web pages—is processed. The content is chunked into manageable pieces, and each chunk is converted into a numerical vector embedding. These embeddings are then stored in a specialized vector database, creating a searchable knowledge library.

- Retrieval: When a user submits a query, the query itself is converted into a vector embedding using the same model. The system then performs a relevancy search within the vector database, identifying and retrieving the document chunks whose embeddings are mathematically closest to the query’s embedding.

- Augmentation: The retrieved document chunks, which represent the most relevant external information, are then combined with the user’s original prompt. This augmented prompt provides the LLM with fresh, specific context that was not part of its original training.

- Generation: Finally, the LLM generates a response based on the rich context provided by the augmented prompt. This allows the model to synthesize an answer that incorporates both its general pre-trained knowledge and the specific, up-to-date information from the retrieved documents. Crucially, the system records which documents were retrieved and passed to the model, so it can attribute parts of the answer to those sources and display them as citations.
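A few dozen lines are enough to sketch all four stages end to end. The sketch rests on several assumptions. A bag-of-words term-count vector stands in for a neural embedding model, and a Python list stands in for the vector database. The document IDs are hypothetical. The generation step is left as a comment because it needs a real LLM.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for an embedding model: a sparse vector of lowercase term counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def chunk(text: str, max_words: int = 40) -> list[str]:
    # Fixed-size word windows; real pipelines often chunk by heading or paragraph instead.
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def build_index(docs: dict[str, str]) -> list[tuple[str, str, Counter]]:
    # 1. Indexing: store (source id, chunk text, vector) for every chunk.
    return [(src, piece, embed(piece)) for src, text in docs.items() for piece in chunk(text)]


def retrieve(index, query: str, k: int = 2):
    # 2. Retrieval: embed the query the same way and rank chunks by similarity.
    q = embed(query)
    return sorted(index, key=lambda item: cosine(q, item[2]), reverse=True)[:k]


def augment(query: str, hits) -> str:
    # 3. Augmentation: prepend the retrieved chunks, tagged with their source ids.
    context = "\n".join(f"[{src}] {text}" for src, text, _ in hits)
    return f"Answer using only these sources and cite them by id.\n{context}\n\nQuestion: {query}"


# Hypothetical mini-corpus.
docs = {
    "returns-policy": "Items can be returned within 30 days of delivery for a full refund.",
    "shipping-faq": "Standard shipping takes 3 to 5 business days within the country.",
}
index = build_index(docs)
question = "Can items be returned for a refund?"
hits = retrieve(index, question, k=1)
prompt = augment(question, hits)
print(prompt)
# 4. Generation: `prompt` would be sent to an LLM here; the source ids in `hits`
#    are what let the system attach citations to the answer.
```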

The advent of RAG fundamentally changes the optimization landscape. The efficiency and accuracy of the retrieval step are paramount. If a system cannot effectively discover and ingest high-quality external content, the quality of the final generated answer will be severely compromised. This places a new and significant premium on content that is structured for machine readability. Strong technical SEO practices are no longer merely “ranking factors” for a single search engine; they are foundational prerequisites for being a reliable data source for any RAG-powered AI system. A technically sound website, with clean HTML, fast load times, and clear architecture, is more likely to be successfully crawled, indexed, and retrieved by the RAG systems of ChatGPT, Perplexity, and Gemini, making technical health a universal asset in the AI era.

2.3. How Different LLMs Access Web Data

While the RAG framework is a general model, the specific methods used by major AI platforms to access web data vary, reflecting their unique architectures and business strategies.

- OpenAI (ChatGPT/SearchGPT): OpenAI’s models, including GPT-4, leverage partnerships with search providers like Microsoft Bing to access real-time web data via APIs. The introduction of SearchGPT represents a more integrated approach, designed to pull up-to-date information directly from the web and provide clear citations in its responses. When a query requires current information, the system formulates a search query, sends it to the search API, receives the results, and then uses the content from the top-ranking pages as the external knowledge base for its RAG process.

- Google (Gemini): Gemini is unique in its deep, native integration with Google’s own colossal search index. It does not need to rely on third-party search APIs. Instead, it can directly leverage Google’s decades of crawling and indexing infrastructure, including sitemaps, internal links, and backlinks, to discover and prioritize web content. This gives Gemini access not only to the raw text of web pages but also to Google’s structured knowledge bases, such as the Knowledge Graph, providing it with a rich, pre-processed understanding of entities and their relationships.

- Perplexity & Claude: Perplexity positions itself as a “conversational answer engine” that orchestrates multiple technologies to deliver sourced answers. It utilizes a range of LLMs, including models from Anthropic (Claude) and fine-tuned open-source models, often accessed via services like Amazon Bedrock. To access real-time web data, Perplexity’s system likely executes live searches using APIs from major search engines. Its proprietary models are specifically designed to prioritize knowledge retrieved from the internet over their static training data, making them particularly effective for time-sensitive queries. This architecture allows it to provide answers with a curated list of sources, giving users visibility into the information’s origin.
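The search-then-ground flow described above can be sketched by treating the search API as a plain function. Everything in the sketch is hypothetical. `fake_search` stands in for a real search provider, the result fields (`url`, `title`, `snippet`) are an assumed shape, the `top_n=3` cutoff is arbitrary, and query formulation is deliberately naive. The point is structural: only pages that the search step returns can appear in the numbered source list, so ranking in the underlying index still matters.

```python
from typing import Callable

# Assumed shape of one search result: {"url": str, "title": str, "snippet": str}.
SearchFn = Callable[[str], list[dict]]


def formulate_query(question: str) -> str:
    # Naive query formulation (assumption): trim whitespace and trailing question marks.
    return question.strip().rstrip("?")


def grounded_prompt(question: str, search: SearchFn, top_n: int = 3) -> tuple[str, list[str]]:
    """Run a search, keep the top results, and build a prompt with numbered sources."""
    results = search(formulate_query(question))[:top_n]
    sources = [r["url"] for r in results]
    context = "\n".join(
        f"[{i}] {r['title']}: {r['snippet']}" for i, r in enumerate(results, start=1)
    )
    prompt = f"Use the numbered sources and cite them like [1].\n{context}\n\nQuestion: {question}"
    return prompt, sources


def fake_search(query: str) -> list[dict]:
    # Hypothetical stand-in for a search API; returns results in ranked order.
    return [
        {"url": f"https://example.com/{n}", "title": f"Result {n}", "snippet": f"About {query}"}
        for n in range(1, 6)
    ]


prompt, sources = grounded_prompt("What is LLM seeding?", fake_search)
print(sources)  # the three top-ranked URLs, in rank order
```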

Section 3: The Content Pillar: Architecting for Machine Comprehension and Credibility

With a clear understanding of the technical mechanics driving LLMs, the focus now shifts to the practical application of this knowledge in content strategy. Optimizing content for AI is not about tricking an algorithm; it is about creating information that is fundamentally clear, credible, and structured for machine comprehension. The goal is to make your content the most reliable, efficient, and useful source for an AI system tasked with synthesizing an answer.

3.1. The E-E-A-T Framework in the Age of AI

The principles of Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T), originally developed by Google to assess content quality for its search rankings, remain profoundly relevant in the age of AI, though they are interpreted through a different lens. While traditional search engines evaluate these signals structurally—counting backlinks, verifying author credentials, and checking for technical trust signals like HTTPS—LLMs evaluate them linguistically and contextually.

An LLM functions as a probability machine, assessing authority based on patterns it has learned from its vast training data. It doesn’t “check” a backlink profile; instead, it recognizes the linguistic markers of expertise, such as the correct and confident use of technical terminology, logical and structured explanations, and a clear, authoritative tone. Most importantly, LLMs establish trust and authority through consistency across multiple, diverse sources. If numerous independent documents corroborate a piece of information or associate a specific brand with a particular topic, the AI perceives this consensus as a powerful signal of reliability.

To optimize for an LLM’s interpretation of E-E-A-T, content strategies must focus on:

- Demonstrating Experience: Incorporate firsthand knowledge that cannot be easily replicated. This includes personal anecdotes, real-world case studies, and specific insights derived from direct use of a product or service.

- Showcasing Expertise: Content should be created or reviewed by verified experts. Including detailed author biographies with credentials and qualifications provides explicit signals of expertise that can be parsed by AI systems.

- Building Authoritativeness: This is achieved through the digital consensus mentioned above. It involves not just on-site content but a broader strategy of getting the brand mentioned consistently in connection with its core topics across the web.

- Establishing Trustworthiness: Factual accuracy is paramount. All claims should be meticulously researched and, where appropriate, supported by citations to credible, primary sources like academic studies or government data. Transparency, through clear contact information and a detailed “About Us” page, also contributes to this signal.

3.2. Principles of Extraction-Friendly Content Design

The central principle of content design for LLMs is modularity. An AI does not consume an article as a linear narrative; it dissects it into its constituent parts, extracting the most relevant fragments to assemble a new answer. Therefore, content must be architected for this process of “Lego-like” disassembly and reassembly. Each piece of information should be a self-contained, context-rich “block” that can be lifted and used independently without losing its meaning.

This modular approach is achieved through several key design principles:

- Semantic Chunking: This is the practice of breaking content into small, focused, and logically distinct sections. The “one idea per paragraph” rule is a fundamental tenet. Paragraphs should be kept short, ideally between three and five sentences, to ensure that each chunk has a clear and consistent meaning, which is essential for the embedding algorithms used in RAG systems.

- Structural Clarity: A clear and logical heading hierarchy (H1 for the main title, H2 for major sections, H3 for sub-points) is not merely a stylistic choice; it serves as a critical roadmap for AI parsers, allowing them to understand the structure and topical boundaries of the content. The use of bulleted and numbered lists is highly encouraged, as they create discrete, modular items of information that are exceptionally easy for an LLM to extract and present in its own generated lists. Tables serve a similar function for comparative data, providing a highly structured format that machines can parse with great accuracy.

- Linguistic Precision: The language used must be direct, clear, and unambiguous. Unexplained jargon, metaphors, and overly complex or “lyrical” sentence structures should be avoided, as they can confuse parsing algorithms. A particularly effective writing style is the “answer-first” or “inverted pyramid” model, where a section begins with a direct, one-sentence answer to a potential question, followed by supporting details. This format aligns closely with how LLM-powered systems retrieve and present information, making the opening sentence a strong candidate for extraction as a direct answer or featured snippet.
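You can check the chunking and “one idea per paragraph” guidance mechanically. The sketch assumes a plain-text draft with `#`-prefixed headings and blank lines between paragraphs; both are assumptions about your source format. It attaches each paragraph to its nearest heading so the chunk keeps its context. It also flags paragraphs above the five-sentence guideline, using a deliberately naive sentence counter.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    heading: str    # nearest preceding heading, kept so the chunk stands alone
    text: str
    sentences: int  # naive count of sentence-ending punctuation


def semantic_chunks(doc: str) -> list[Chunk]:
    """Split a draft into one chunk per paragraph, each tagged with its heading."""
    chunks, heading = [], ""
    for block in re.split(r"\n\s*\n", doc.strip()):
        block = block.strip()
        if block.startswith("#"):
            heading = block.lstrip("#").strip()
            continue
        # Counts '.', '!' or '?' followed by whitespace or end of text; abbreviations over-count.
        count = len(re.findall(r"[.!?](?=\s|$)", block))
        chunks.append(Chunk(heading, block, count))
    return chunks


def too_long(chunks: list[Chunk], max_sentences: int = 5) -> list[Chunk]:
    """Return chunks that exceed the sentence guideline."""
    return [c for c in chunks if c.sentences > max_sentences]


draft = """## What is LLM Seeding?

LLM Seeding is the practice of distributing content so AI systems ingest it. It aims to get a brand cited.

## How is it measured?

It is measured by citation frequency."""

for c in semantic_chunks(draft):
    print(f"{c.heading} -> {c.sentences} sentence(s)")
```

One possible design: when these chunks are embedded, prefix each with its heading (for example `f"{c.heading}: {c.text}"`) so a chunk lifted on its own still carries its topic.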

3.3. High-Impact Content Formats for LLM Citation

While the principles of extraction-friendly design should be applied to all content, certain formats are inherently better suited for LLM consumption and are disproportionately likely to be cited. A strategic content plan should prioritize the creation of these high-impact assets.

- FAQs and Q&A Formats: This is the most natural format for an answer engine. Content structured as a series of questions and direct answers directly mirrors the conversational interaction model of LLMs. Headings should be phrased as complete, natural-language questions that users are likely to ask (e.g., “How does LLM Seeding work?”). The answer should immediately follow the heading, with the most critical information front-loaded in the first sentence.

- “Best Of” Lists and Comparison Tables: LLMs are frequently used for decision-making and comparative queries (e.g., “best CRM for small businesses”). Structured lists and tables that compare products or services on clear, consistent criteria provide immense value to these queries. It is crucial to be transparent about the selection and rating criteria used in these lists to build trust and authority.

- Glossaries and Definitions: By creating a dedicated glossary or a series of articles that provide clear, concise, and standalone definitions for core industry terminology, a brand can establish itself as the go-to authority for foundational knowledge. LLMs often seek out simple, direct definitions to include in their responses, making this a highly effective format for citation.

- First-Person Reviews and Case Studies: These formats are powerful because they provide unique, experience-based information (the “E” in E-E-A-T) that an LLM cannot generate from its training data. To be effective, these pieces must go beyond generic praise and include specific, measurable outcomes, a clear methodology, and a balanced discussion of pros and cons. This provides the kind of original, data-driven insight that LLMs are designed to seek out and reference.

Section 4: The Technical Pillar: Building a Foundation for AI Crawlers

While content quality and structure are paramount, they are ineffective if AI systems cannot efficiently find, access, and interpret the information on a website. The technical foundation of a site is a non-negotiable prerequisite for success in the AI era. This section details the essential technical optimizations required to ensure maximum visibility and readability for AI crawlers and parsers.

4.1. Schema Markup: The Language of AI

Schema markup is a standardized vocabulary of structured data that can be added to a website’s HTML. Its purpose is to attach explicit, machine-readable labels to the content on a page, removing ambiguity about what each element represents. For an LLM, which must otherwise parse unstructured text and infer meaning, schema acts as a set of clear instructions. It is akin to creating a read-only API for a website’s content, allowing an AI to “query” a page and receive structured, unambiguous data. This reduces the interpretive work the AI must do and improves the accuracy of the information it extracts and uses in its responses.

A practical, phased framework for implementing schema markup is essential for maximizing impact without overwhelming resources:

- Benchmark and Audit: Begin by conducting a full crawl of the website to identify pages with missing, invalid, or outdated schema markup. This creates a baseline for measuring improvement.

- Prioritize High-Value Entities: Focus initial efforts on pages and schema types that have the most direct impact on business goals. This typically includes Organization or LocalBusiness schema on the homepage to establish brand entity and trust, Product schema on e-commerce pages to detail price and availability, and FAQPage schema on informational content to target direct answers.

- Choose an Implementation Method: For organizations without dedicated developer resources, many modern CMS platforms offer plugins (like Yoast or Rank Math for WordPress) that simplify schema implementation through user-friendly interfaces. For those with technical support, directly embedding JSON-LD code into the page’s HTML offers the most control and is the format preferred by Google.

- Validate Rigorously: Before deployment, all schema markup must be tested using tools like Google’s Rich Results Test and the Schema Markup Validator. These tools will identify errors that could prevent the schema from being read correctly.

- Monitor and Iterate: After implementation, use tools like Google Search Console’s Enhancements report to monitor the impressions and performance of rich results powered by the new schema. Schema is not a “set-it-and-forget-it” task; it should be reviewed and updated quarterly to align with new content, product launches, and updates to the schema.org vocabulary itself.
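A simple way to start the “Benchmark and Audit” step is to pull the JSON-LD blocks out of a page and list the `@type` values they declare. The sketch uses only Python's standard library. The set of required types and the sample page are hypothetical. It inspects one page at a time, so a crawler would need to feed it many. It only checks that the JSON parses, so it complements Google's Rich Results Test and the Schema Markup Validator rather than replacing them.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> block."""

    def __init__(self) -> None:
        super().__init__()
        self._inside = False
        self._buffer: list[str] = []
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside, self._buffer = True, []

    def handle_data(self, data):
        if self._inside:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._inside:
            self._inside = False
            self.blocks.append("".join(self._buffer))


def audit_schema(html: str, required: set[str]) -> dict:
    """Report declared schema.org types, missing required types, and unparseable blocks."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    found: set[str] = set()
    invalid = 0
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            invalid += 1
            continue
        # A block may hold one object, a list of objects, or an "@graph" array.
        if isinstance(data, dict):
            items = data.get("@graph", [data])
        elif isinstance(data, list):
            items = data
        else:
            items = []
        for item in items:
            types = item.get("@type") if isinstance(item, dict) else None
            if isinstance(types, str):
                found.add(types)
            elif isinstance(types, list):
                found.update(t for t in types if isinstance(t, str))
    return {"found": found, "missing": required - found, "invalid_blocks": invalid}


page = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Organization", "name": "Example Co"}'
    '</script><script type="application/ld+json">{broken</script></head></html>'
)
print(audit_schema(page, {"Organization", "FAQPage"}))
```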

While there are hundreds of schema types, a select few offer disproportionate value for LLM optimization. The following table outlines these high-impact types, their purpose for AI systems, and a recommended implementation priority.

Table: High-Impact Schema Markup for LLM Optimization

| Schema Type | Purpose for LLMs | Implementation Priority |
|---|---|---|
| Organization / LocalBusiness | Establishes the core brand entity, providing unambiguous information about the company’s name, logo, address, and contact details. This is a foundational trust signal. | High |
| FAQPage | Provides clear, discrete question-and-answer pairs that are ideal for direct extraction by LLMs to answer conversational queries. | High |
| Article | Defines a piece of content as an authoritative article, specifying crucial metadata like the author, publication date, and headline, which helps AI assess credibility and recency. | High |
| Product | Structures key commercial data such as price, availability, ratings, and specifications, enabling LLMs to perform accurate product comparisons and recommendations. | High (for e-commerce) |
| HowTo | Outlines a step-by-step process for completing a task. This highly structured format is easily parsed and can be directly surfaced in instructional or procedural queries. | Medium |
| Person | Connects content to a specific, verified expert by marking up author pages with their credentials, affiliations, and areas of expertise, directly supporting the Experience and Expertise components of E-E-A-T. | Medium |
| Course / Event | Structures information about educational offerings or events, including dates, locations, and providers, allowing AI to accurately represent and recommend them. | Low (Niche-dependent) |
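Teams that embed JSON-LD directly can generate the high-priority types from existing content data instead of writing them by hand. The sketch builds an `Article` with a `Person` author, plus a `FAQPage`. It uses standard schema.org properties: `headline`, `datePublished`, `author`, `jobTitle`, `mainEntity`, and `acceptedAnswer`. The author name, URLs, and copy are hypothetical placeholders. The output should still go through the validation tools listed in the implementation framework.

```python
import json


def person(name: str, job_title: str, url: str) -> dict:
    # Author entity: ties the content to a named expert.
    return {"@type": "Person", "name": name, "jobTitle": job_title, "url": url}


def article(headline: str, date_published: str, author: dict) -> dict:
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": date_published,  # ISO 8601 date
        "author": author,
    }


def faq_page(pairs: list[tuple[str, str]]) -> dict:
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {"@type": "Question", "name": q, "acceptedAnswer": {"@type": "Answer", "text": a}}
            for q, a in pairs
        ],
    }


def to_script_tag(data: dict) -> str:
    """Serialize to a JSON-LD <script> tag; escaping '</' stops text from closing the tag early."""
    payload = json.dumps(data, ensure_ascii=False, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical content.
author = person("Jane Doe", "Head of Content", "https://example.com/authors/jane-doe")
print(to_script_tag(article("How LLM Seeding Works", "2024-05-01", author)))
print(to_script_tag(faq_page([
    ("How does LLM Seeding work?", "It distributes structured, authoritative content across channels AI systems crawl."),
])))
```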

4.2. Core Technical Health for LLM Visibility

Beyond schema markup, the overall technical health of a website plays a critical role in its accessibility to AI systems. Foundational technical SEO practices must be maintained and, in some cases, re-contextualized for an AI-first world.

- Site Speed and Performance: AI systems, particularly those performing real-time retrieval via RAG, may deploy high-volume crawlers. A fast and robust server infrastructure is necessary to handle these requests without performance degradation. Core Web Vitals, such as a Largest Contentful Paint (LCP) under 2.5 seconds, remain a critical benchmark for ensuring that content can be accessed and rendered quickly by both human and machine visitors.

- Clean and Semantic HTML: The underlying code of a webpage should be clean and use semantic HTML tags (<article>, <section>, <nav>, etc.) appropriately. This provides a clear, logical structure that AI parsers can navigate more efficiently than a document composed entirely of generic <div> tags. Core text content should be in raw HTML, not rendered via complex JavaScript that may be difficult for some crawlers to execute.

- Crawlability and Indexability: The fundamentals of making a site discoverable remain unchanged. A logical URL structure, a comprehensive and regularly updated XML sitemap submitted to search engines, and a correctly configured robots.txt file are essential for ensuring that AI crawlers can find and access all important content. Any barriers, such as paywalls or login requirements, will render that content invisible to most LLMs.

- Strategic Internal Linking: A well-planned internal linking structure does more than guide users through a site; it creates a semantic map of the content. By linking related articles together using descriptive anchor text, a website can demonstrate its topical authority and the relationships between different concepts. This helps LLMs understand the full breadth and depth of a site’s expertise on a given subject, making it a more authoritative source for the entire topic cluster, not just a single page.
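Whether an AI crawler may reach a page is partly governed by robots.txt. The sketch uses Python's standard `urllib.robotparser` to test a robots.txt file against several AI-related user-agent tokens: `GPTBot`, `Google-Extended`, `PerplexityBot`, and `ClaudeBot`. These are names the vendors have published, but such names change, so check each vendor's current documentation. The robots.txt content and URLs are hypothetical. The standard-library parser implements the basic rules and may interpret edge cases differently from a particular crawler.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens published by AI vendors (verify against current vendor docs).
AI_AGENTS = ("GPTBot", "Google-Extended", "PerplexityBot", "ClaudeBot")


def ai_crawler_access(robots_txt: str, url: str, agents: tuple[str, ...] = AI_AGENTS) -> dict[str, bool]:
    """Return, for each agent, whether robots.txt allows it to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


# Hypothetical robots.txt: blocks GPTBot entirely, keeps /private/ off-limits to everyone else.
ROBOTS = """
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""

print(ai_crawler_access(ROBOTS, "https://example.com/guides/llm-seeding"))
```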

Section 5: The Distribution Pillar: Seeding Content Across the Digital Ecosystem

Optimizing on-site content and technical architecture is a necessary but insufficient condition for success in an AI-driven world. Large Language Models build their understanding and determine authority based on a broad consensus of information gathered from across the entire web. Therefore, a proactive, multi-channel distribution strategy—the core of LLM Seeding—is required to build the necessary signals of credibility and relevance.

5.1. Beyond the Corporate Blog: Identifying High-Value Seeding Channels

Relying solely on a brand’s own website is a critical strategic error in the context of LLM Seeding. LLMs are trained on and retrieve information from a diverse corpus that includes forums, review sites, news outlets, and community platforms. To establish authority, a brand’s message and expertise must be present on these third-party channels. The goal is to create a digital echo chamber where the brand’s association with its core topics is consistently reinforced across multiple, independent domains. This pattern of repetition and consensus is a powerful signal that LLMs are designed to detect.

Different channels are suited for different types of content and strategic goals. The following matrix provides a framework for aligning content formats with the most effective distribution channels for LLM ingestion. Each rating (High, Medium, Low) indicates how well a channel suits a given format.

| Content Format | Corporate Website/Blog | Industry Publications (Guest Posts) | Forums (Reddit/Quora) | Review Sites (G2/Capterra) | Authoritative Platforms (Medium/Substack) | Digital PR (News Wires) |
|---|---|---|---|---|---|---|
| In-depth Guides / Pillar Pages | High | High | Medium | Low | High | Low |
| Original Research / Data Reports | High | High | Medium | Low | Medium | High |
| Expert Q&As / Interviews | High | High | High | Low | High | Medium |
| Product Comparisons / “Best Of” Lists | High | High | High | High | Medium | Low |
| Opinion / Thought Leadership | High | High | Medium | Low | High | Medium |
| Case Studies / First-Person Reviews | High | Medium | High | High | Medium | Low |

Analysis of these channels reveals key strategic priorities:

- Forums & Communities (Reddit, Quora): These platforms are invaluable because they are heavily crawled and contain a massive repository of content in a natural, conversational, question-and-answer format that closely matches how LLMs are queried. Posting structured, helpful responses that subtly mention the brand can influence AI-generated answers.

- Review & Industry Sites (G2, Capterra, Clutch): These sites provide structured, third-party validation. A comprehensive and positive profile on these platforms reinforces the brand as a legitimate entity and a credible solution in its category, strengthening its association with relevant keywords and concepts.

- Authoritative Third-Party Platforms (Medium, Substack, LinkedIn Articles): Publishing content on these platforms leverages their high domain authority, clean formatting, and association with individual authors. LLMs often treat content from these sites as high-quality, increasing the likelihood of it being ingested and cited.

5.2. Digital PR as a Primary Seeding Mechanism

In the context of LLM optimization, the role of Digital Public Relations (PR) evolves from a brand-building and link-acquisition function into a primary mechanism for generating the high-authority, third-party citations that LLMs tend to treat as trustworthy. An AI citation is a powerful digital endorsement, and a proactive Digital PR strategy is the most effective way to earn these endorsements at scale.

The objective is to create a trail of evidence across the web that establishes the brand as a thought leader and a reliable source of information. Effective tactics include:

- Publishing Original, Data-Driven Research: Creating and promoting proprietary industry reports, surveys, or data analyses is a highly effective way to generate citations. When high-authority news outlets and industry blogs reference this original research, it creates a powerful and credible signal for LLMs.

- Securing Expert Quotes and Media Coverage: Positioning company experts for inclusion in news articles, podcasts, and industry trend pieces associates the brand’s name with expertise. The show notes, transcripts, and articles resulting from these appearances create additional citable sources.

- Strategic Press Release Distribution: Publishing well-structured, data-backed press releases on reputable news wires ensures the information is indexed and available for retrieval. These releases act as a durable, factual record of company news and milestones.

Ultimately, the strategy is not to acquire a single high-value backlink but to orchestrate a consistent narrative across a diverse portfolio of credible, third-party channels. This creates a strong, repeated pattern of authority and relevance. Suppose an LLM’s RAG system repeatedly retrieves news sites, industry publications, and user forums that all discuss the same brand as a leader in its field. The model then becomes more likely to treat mentioning that brand as part of a correct and comprehensive answer.

Section 6: Measurement, Risks, and the Road Ahead

As organizations adapt to this new paradigm, they must also adopt new methods for measuring success, develop a keen awareness of the associated risks, and maintain a forward-looking perspective on the future of information discovery. The shift from a click-based to a citation-based economy requires a fundamental change in how performance is evaluated and how strategy is formulated.

6.1. Measuring Success in a Zero-Click World

Traditional SEO metrics such as organic traffic, click-through rate, and keyword rankings are becoming insufficient indicators of visibility in an AI-driven landscape. Success is no longer measured by how many users visit a website, but by how often a brand’s information is surfaced and trusted by AI systems. This necessitates a new measurement framework focused on influence and citation rather than direct traffic.

Key performance indicators for LLM optimization include:

- Manual Prompt Testing: This is the most direct method of measurement. It involves regularly and systematically querying various LLMs (ChatGPT, Gemini, Perplexity, etc.) with a curated set of commercial, informational, and navigational queries relevant to the business. The results—whether the brand is mentioned, the context of the mention, and the sources cited—should be documented in a tracking spreadsheet to monitor changes over time. To avoid personalization bias, these tests should be conducted in private or incognito browser sessions.

- Brand Mention Monitoring: An increase in unlinked brand mentions across the web is a strong leading indicator of growing authority. Tools like Google Alerts, Semrush Brand Monitoring, or Ahrefs Brand Radar can be configured to track these mentions across forums, blogs, and news sites, providing a quantitative measure of the brand’s expanding “semantic footprint”.

- Analysis of Referral and Direct Traffic: Driving clicks is not the primary goal, but some AI platforms do provide citations with links. Monitoring referral traffic from these tools in analytics platforms such as Google Analytics 4 can provide direct evidence of citation. A significant increase in direct traffic or branded search queries (e.g., users searching directly for the brand’s name) can also be a powerful lagging indicator. It suggests that users are seeing the brand mentioned in AI responses and then seeking it out directly.

- No-Click Impressions: In Google Search Console, rising impressions on queries that trigger an AI Overview, paired with flat or falling clicks, are another sign of successful GEO. Search Console reports AI Overview appearances within its standard Performance data rather than as a separate metric, so this signal has to be inferred. It nonetheless suggests that the brand’s content is part of the AI-generated answer and is achieving visibility even without a traditional click.
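The tracking spreadsheet described under Manual Prompt Testing can be kept as a simple CSV. The sketch below shows one possible layout. The column names (`run_date`, `platform`, `query`, `intent`, `brand_mentioned`, `cited_sources`) and the `PromptTest` record are hypothetical. Results are entered by hand after each prompt is run, and the code does not call any LLM. It serializes the results and computes, for each test date and platform, the share of queries in which the brand was mentioned. That share is the number to watch over time.

```python
import csv
import io
from collections import defaultdict
from dataclasses import asdict, dataclass

FIELDS = ["run_date", "platform", "query", "intent", "brand_mentioned", "cited_sources"]


@dataclass
class PromptTest:
    """One manual test: a single query run on one platform on one date."""
    run_date: str          # ISO date of the test session, e.g. "2025-01-15"
    platform: str          # e.g. "ChatGPT", "Gemini", "Perplexity"
    query: str
    intent: str            # "commercial", "informational", or "navigational"
    brand_mentioned: bool
    cited_sources: str     # semicolon-separated domains cited in the answer


def write_log(tests: list[PromptTest]) -> str:
    """Serialize test results to CSV text for a tracking spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for test in tests:
        row = asdict(test)
        row["brand_mentioned"] = "yes" if test.brand_mentioned else "no"
        writer.writerow(row)
    return buffer.getvalue()


def mention_rate(csv_text: str) -> dict[tuple[str, str], float]:
    """Share of tested queries that mentioned the brand, per (run_date, platform)."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    hits: dict[tuple[str, str], int] = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = (row["run_date"], row["platform"])
        totals[key] += 1
        if row["brand_mentioned"] == "yes":
            hits[key] += 1
    return {key: hits[key] / totals[key] for key in totals}
```

Keeping the raw log separate from the computed rates means that earlier test rounds can be re-analyzed later, for example by splitting rates by `intent`.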
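Brand monitoring tools handle unlinked mentions at scale, but the underlying check is simple. Does a page mention the brand in its text without linking to the brand's domain? The minimal sketch below uses Python's standard `html.parser`. As a simplification, a page counts as "linked" if any link on it points to the brand's domain, not necessarily the link at the mention itself. The brand name `Acme` and domain `acme.example` in the test are hypothetical.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urlparse


class MentionScanner(HTMLParser):
    """Collects page text (skipping script/style) and the hostnames of all <a href> links."""

    def __init__(self) -> None:
        super().__init__()
        self.text_parts: list[str] = []
        self.link_hosts: set[str] = set()
        self._skip = False

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip = True
        elif tag == "a":
            host = urlparse(dict(attrs).get("href") or "").hostname
            if host:
                self.link_hosts.add(host.lower())

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._skip = False

    def handle_data(self, data):
        if not self._skip:
            self.text_parts.append(data)


def classify_mention(html: str, brand: str, brand_domain: str) -> str:
    """Return 'none', 'linked', or 'unlinked' for a single page."""
    scanner = MentionScanner()
    scanner.feed(html)
    scanner.close()  # flush any trailing buffered text
    text = " ".join(scanner.text_parts)
    if not re.search(rf"\b{re.escape(brand)}\b", text, re.IGNORECASE):
        return "none"
    domain = brand_domain.lower()
    linked = any(host == domain or host.endswith("." + domain) for host in scanner.link_hosts)
    return "linked" if linked else "unlinked"
```

Applied to pages gathered by a crawl or monitoring tool, the number of "unlinked" results per referring domain gives a rough, trackable view of the brand's semantic footprint.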
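For the referral-traffic signal, referrer URLs exported from an analytics platform can be grouped by AI assistant. The hostname mapping below is an illustrative assumption, not an authoritative registry, and it needs maintenance: ChatGPT, for example, moved from `chat.openai.com` to `chatgpt.com`. Some AI-driven visits can also arrive with no referrer at all, in which case they are recorded as direct traffic. The `ai_source` and `summarize` helpers are hypothetical names.

```python
from collections import Counter
from typing import Optional
from urllib.parse import urlparse

# Illustrative mapping of referrer hostnames to AI assistants (an assumption, not a registry).
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "www.perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def ai_source(referrer: str) -> Optional[str]:
    """Return the AI platform for a referrer URL, or None for non-AI traffic."""
    host = (urlparse(referrer).hostname or "").lower()
    return AI_REFERRER_HOSTS.get(host)


def summarize(referrers: list[str]) -> Counter:
    """Count sessions per AI platform from a list of referrer URLs."""
    sources = (ai_source(ref) for ref in referrers)
    return Counter(source for source in sources if source is not None)
```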

6.2. Challenges and Ethical Considerations

The transition to an AI-first information ecosystem is not without significant challenges and profound ethical implications. Organizations must navigate these risks to protect their brand and act as responsible digital citizens.

Content and Brand Risks:

- AI Hallucinations and Misinformation: LLMs are prone to generating factually incorrect or misleading information, a phenomenon known as “hallucination”. There is a significant risk that an AI could misrepresent a brand’s products, services, or statements, potentially causing reputational damage. Lack of transparency in sourcing for some models makes it difficult to trace and correct these errors.

- Plagiarism and Loss of Attribution: As AI models scrape and synthesize content, there is a risk of unattributed use of proprietary information, effectively a form of plagiarism. This devalues the work of original content creators and can lead to copyright infringement issues.

- Generic Content and Brand Dilution: An over-reliance on AI for content generation can lead to outputs that are generic, lack a distinct brand voice, and fail to provide the unique, human elements of humor, empathy, or creativity that resonate with audiences.

Broader Ethical Imperatives:

- Data Bias: LLMs are trained on vast datasets sourced from the internet, which reflect existing societal biases. Consequently, models can perpetuate and amplify harmful stereotypes related to race, gender, culture, and socioeconomic status. Optimizing content for these systems without being mindful of this reality risks contributing to the spread of biased information.

- Privacy and Data Security: LLMs have been shown to “memorize” and regurgitate sensitive personal information contained within their training data, posing significant privacy risks. The use of user prompts to further train models also raises concerns about the confidentiality of data submitted to these platforms.

- Environmental Impact: The training and operation of large-scale language models require immense computational power, leading to substantial energy consumption and a significant carbon footprint. The push for ever-larger and more powerful models carries a real environmental cost that must be considered.

6.3. The Future of Content Discovery and Its Economic Impact

The rise of generative AI marks a permanent inflection point for the digital information economy. The future of search will likely be a hybrid model, where traditional keyword-based results coexist with integrated, AI-synthesized answers. Google’s dominance is being challenged for the first time in decades, yet the company is also aggressively integrating its own AI model, Gemini, into its products to adapt to this new reality.

This transformation will have profound economic consequences, particularly for publishers and the advertising industry that underpins the free and open web.

- Impact on Advertising: The traditional online advertising model is predicated on driving traffic to websites where ads can be displayed. As AI answer engines provide direct responses, they reduce the need for users to click through to external sites, threatening to sever the connection between search and ad revenue. Brands may need to find new ways to influence AI recommendations, shifting budget from search ads to content and PR strategies designed for LLM seeding.

- Impact on Publishers: For content publishers, the threat is existential. If AI systems can scrape, summarize, and present their content without sending traffic back to the original source, their primary monetization model—on-site advertising and subscriptions—collapses. This may force a strategic pivot towards licensing content directly to AI companies, as some major publishers have already begun to do, or developing content so unique and valuable that users are compelled to seek it out directly.

This dynamic creates a bifurcated future for content. On one hand, there will be a “Great Flattening,” where generic, informational, top-of-funnel content is commoditized. AI will absorb and summarize this content, capturing its value and making the original sources largely invisible. On the other hand, the same dynamic creates a “Flight to Quality.” AI systems cannot create net-new knowledge, firsthand experiences, or original data, so they are heavily dependent on high-quality, authoritative, and unique sources to fuel their answers.

This forces a strategic choice for every organization. There will be little room for content that is merely “good enough.” The future of content is binary: it will either be so generic that it is absorbed and anonymized by AI, or it will be so unique, authoritative, and well-structured that it becomes an indispensable, citable source for the entire AI ecosystem. The imperative is to invest in becoming a primary source of unimpeachable authority and unique insight.

Strategic Recommendations and Implementation Roadmap

The transition to an AI-first information landscape requires a deliberate and integrated strategic response. The concepts of LLM Seeding, LLMO, and GEO are not isolated tactics but interconnected components of a new, holistic approach to digital visibility. This concluding section synthesizes the preceding analysis into a unified framework and a practical, phased implementation plan designed for strategic leaders.

A Unified Optimization Framework

A successful strategy for the AI era does not abandon traditional SEO but rather builds upon it, layering new disciplines to create a comprehensive visibility engine. This unified framework recognizes the distinct but complementary roles of each component:

- Traditional SEO as the Foundation: Core technical SEO remains the bedrock of digital presence. A fast, secure, crawlable, and mobile-friendly website is the price of entry. It ensures that all machine crawlers—whether from Google or OpenAI—can efficiently access and index content. Keyword research continues to provide insights into user intent, which is crucial for both human- and AI-facing content.

- LLM Seeding as the Authority Builder: This is the expansive, off-site layer of the strategy. Its purpose is to build a broad consensus of authority and relevance across the web. Through Digital PR, community engagement, and distribution on authoritative third-party platforms, this layer creates the “digital echo chamber” that signals to LLMs that a brand is a trusted entity on its core topics.

- LLMO as the Universal Translator: This is the content and structural layer that ensures all information, wherever it is published, is optimized for machine comprehension. LLMO principles—clarity, structure, modularity, and semantic markup—act as a universal translator, making content legible and valuable to any AI system that encounters it. It is the discipline that ensures the efforts of SEO and LLM Seeding are not wasted due to poor readability.

- GEO as the Performance Outcome: This is the specific application and measurement layer within search engines. Success in GEO—appearing prominently in Google’s AI Overviews and other generative search features—is the direct result of a well-executed foundational SEO program, a robust LLM Seeding strategy, and meticulous adherence to LLMO principles. It is not a separate activity but the desired outcome of the integrated whole.

Phased Implementation Plan

Adopting this unified framework requires a structured, phased approach. The following roadmap provides a timeline for businesses to systematically build their capabilities and adapt their operations over a 12-month period.

Phase 1: Audit and Foundation (Months 1-3)

This initial phase focuses on establishing a baseline and securing the technical prerequisites for success.

Objectives: Understand the current visibility landscape and ensure the technical foundation is sound.

Key Actions:

- Conduct a Comprehensive Technical SEO Audit: Evaluate site speed, mobile-friendliness, crawlability, and indexability. Remediate all critical issues.

- Perform a Schema Markup Audit: Identify all pages lacking necessary structured data. Prioritize and implement foundational schema types (Organization, Article, Product) on high-value pages.

- Establish a Baseline for LLM Visibility: Conduct and document the first round of manual prompt testing across major LLMs (ChatGPT, Gemini, Perplexity) for a core set of 20-30 strategic queries.

- Set Up Brand Mention Monitoring: Implement tools to begin tracking unlinked brand mentions across the web.
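As a starting point for the schema work in Phase 1, the sketch below generates a schema.org `Organization` block in JSON-LD. The property set (`name`, `url`, `logo`, `sameAs`) is a minimal assumption, and the function name `organization_jsonld` and the company details in the test are placeholders. `sameAs` is a natural place to list the brand's official third-party profiles, which ties the on-site entity to the off-site presence built through LLM Seeding. Validate the output with a structured-data testing tool before deploying it.

```python
import json


def organization_jsonld(name: str, url: str, logo: str, same_as: list[str]) -> str:
    """Build a schema.org Organization description wrapped in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
        "sameAs": same_as,  # official profiles on third-party platforms
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"
```

The same pattern extends to `Article` and `Product` by changing `@type` and the properties supplied.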

Phase 2: Content Re-architecting and Seeding (Months 4-9)

This phase shifts focus to content optimization and the initiation of off-site distribution efforts.

Objectives: Begin aligning content with LLMO principles and start building off-site authority signals.

Key Actions:

- Re-architect High-Value Content: Identify the top 20% of existing content assets that drive business value. Systematically revise them to be LLMO-compliant, focusing on semantic chunking, structural clarity (headings, lists), and the “answer-first” writing style.

- Develop an LLM-Centric Content Calendar: Shift new content production towards high-impact formats identified in this report, such as comprehensive FAQs, detailed comparison guides, and original data reports.

- Launch a Multi-Channel Seeding Program: Initiate a pilot program for content distribution. Identify 2-3 key channels (e.g., a specific subreddit, an industry publication for guest posts, Quora) and begin consistently contributing valuable, structured content.

- Integrate Digital PR: Launch the first data-driven Digital PR campaign with the explicit goal of earning citations and mentions in authoritative, third-party media.
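Semantic chunking in Phase 2 can be prototyped by splitting a page at its headings, so that each chunk carries its own heading context and can be quoted on its own. This sketch assumes the content is available as Markdown with `#`-style headings. The `Chunk` type and `chunk_by_headings` function are hypothetical names, and the 300-word cap (`max_words`) is an arbitrary default, not a known LLM threshold.

```python
import re
from dataclasses import dataclass

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


@dataclass
class Chunk:
    heading_path: list[str]  # e.g. ["Pricing", "Enterprise"]
    text: str


def chunk_by_headings(markdown: str, max_words: int = 300) -> list[Chunk]:
    """Split a Markdown document into heading-scoped chunks of at most ~max_words words."""
    chunks: list[Chunk] = []
    path: list[str] = []
    buffer: list[str] = []

    def flush() -> None:
        body = " ".join(" ".join(buffer).split())  # collapse whitespace
        if body:
            chunks.append(Chunk(list(path), body))
        buffer.clear()

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            level = len(match.group(1))
            del path[level - 1:]  # drop headings at this depth or deeper
            path.append(match.group(2).strip())
        else:
            buffer.append(line)
            if len(" ".join(buffer).split()) >= max_words:
                flush()  # keep chunks short enough to stand alone
    flush()
    return chunks
```

Carrying the full heading path with each chunk means a passage such as "Custom contracts." still signals that it belongs under "Pricing > Enterprise" when it is retrieved in isolation.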

Phase 3: Scale and Optimization (Months 10+)

The final phase focuses on expanding successful initiatives, building deep topical authority, and establishing a continuous improvement loop.

Objectives: Achieve domain-level authority, scale successful seeding channels, and integrate LLM optimization into standard business operations.

Key Actions:

- Expand Topic Clusters: Based on the performance of initial content, build out comprehensive topic clusters with detailed “spoke” pages to establish deep expertise in core subject areas.

- Scale Seeding Efforts: Double down on the distribution channels that have proven most effective in generating citations and brand mentions, while continuing to experiment with new platforms.

- Establish a Continuous Monitoring Loop: Formalize the process of monthly prompt testing and brand mention analysis. Use the insights gathered to inform and refine the content and distribution strategy on an ongoing basis.

- Educate and Train: Provide training to all content, marketing, and SEO teams on the principles of the Unified Optimization Framework to ensure that LLM-readiness becomes an integral part of all future digital marketing activities.
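As topic clusters expand, a periodic audit can confirm two things: that the pillar page and its spoke pages link to each other, and that internal links use descriptive anchor text rather than generic phrases. The sketch assumes a crawl has already produced a mapping from each page to its outgoing `(target_url, anchor_text)` pairs. The `audit_cluster` function, the `GENERIC_ANCHORS` list, and the page paths in the test are hypothetical.

```python
# Hypothetical list of anchor phrases that carry no topical meaning.
GENERIC_ANCHORS = {"click here", "read more", "learn more", "here", "this page"}


def audit_cluster(
    pillar: str,
    spokes: list[str],
    links: dict[str, list[tuple[str, str]]],
) -> list[str]:
    """Report missing pillar<->spoke links and generic anchor text within one topic cluster.

    `links` maps each page URL to the (target_url, anchor_text) pairs found on that page.
    """
    issues: list[str] = []
    pillar_targets = {target for target, _ in links.get(pillar, [])}
    for spoke in spokes:
        if spoke not in pillar_targets:
            issues.append(f"pillar does not link to {spoke}")
        spoke_targets = {target for target, _ in links.get(spoke, [])}
        if pillar not in spoke_targets:
            issues.append(f"{spoke} does not link back to pillar")
    for page in [pillar, *spokes]:
        for target, anchor in links.get(page, []):
            if anchor.strip().lower() in GENERIC_ANCHORS:
                issues.append(f"{page} -> {target} uses generic anchor text '{anchor}'")
    return issues
```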