Google AI Studio: Comprehensive Guide to Gemini & AI Prototyping

Executive Summary

Google AI Studio is a free, web-based integrated development environment (IDE) that serves as the most direct on-ramp to Google’s Gemini family of generative AI models. It is positioned as a tool for rapid prototyping, experimentation, and developer onboarding, bridging the gap between casual exploration and enterprise-grade deployment. The platform’s core proposition is to broaden access to advanced AI, allowing users of all skill levels, from marketers and writers to seasoned developers, to work with state-of-the-art models with minimal financial or technical barriers.

The platform’s key capabilities are extensive, excelling in its native multimodal functionality that allows for the seamless processing of text, images, audio, and video within a single interface. It features a streamlined workflow that takes users from an initial prompt to production-ready code with remarkable efficiency. Users have access to advanced features such as function calling, which connects models to external APIs; model tuning for custom applications; and emerging agentic AI capabilities that can automate complex digital tasks. While accessible to a broad audience, its primary value is realized by developers and technical users who leverage it as a sophisticated testing ground before integrating the Gemini API into their applications or scaling up to the more robust, enterprise-focused Vertex AI platform.

Google AI Studio is a critical component of Google’s broader strategy to dominate the AI development landscape. It functions as a powerful, zero-cost entry point into the Google Cloud ecosystem, designed to attract a wide user base and funnel promising projects toward the monetized, enterprise-level services offered by Vertex AI. This approach allows Google to capture developers at the earliest stages of their AI journey, fostering a community and building a pipeline of future cloud customers.

This report concludes that Google AI Studio is a formidable and highly versatile tool, distinguished by its deep integration with Google services, advanced multimodal features, and a clear, secure path to enterprise scalability. It provides developers with “raw” access to the Gemini models, offering a more powerful and unrestricted experience compared to consumer-facing products. Its primary challenge is not a limitation of the platform itself, but the inherent complexity of the rapidly evolving generative AI field—a complexity this comprehensive guide aims to demystify.

Deconstructing Google AI Studio: Architecture and Market Position

To fully grasp the capabilities and strategic importance of Google AI Studio, it is essential to define its role, understand its relationship with the underlying Gemini models, and situate it within Google’s broader AI ecosystem. The platform is not an isolated product but a carefully designed entry point that serves distinct purposes for different user segments, from individual creators to large enterprises.

Defining AI Studio: More Than a Playground

Google AI Studio is a web-based IDE engineered for prototyping and building with Google’s generative models. It transcends the concept of a simple chatbot interface, offering a comprehensive and customizable test environment where developers can experiment with the newest and most powerful AI models from Google, with as many as 13 unique models available at a given time. The platform is fundamentally designed to be the fastest path from an initial idea to a functional, AI-powered application. It achieves this by streamlining the entire prototyping workflow, from prompt design and parameter tuning to the generation of production-ready code snippets.

A defining characteristic of AI Studio is its accessibility. The platform is completely free to use, requiring only a standard Google account. This deliberate removal of financial barriers to entry makes cutting-edge AI technology available to a global audience, including students, hobbyists, and professionals, without the need for a credit card or a trial period. This zero-cost model is a cornerstone of its design, encouraging widespread experimentation and innovation.

The Gemini Connection: Raw vs. Refined Model Access

The core of AI Studio is its direct access to the Gemini family of models, Google’s state-of-the-art, multimodal generative AI. A critical distinction exists between how these models are presented in AI Studio versus in Google’s consumer-facing products like the Gemini App (formerly Bard). AI Studio provides what can be described as “raw” access to the models, meaning users interact with them with minimal pre-configured system instructions or safety guardrails. This unfiltered environment is ideal for developers, as it allows for precise control over the model’s behavior and provides a more accurate representation of the model’s core capabilities.

In stark contrast, the Gemini App is a “refined” product. It layers a heavy, pre-defined system prompt onto the core model, instructing it to behave as a “helpful, friendly, engaging personal assistant” and to avoid a long list of sensitive topics. This curation creates a safer and more predictable experience for the general public but can also make the model’s responses feel more muted or “dumbed down” compared to its performance in AI Studio.

This duality is not an accident but a reflection of a deliberate market segmentation strategy. The performance gap that users consistently report between the two platforms stems directly from the “wrapper” of instructions and guardrails applied to the consumer product. For developers, this means that the model’s behavior in AI Studio is not directly representative of its behavior within the consumer Gemini app. To replicate that curated experience, a developer would need to engineer a similarly detailed system prompt and implement comparable safety controls in their own application. This distinction is crucial for managing expectations when moving from a prototype in AI Studio to a public-facing application.

| Feature | Google AI Studio | Gemini App |
|---|---|---|
| Target Audience | Developers, Researchers, Marketers, Technical Users | General Public |
| Primary Purpose | Prototyping, API Testing, App Development | Personal Assistant, Information Retrieval, Content Creation |
| Model Access | Raw, Unfiltered access to core models | Refined, Curated experience with layered instructions |
| System Prompt | Minimal or entirely user-defined | Heavy, pre-defined prompt for a “helpful assistant” persona |
| Customization | High (model parameters, safety settings, tools) | Low (limited settings available to the user) |
| Cost | Free to use, with API rate limits for developers | Free, with optional paid tiers for advanced models |

The Google AI Ecosystem: The Path from AI Studio to Vertex AI

Google AI Studio is explicitly designed as the initial stage of a comprehensive development journey. While it is a powerful tool in its own right, the intended destination for mature, scalable, and enterprise-ready applications is Vertex AI, Google Cloud’s end-to-end Machine Learning Operations (MLOps) platform. The platform serves as a “prosumer” bridge, targeting a vast audience of power users, individual developers, and small businesses with a free, high-capability tool to foster adoption and innovation.

The migration path from AI Studio to Vertex AI is well-documented and seamlessly integrated. A developer can begin by prototyping prompts and building a proof-of-concept in AI Studio. As the application’s requirements for security, scalability, and governance mature, the entire project can be migrated to Vertex AI Studio. This transition unlocks a suite of enterprise-grade features, including full MLOps capabilities for managing the model lifecycle, robust data governance, advanced security controls, comprehensive model evaluation tools, and deep integration with the entire suite of Google Cloud services.

This strategic pathway reveals AI Studio’s role as a sophisticated customer acquisition funnel for Google Cloud. By lowering the barrier to entry to its most powerful AI models, Google builds a large and engaged developer community. It allows innovators to experiment and validate their ideas at no cost, and once a project demonstrates commercial potential, Google provides a clear, compelling, and technically straightforward path to monetize that application on its enterprise cloud platform. This strategy effectively captures users at the very beginning of their AI development lifecycle and nurtures them into long-term, high-value cloud customers.

Getting Started: A Guided Tour of the Platform

Access and Onboarding

Accessing Google AI Studio is exceptionally straightforward. Users can navigate to aistudio.google.com and sign in with any standard Google account.

There is no requirement to provide a credit card, sign up for a trial, or complete a complex onboarding process. The platform is entirely web-based, eliminating the need for any software installation or environment configuration, and is optimized to run in all major modern browsers. This frictionless onboarding process is a key element of its accessibility.

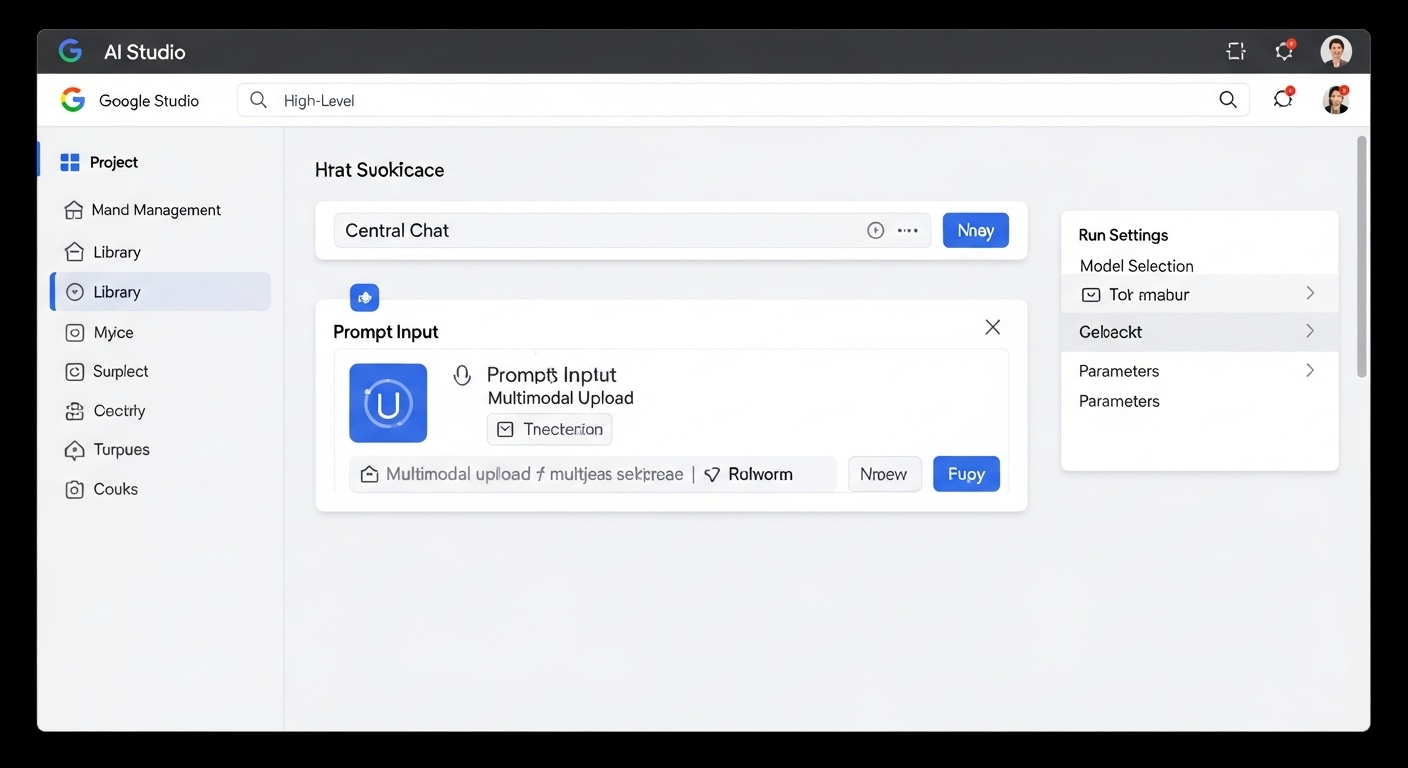

Navigating the Interface: A Component-by-Component Walkthrough

The AI Studio interface is logically divided into several key areas, each serving a specific function in the workflow of creating and testing AI prompts.

- Main Workspace: The central focus of the UI is the main chat window, which serves as the primary area for interacting with the selected Gemini model. This familiar conversational interface is where users input their prompts and view the model’s generated responses.

- Left Sidebar (Workflow Organization): This sidebar is the hub for managing projects. It contains the “Create new” button to start new prompts and provides access to the “Library,” which houses the user’s saved chat history. This allows for easy organization and retrieval of past work.

- Top Bar (High-Level Navigation): Positioned at the top of the screen, this bar provides access to essential administrative and learning resources. It includes a link to the “Dashboard,” where users can manage their API keys, associated Google Cloud projects, and view billing information. It also contains direct links to official documentation and case studies.

- Center Pane (The Prompting Interface): This is the core interactive zone. It is dominated by the chat input field where users type their prompts. Above this field are buttons for multimodal uploads, allowing users to add images, audio files, or connect directly to their Google Drive to use files as part of the prompt context. The “Run” button (or Ctrl+Enter) sends the prompt to the model for processing.

- Right Sidebar (Run Settings): This is arguably the most critical panel for developers and advanced users. It contains all the controls for fine-tuning the model’s behavior. This includes the model selection dropdown, sliders for adjusting parameters like Temperature, and toggles for enabling powerful tools such as Grounding with Google Search, Function Calling, and Code Execution.

Managing Your Workspace: Prompts, History, and the Starter App Gallery

Effective workspace management is crucial for a productive experience in AI Studio. The platform provides several tools to help users organize their work and find inspiration.

- Creating and Naming Prompts: New interactions are initiated using the “Create new” button. AI Studio automatically assigns a name to each new chat based on its content, but users are encouraged to provide their own descriptive names and descriptions for better organization, much like managing files in a traditional file system.

- Chat History/Library: All conversations are automatically saved in the user’s Library. This feature allows for easy revisiting of past experiments, enabling users to continue a line of inquiry or compare the results of different prompting strategies over time.

- Prompt Gallery: For users who are new to generative AI or seeking inspiration, Google provides a comprehensive Prompt Gallery. This gallery contains dozens of pre-built prompts that showcase the diverse capabilities of the Gemini models, covering a wide range of use cases from creative writing and image generation to technical problem-solving and data extraction.

- Starter Apps: A significant evolution beyond simple prompts is the introduction of “Starter Apps.” These are fully functional, open-source sample applications that come with a built-in code editor directly within the AI Studio interface. They serve as powerful springboards for developers, providing a solid foundation for advanced applications like video analysis, spatial understanding with 3D bounding boxes, and intelligent map exploration. Developers can use, learn from, fork, and modify these apps, dramatically accelerating the development of sophisticated, AI-powered projects.

Mastering the Core Engine: Prompting and Parameter Control

The effectiveness of any generative AI model is fundamentally tied to the quality of the prompts it receives and the fine-tuning of its generation parameters. Google AI Studio provides a powerful and increasingly streamlined environment for mastering this process, known as prompt engineering.

The Unified Prompt Experience: From Freeform to Chat

The user experience within AI Studio has evolved and consolidated rapidly. Early iterations of the platform offered users three distinct prompt types: “Freeform” for single-turn requests, “Structured” for providing few-shot examples, and “Chat” for multi-turn conversations. However, based on user feedback and a drive for simplification, Google has since moved to a “new unified prompt UX.”

This update consolidates all interactions into the multi-turn “Chat” prompt interface. This is a significant product decision that prioritizes the more flexible and powerful conversational paradigm. While the underlying concepts of providing structured examples (few-shot prompting) or making a single request (zero-shot) remain critical to prompt design, the interface for inputting them is now unified within the chat window. A user wishing to make a single, “freeform” request now simply starts a new chat and sends a single message. This change simplifies the user journey but requires an understanding of the platform’s history to correctly interpret older tutorials and documentation.
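To make the point about few-shot and zero-shot prompting concrete, the sketch below shows one way structured examples could be expressed as chat turns. It assumes the Gemini API's convention of alternating "user" and "model" roles; the helper name few_shot_contents and the sample reviews are hypothetical and exist only for illustration.

```python
from typing import Dict, List, Tuple


def few_shot_contents(examples: List[Tuple[str, str]], query: str) -> List[Dict]:
    """Encode few-shot examples as alternating user/model turns, then append the real query."""
    contents: List[Dict] = []
    for user_text, model_text in examples:
        contents.append({"role": "user", "parts": [{"text": user_text}]})
        contents.append({"role": "model", "parts": [{"text": model_text}]})
    # The final user turn is the request the model should actually answer.
    contents.append({"role": "user", "parts": [{"text": query}]})
    return contents


# Few-shot: two labelled examples followed by the new input.
examples = [
    ("Great product, works perfectly.", "positive"),
    ("Broke after two days.", "negative"),
]
contents = few_shot_contents(examples, "Arrived late but does the job.")

# Zero-shot ("freeform"): a new chat containing a single message.
zero_shot = few_shot_contents([], "Summarize the plot of Hamlet in one sentence.")
```

In this framing, the old "Structured" prompt is simply a chat whose early turns are examples, and the old "Freeform" prompt is a chat with one turn.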

System Instructions: Setting the Stage for Model Behavior

A cornerstone of controlling model output in the unified chat interface is the “System Instructions” field. This dedicated input area allows the user to provide a high-level directive that governs the model’s behavior for the entire duration of a conversation. These instructions can be used to define a specific role (e.g., “You are a senior software architect”), a persona (“You are a witty pirate”), a desired tone, or a set of constraints and rules for the model to follow.

The impact of well-crafted system instructions is profound. For example, a simple instruction like, “You are an alien that lives on Europa, one of Jupiter’s moons,” will produce a basic chatbot. However, refining this instruction to, “You are Tim, an alien that lives on Europa, one of Jupiter’s moons. Keep your answers under 3 paragraphs long, and use an upbeat, chipper tone in your answers,” results in a dramatically more consistent, higher-quality, and more engaging conversational experience. System instructions are modifiable even after a chat session has begun, allowing for iterative refinement.
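Outside the AI Studio interface, the same system instruction travels with the request. The following is a minimal sketch of a REST-style request body, assuming the Gemini API's system_instruction and contents fields; build_request and the sample question are hypothetical names chosen for this example.

```python
import json


def build_request(system_instruction: str, user_message: str) -> dict:
    """Build a request body that pairs a system instruction with one user turn."""
    return {
        "system_instruction": {"parts": [{"text": system_instruction}]},
        "contents": [{"role": "user", "parts": [{"text": user_message}]}],
    }


# The refined instruction from the text above.
TIM_INSTRUCTION = (
    "You are Tim, an alien that lives on Europa, one of Jupiter's moons. "
    "Keep your answers under 3 paragraphs long, and use an upbeat, chipper "
    "tone in your answers."
)

request_body = build_request(TIM_INSTRUCTION, "What is the weather like where you live?")
print(json.dumps(request_body, indent=2))
```

Because the instruction is just a field in the request, refining it between runs is a matter of changing one string and resending.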

Fine-Tuning Model Output: A Deep Dive into Temperature, Top-P, and Top-K

The “Run settings” panel on the right side of the interface provides users with granular control over the model’s token selection process. These parameters directly influence the creativity, randomness, and predictability of the generated output.

- Temperature: This parameter controls the degree of randomness in the model’s output. A low temperature (e.g., 0.1 to 0.3) makes the model more deterministic; it strongly favors the most probable next token, making it well suited to factual recall, summarization, or code generation where accuracy is paramount. A high temperature (e.g., 0.8 to 1.0+) increases randomness, encouraging the model to explore less likely word choices. This is best suited for creative tasks like brainstorming, writing poetry, or generating diverse ideas.

- Top-K: This parameter constrains the model’s choices to the K most likely next tokens. For example, a topK of 3 means the next token will be selected only from the three most probable options. A topK of 1 represents a “greedy decoding” strategy, where the model always picks the single most likely token.

- Top-P (Nucleus Sampling): This parameter offers a more dynamic approach to token selection. It defines a cumulative probability threshold P and instructs the model to consider the smallest possible set of tokens whose combined probability meets or exceeds this threshold. For instance, if topP is set to 0.9, the model will only consider the most likely tokens that together account for 90% of the probability mass, discarding the rest.

Understanding the interplay between these parameters is crucial. Setting the Temperature to 0 renders Top-K and Top-P largely irrelevant, because the model then always picks the most probable token and the output becomes effectively deterministic. Conversely, a very high temperature can make the filtering from Top-K or Top-P less meaningful. Effective prompt engineering involves experimenting with these settings to find the “Goldilocks zone” that produces the desired output for a specific task.
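The interaction of the three parameters is easier to see in a toy sampler. The sketch below is an illustration over a made-up four-token vocabulary, not Gemini's actual decoding code; the ordering used here (temperature scaling, then Top-K, then Top-P) is one common arrangement and is an assumption.

```python
import math
import random
from typing import Dict, Optional


def sample_next_token(
    logits: Dict[str, float],
    temperature: float = 1.0,
    top_k: Optional[int] = None,
    top_p: Optional[float] = None,
    rng: Optional[random.Random] = None,
) -> str:
    """Pick one token from `logits` using temperature, Top-K, and Top-P filtering."""
    if rng is None:
        rng = random.Random()

    # Temperature 0 is treated as greedy decoding: always the most likely token.
    if temperature == 0:
        return max(logits, key=logits.get)

    # Softmax with temperature scaling (subtract the max for numerical stability).
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(
        ((tok, e / total) for tok, e in exps.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )

    # Top-K: keep only the K most probable tokens.
    if top_k is not None:
        ranked = ranked[:top_k]

    # Top-P: keep the smallest prefix whose cumulative probability reaches P.
    if top_p is not None:
        kept, cumulative = [], 0.0
        for tok, prob in ranked:
            kept.append((tok, prob))
            cumulative += prob
            if cumulative >= top_p:
                break
        ranked = kept

    tokens = [tok for tok, _ in ranked]
    weights = [prob for _, prob in ranked]
    # random.choices normalizes the remaining weights before sampling.
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical logits for the next word after "The sky is".
toy_logits = {"blue": 2.0, "grey": 1.0, "green": 0.1, "purple": -1.0}
greedy = sample_next_token(toy_logits, temperature=0)
```

With these toy logits, "blue" alone carries about 64% of the probability mass at temperature 1, so a Top-P of 0.6 leaves it as the only candidate, while a Top-P of 0.8 also admits "grey".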

| Parameter | Description | Range | Use Case (Low Value) | Use Case (High Value) | Critical Interactions |
|---|---|---|---|---|---|
| Temperature | Controls the randomness of the output. Higher values lead to more creative and diverse responses. | 0.0 – 2.0 | Factual answers, code generation, summarization (e.g., 0.2) | Brainstorming, creative writing, generating novel ideas (e.g., 0.9) | A temperature of 0.0 makes the output effectively deterministic, overriding Top-K and Top-P. |
| Top-P | Nucleus sampling. Constrains the selection to a cumulative probability mass of P. | 0.0 – 1.0 | Less random, more focused responses. | More random, diverse responses. | A more dynamic alternative to Top-K. |
| Top-K | Constrains the selection to the K most probable tokens. | 1 – 40 (typical) | Less random responses. A value of 1 is “greedy decoding.” | More random responses. | Can be less effective than Top-P for distributions with a long tail. |
| Max Output Tokens | Sets the maximum number of tokens the model may generate in a response. | Varies by model | Short, concise answers. | Long-form content like articles or stories. | Prevents overly long or runaway responses. |
| Stop Sequences | A set of character sequences that, when generated, will cause the model to stop. | Any string | Controlling structured output, ending lists, or preventing boilerplate text. | N/A | Useful for ensuring responses end at a specific point. |
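As a rough illustration of how these settings might be carried into an API request, the sketch below builds a generationConfig object using the REST field names temperature, topP, topK, maxOutputTokens, and stopSequences. The helper names, the default values, and the range checks (taken from the table above) are assumptions for this example; apply_stop_sequences is a local simulation that assumes the stop sequence itself is excluded from the returned text.

```python
from typing import List, Optional


def build_generation_config(
    temperature: float = 1.0,
    top_p: float = 0.95,
    top_k: int = 40,
    max_output_tokens: Optional[int] = None,
    stop_sequences: Optional[List[str]] = None,
) -> dict:
    """Validate sampling settings against the documented ranges and return a config dict."""
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0.0 and 2.0")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0.0 and 1.0")
    if top_k < 1:
        raise ValueError("top_k must be at least 1")

    config = {"temperature": temperature, "topP": top_p, "topK": top_k}
    if max_output_tokens is not None:
        config["maxOutputTokens"] = max_output_tokens
    if stop_sequences:
        config["stopSequences"] = list(stop_sequences)
    return config


def apply_stop_sequences(text: str, stop_sequences: List[str]) -> str:
    """Simulate stop-sequence behavior: cut the text at the earliest stop sequence."""
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


factual_config = build_generation_config(
    temperature=0.2, max_output_tokens=256, stop_sequences=["END"]
)
truncated = apply_stop_sequences("1. apples\n2. pears\nEND\nunwanted trailer", ["END"])
```

Validating ranges locally is optional, but it surfaces a mistyped value before a request is ever sent.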

Implementing Guardrails: Configuring Safety Settings

As part of Google’s commitment to responsible AI, AI Studio includes built-in, configurable safety settings to prevent the generation of harmful content. These filters can be adjusted within the “Run settings” panel for several distinct categories:

- Harassment

- Hate Speech

- Sexually Explicit

- Dangerous Content

For each category, users can select a blocking threshold such as “Block few,” “Block some,” or “Block most.” These settings correspond to the API’s HarmBlockThreshold values BLOCK_ONLY_HIGH, BLOCK_MEDIUM_AND_ABOVE, and BLOCK_LOW_AND_ABOVE, respectively. The default setting is typically “Block some,” which is designed to be a balanced starting point for most use cases, although defaults can differ between models. It is important to note that these thresholds only make the adjustable filters more or less restrictive; built-in protections against core harms, such as content that endangers child safety, always apply and cannot be turned off. This ensures a baseline level of safety for all experimentation conducted on the platform.
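The mapping between the interface labels and the API values can be written down directly. The sketch below assumes the API's HARM_CATEGORY_* category names and the safetySettings list shape of category/threshold pairs; build_safety_settings and its default are hypothetical conveniences for this example.

```python
from typing import Dict, List

# Interface label -> API threshold value, as described in the text above.
UI_TO_API_THRESHOLD = {
    "Block few": "BLOCK_ONLY_HIGH",
    "Block some": "BLOCK_MEDIUM_AND_ABOVE",
    "Block most": "BLOCK_LOW_AND_ABOVE",
}

# Interface category -> API category name (assumed naming).
HARM_CATEGORIES = {
    "Harassment": "HARM_CATEGORY_HARASSMENT",
    "Hate Speech": "HARM_CATEGORY_HATE_SPEECH",
    "Sexually Explicit": "HARM_CATEGORY_SEXUALLY_EXPLICIT",
    "Dangerous Content": "HARM_CATEGORY_DANGEROUS_CONTENT",
}


def build_safety_settings(choices: Dict[str, str], default: str = "Block some") -> List[dict]:
    """Turn per-category interface choices into a list of category/threshold pairs."""
    settings = []
    for label, api_category in HARM_CATEGORIES.items():
        ui_choice = choices.get(label, default)
        settings.append(
            {"category": api_category, "threshold": UI_TO_API_THRESHOLD[ui_choice]}
        )
    return settings


# Loosen one category, tighten another, and leave the rest at the default.
safety_settings = build_safety_settings(
    {"Dangerous Content": "Block most", "Harassment": "Block few"}
)
```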

From Prototype to Production: API Integration and Development

Google AI Studio excels as a prototyping environment, but its ultimate purpose is to serve as a launchpad for building real-world applications. The platform provides a seamless and efficient pathway for developers to transition their perfected prompts and configurations from the web-based playground into functional code using the Gemini API.

Generating and Managing API Keys: A Secure Workflow

To programmatically interact with the Gemini models, a developer must first obtain an API key. This process is managed within Google AI Studio’s “Dashboard.” An API key is a secret string that identifies the calling project and authenticates requests to the Gemini API; it is not encrypted, so anyone who obtains it can use it.

The workflow for generating a key is straightforward but requires association with a Google Cloud project:

- Open the Dashboard in AI Studio.

- Select the API Keys section.

- If no Google Cloud project is associated with the account, the user must first Import a project. This links the AI Studio workspace to a specific project in the Google Cloud Console.

- Once a project is selected, the user can click Create API key to generate a new key within that project’s context.

This project-based system allows developers to create and manage multiple keys for different applications, enabling better organization and security.

The “Get Code” Feature: Accelerating Development

One of the most powerful developer-centric features in AI Studio is the “Get code” button. After a user has crafted a prompt, uploaded multimodal content, and fine-tuned the model parameters to achieve the desired output, this feature eliminates the manual work of translating that configuration into an API call.

With a single click, AI Studio generates ready-to-use code snippets that precisely replicate the entire request. This includes the model name, the prompt content, all adjusted parameters (like temperature and safety settings), and a placeholder for the API key. The platform provides these snippets for a wide array of popular programming languages and environments, including:

- Python

- Node.js (JavaScript)

- Java

- Go

- Swift

- cURL/REST

This feature dramatically reduces the friction between prototyping and development, allowing developers to quickly integrate their AI Studio experiments into their applications.
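To give a sense of what such a snippet encodes, here is a hand-written sketch of the pieces of an equivalent REST call. It is not the exact output of “Get code”; it assumes the public generateContent endpoint and the x-goog-api-key header, and build_rest_request is a hypothetical helper that only assembles the request without sending it.

```python
import json
from typing import Dict, Tuple

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"


def build_rest_request(
    model: str, prompt: str, api_key: str, temperature: float
) -> Tuple[str, Dict[str, str], bytes]:
    """Assemble the URL, headers, and JSON body for a generateContent call."""
    url = f"{API_ROOT}/models/{model}:generateContent"
    headers = {
        "Content-Type": "application/json",
        "x-goog-api-key": api_key,  # placeholder; never hardcode a real key
    }
    body = {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {"temperature": temperature},
    }
    return url, headers, json.dumps(body).encode("utf-8")


url, headers, body = build_rest_request(
    model="gemini-2.5-flash",
    prompt="Explain how AI works in a few words",
    api_key="YOUR_API_KEY",
    temperature=0.2,
)
```

The three returned values map one-to-one onto a cURL command: the URL, the -H headers, and the -d payload.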

Implementing the Gemini API in Python: A Step-by-Step Example

Integrating the Gemini API into a Python application is a simple process, facilitated by the google-genai SDK.

Step 1: Installation

The first step is to install the necessary Python package using pip.

Bash

pip install -q -U google-genai

Step 2: Authentication

A safer method for authentication than hardcoding the key is to set it as an environment variable. The SDK will automatically detect and use it. On a Linux or macOS system, this can be done by adding the following line to the shell profile (e.g., ~/.bashrc or ~/.zshrc):

Bash

export GEMINI_API_KEY='YOUR_API_KEY'

For quick, temporary testing, the key can also be passed directly when initializing the client, though this is not recommended for production environments.
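When an application needs the key itself, for example to pass it to the client explicitly, a small guard that fails early with a clear message can save debugging time. The sketch below is one way to do this; load_api_key is a hypothetical helper, and the optional env argument exists only so the function can be exercised without touching the real environment.

```python
import os
from typing import Mapping, Optional


def load_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the Gemini API key from the environment, or fail with a clear error."""
    source = os.environ if env is None else env
    key = source.get("GEMINI_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "GEMINI_API_KEY is not set; export it in your shell profile before running."
        )
    return key


# Example with an explicit mapping instead of the real environment.
demo_key = load_api_key({"GEMINI_API_KEY": "  demo-key-123  "})
```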

Step 3: Making a Request

A basic API call involves importing the library, creating a client (which reads the API key from the GEMINI_API_KEY environment variable unless one is passed explicitly), and calling the generate_content method with a model name and a prompt.

Python

from google import genai

# Create the client; with no arguments it reads the API key from the

# GEMINI_API_KEY environment variable set in Step 2

client = genai.Client()

# Send the prompt to the selected model and generate content

response = client.models.generate_content(model='gemini-2.5-flash', contents='Explain how AI works in a few words')

# Print the text part of the response

print(response.text)

Best Practices for API Key Security

The security of API keys is paramount, as a compromised key could lead to unauthorized use of a developer’s quota, unexpected billing charges, and access to private data. The following best practices are critical:

- Never Expose Keys Client-Side: API keys should never be embedded directly in client-side code, such as in JavaScript for a web application or in a mobile app (Android or iOS). Keys in these environments can be easily extracted by malicious actors.

- Use Server-Side Implementation: The most secure architecture involves making all API calls from a server-side backend. The API key can be stored securely on the server as an environment variable, inaccessible to the end-user’s browser or device.

- Do Not Commit Keys to Version Control: API keys must never be committed to source code repositories like GitHub. Use environment variables or secret management systems to handle credentials in development and production environments.

- Use Key Restrictions: When possible, add restrictions to API keys in the Google Cloud Console to limit which services they can access, reducing the potential damage if a key is leaked.
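One lightweight way to back up the version-control rule is a scan for strings that look like keys before code is committed. The sketch below is a heuristic only: it assumes Google API keys follow the commonly seen shape of "AIza" followed by 35 URL-safe characters, and find_hardcoded_keys is a hypothetical helper, not part of any Google tool.

```python
import re
from typing import List, Tuple

# Heuristic pattern (assumption): "AIza" followed by 35 URL-safe characters.
KEY_PATTERN = re.compile(r"AIza[0-9A-Za-z_\-]{35}")


def find_hardcoded_keys(source: str) -> List[Tuple[int, str]]:
    """Return (line number, redacted match) for every key-like string in `source`."""
    findings = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        for match in KEY_PATTERN.finditer(line):
            # Redact the match so the scan output does not leak the key again.
            findings.append((line_number, match.group()[:8] + "..."))
    return findings


# A fake key built from filler characters, used only to exercise the scanner.
fake_key = "AIza" + "x" * 35
sample_source = (
    "import os\n"
    f'api_key = "{fake_key}"\n'
    'safe_key = os.environ["GEMINI_API_KEY"]\n'
)
findings = find_hardcoded_keys(sample_source)
```

A check like this could run as a pre-commit hook; it complements, rather than replaces, environment variables and secret managers.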

Advanced Capabilities for Next-Generation Applications

Beyond basic text generation, Google AI Studio unlocks a suite of advanced capabilities that enable developers to build sophisticated, dynamic, and truly next-generation AI applications. These features, centered around function calling, multimodality, and agentic behavior, represent the cutting edge of what is possible with generative AI.

Function Calling: Connecting Gemini to the World

Function calling is a transformative feature that allows developers to connect the Gemini models to external tools, databases, and APIs. This gives the model the ability to go beyond its training data to fetch real-time information, interact with proprietary systems, and perform actions in the real world.

The process works through a multi-step interaction between the developer’s application and the model:

- Declaration: The developer defines the structure of an available function in their code, including its name, a clear description of what it does, and the parameters it accepts (with their types and descriptions). This “function declaration” is passed to the model as part of the API request.

- Model Inference: When a user provides a prompt (e.g., “What’s the weather like in Boston?”), the model analyzes the text and the available function declarations. If it determines that calling a function is the best way to answer the request, it does not generate a text response. Instead, it outputs a structured JSON object containing the name of the function to call (get_current_weather) and the arguments to use ({"location": "Boston, MA"}).

- Application Execution: Crucially, the Gemini model does not execute the function itself. The developer’s application receives the JSON object, parses it, and then calls the actual get_current_weather function in its own code, which might involve making a call to an external weather API.

- Response to Model: The application takes the result from the executed function (e.g., {"temperature": "72F", "conditions": "Sunny"}) and sends it back to the model in a subsequent API call.

- Final Generation: The model receives the function’s output, understands its context, and formulates a final, natural language response for the user, such as, “The current weather in Boston is 72 degrees and sunny.”

This mechanism allows for the creation of powerful AI assistants that can check order statuses, book appointments, query databases, and interact with any system that exposes an API.
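The five steps above can be sketched end to end without calling any real service. In the example below, fake_model is a hypothetical stand-in for the Gemini model that returns canned responses, and get_current_weather returns fixed data instead of querying a weather API; only the shape of the exchange is meant to be representative.

```python
from typing import Callable, Dict, List, Optional

# Step 1 (Declaration): describe the function so the model knows it exists.
WEATHER_DECLARATION = {
    "name": "get_current_weather",
    "description": "Get the current weather for a given location.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string", "description": "City and state, e.g. Boston, MA"}
        },
        "required": ["location"],
    },
}


def get_current_weather(location: str) -> dict:
    """Stand-in for a real weather API call; returns fixed sample data."""
    return {"temperature": "72F", "conditions": "Sunny"}


LOCAL_FUNCTIONS: Dict[str, Callable[..., dict]] = {
    "get_current_weather": get_current_weather
}


def fake_model(prompt: str, declarations: List[dict],
               function_result: Optional[dict] = None) -> dict:
    """Hypothetical model stub: first asks for a function call, then writes the answer."""
    if function_result is None:
        # Step 2 (Model Inference): structured call instead of text.
        return {"function_call": {"name": "get_current_weather",
                                  "args": {"location": "Boston, MA"}}}
    # Step 5 (Final Generation): natural-language answer from the function output.
    degrees = function_result["temperature"].rstrip("F")
    conditions = function_result["conditions"].lower()
    return {"text": f"The current weather in Boston is {degrees} degrees and {conditions}."}


def answer(prompt: str) -> str:
    first = fake_model(prompt, [WEATHER_DECLARATION])
    call = first.get("function_call")
    if call is None:
        return first["text"]
    # Step 3 (Application Execution): the application, not the model, runs the function.
    result = LOCAL_FUNCTIONS[call["name"]](**call["args"])
    # Step 4 (Response to Model): send the result back in a follow-up call.
    final = fake_model(prompt, [WEATHER_DECLARATION], function_result=result)
    return final["text"]


reply = answer("What's the weather like in Boston?")
```

Looking functions up in a dictionary keeps the application in control of which functions the model is allowed to trigger.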

Unlocking Multimodality: Working with Images, Video, and Real-Time Streams

Gemini models are natively multimodal, meaning they can understand and process information from multiple formats simultaneously. Google AI Studio provides a seamless and powerful interface to leverage these capabilities.

- Image Understanding and Generation: Users can upload images directly into the chat interface and ask complex questions about their content. This can range from simple object identification to sophisticated tasks like extracting all the text from a document scan or reverse-engineering a complete brand style guide (color palette, typography, tone) from a single presentation slide. Furthermore, with models like “Nano Banana” (Gemini 2.5 Flash Image), users can generate new images from text prompts and perform complex in-context editing using natural language commands.

- Video and Audio Analysis: The platform’s capabilities extend to time-based media. A user can provide a YouTube video link, and AI Studio can effectively “watch” the video, providing summaries of key insights, describing on-screen visuals, analyzing the presenter’s tone and pacing, and even transcribing spoken content. It can similarly process and analyze uploaded audio files.

- Real-Time Streaming: A truly differentiating feature is the ability to stream a live feed from a user’s camera or desktop directly to the model. This enables powerful, interactive use cases. For example, a user can share their screen while working in a complex application like Figma or Excel and receive real-time, step-by-step coaching from Gemini. Another user could stream their camera feed while practicing a presentation to get immediate feedback on their body language, vocal tone, and delivery.
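For image understanding, the API equivalent of dragging a file into the chat is to attach the image data as a part of the request. The sketch below assumes the REST inline_data form with a MIME type and base64-encoded bytes; the helper names and the placeholder bytes are hypothetical, and larger media would typically be uploaded separately rather than sent inline.

```python
import base64


def image_part(image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Wrap raw image bytes as an inline, base64-encoded request part."""
    return {
        "inline_data": {
            "mime_type": mime_type,
            "data": base64.b64encode(image_bytes).decode("ascii"),
        }
    }


def build_multimodal_request(question: str, image_bytes: bytes,
                             mime_type: str = "image/png") -> dict:
    """Combine one image and one text question into a single user turn."""
    return {
        "contents": [
            {
                "role": "user",
                "parts": [image_part(image_bytes, mime_type), {"text": question}],
            }
        ]
    }


# Placeholder bytes stand in for a real file read with open(path, "rb").read().
placeholder_image = b"\x89PNG placeholder bytes"
multimodal_request = build_multimodal_request(
    "Extract all the text from this document scan.", placeholder_image
)
```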

The Rise of Agentic AI: Automating Tasks with Gemini 2.5 Computer Use

A new, highly specialized model available for preview in AI Studio, known as Gemini 2.5 Computer Use, represents a significant leap towards creating autonomous AI agents. This model is designed to understand and interact with graphical user interfaces, allowing it to control a web browser in a manner that mimics human behavior.

It can perform a sequence of actions—such as clicking buttons, typing into forms, scrolling through pages, and dragging and dropping elements—to complete complex tasks that span multiple websites. For example, it could be tasked with managing a pet spa appointment by navigating the booking website, selecting a service, and filling out the confirmation form. The model operates in a continuous loop: it receives a screenshot of the current screen, analyzes the user’s high-level request, generates the next appropriate UI action, and repeats this process until the task is complete. This capability is already being used internally at Google to automate UI testing and successfully fix over 60% of failed test runs.
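The screenshot, action, repeat cycle described above can be sketched as a simple control loop. Everything in the example below is a stand-in: FakeBrowser simulates a three-page booking flow, and scripted_policy plays the role of the model by returning hard-coded actions. None of these names come from the Computer Use model's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Action:
    kind: str          # "click", "type", or "done"
    target: str = ""   # UI element the action applies to
    text: str = ""     # text to enter for "type" actions


@dataclass
class FakeBrowser:
    """Toy browser whose 'screenshot' is a text description of its state."""
    page: str = "home"
    form: Dict[str, str] = field(default_factory=dict)

    def screenshot(self) -> str:
        return f"page={self.page} form={sorted(self.form.items())}"

    def execute(self, action: Action) -> None:
        if action.kind == "click" and action.target == "Book appointment":
            self.page = "booking"
        elif action.kind == "type":
            self.form[action.target] = action.text
        elif action.kind == "click" and action.target == "Confirm":
            self.page = "confirmed"


def scripted_policy(goal: str, screenshot: str, history: List[Action]) -> Action:
    """Stand-in for the model: choose the next UI action from the current screen."""
    if "page=home" in screenshot:
        return Action("click", "Book appointment")
    if "page=booking" in screenshot and "service" not in screenshot:
        return Action("type", "service", "Bath and trim")
    if "page=booking" in screenshot:
        return Action("click", "Confirm")
    return Action("done")


def run_agent(goal: str, browser: FakeBrowser,
              propose_action: Callable[[str, str, List[Action]], Action],
              max_steps: int = 10) -> List[Action]:
    """Loop: capture the screen, ask for the next action, execute, repeat."""
    history: List[Action] = []
    for _ in range(max_steps):
        action = propose_action(goal, browser.screenshot(), history)
        if action.kind == "done":
            break
        browser.execute(action)
        history.append(action)
    return history


browser = FakeBrowser()
actions = run_agent("Book a pet spa appointment", browser, scripted_policy)
```

The max_steps cap is a design choice worth keeping in any real agent loop, since it bounds the cost of a task the model cannot complete.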

Model Customization: An Overview of Tuning

For applications that require a high degree of specialization, Google AI Studio provides capabilities for model tuning (often referred to as fine-tuning). This process allows developers to adapt a foundation model to better suit a specific use case. Users can upload their own datasets, typically in a structured format like a CSV file, containing examples of the desired input-output behavior. The platform then uses this data to train a new, customized version of the model.

Tuning is ideal for creating specialized AI assistants, such as a customer service chatbot that is an expert on a specific company’s products and speaks in the company’s brand voice, or an AI tool tailored to a niche industry’s jargon and knowledge base. While this is a powerful feature for achieving higher quality and consistency, the availability of specific models for tuning can vary as the platform evolves.
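Preparing the dataset is usually the first practical step. The sketch below shows one way to load input-output pairs from CSV text and drop incomplete rows before upload; the column names "input" and "output", the helper load_tuning_examples, and the sample rows are all assumptions for illustration, since the exact format a tuning job expects may differ.

```python
import csv
import io
from typing import Dict, List


def load_tuning_examples(csv_text: str, input_column: str = "input",
                         output_column: str = "output") -> List[Dict[str, str]]:
    """Parse CSV text into input/output example pairs, skipping incomplete rows."""
    reader = csv.DictReader(io.StringIO(csv_text))
    examples = []
    for row in reader:
        text_in = (row.get(input_column) or "").strip()
        text_out = (row.get(output_column) or "").strip()
        if text_in and text_out:
            examples.append({"input": text_in, "output": text_out})
    return examples


# Hypothetical customer-service examples; the last row has no answer and is skipped.
SAMPLE_CSV = (
    "input,output\n"
    '"How do I reset my password?","Go to Settings > Account > Reset password."\n'
    '"Do you ship internationally?","Yes, we ship to many countries."\n'
    '"Where is my order?",\n'
)
tuning_examples = load_tuning_examples(SAMPLE_CSV)
```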

A Spectrum of Applications: Real-World Use Cases

The combination of accessibility, power, and advanced features in Google AI Studio enables a vast spectrum of applications, ranging from individual productivity enhancements to complex, enterprise-scale business solutions. The platform serves as a versatile engine for innovation across numerous domains.

Productivity and Creative Acceleration for Individuals

For individual users, writers, students, and content creators, AI Studio acts as a powerful co-pilot, automating time-consuming tasks and augmenting creative processes.

- Writing Assistance: The platform is an invaluable tool for all stages of the writing process, from brainstorming initial ideas and creating detailed outlines to editing and refining final drafts.

- Content Creation: AI Studio can dramatically accelerate content production. A user can generate a complete, SEO-friendly blog post, including headers, sections, and multiple high-resolution, AI-generated images, all from a single, comprehensive prompt. It can also draft professional emails and engaging social media posts tailored to a specific tone and audience.

- Summarization and Research: Leveraging the massive context window of models like Gemini 2.5 Pro (up to 1 million tokens), users can upload or paste hundreds of pages of text, such as lengthy reports, academic papers, or meeting transcripts, and receive a concise summary of the key findings, trends, and actionable insights. A window of this size can hold roughly five 700-page PDFs at once.

- Personalized Learning: AI Studio can function as a personal tutor. By providing the model with a collection of learning materials on a specific topic—including videos, notes, and articles—a user can have Gemini create a unique, personalized study plan or explain complex concepts in a simplified manner.
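
The "five 700-page PDFs" figure in the summarization item can be sanity-checked with simple arithmetic. The sketch below assumes a flat cost of 258 tokens per PDF page; that per-page figure is an assumption used for illustration, and text-dense pages or pasted plain text may consume more. The function names are hypothetical.

```python
CONTEXT_WINDOW_TOKENS = 1_000_000   # window size quoted in the text
ASSUMED_TOKENS_PER_PDF_PAGE = 258   # assumption: flat per-page cost for PDF input


def pages_that_fit(
    window_tokens: int = CONTEXT_WINDOW_TOKENS,
    tokens_per_page: int = ASSUMED_TOKENS_PER_PDF_PAGE,
    reserved_tokens: int = 0,
) -> int:
    """Whole pages that fit after reserving room for the prompt and the answer."""
    return (window_tokens - reserved_tokens) // tokens_per_page


def documents_that_fit(pages_per_document: int, reserved_tokens: int = 0) -> int:
    """Whole documents of a given length that fit in the window."""
    return pages_that_fit(reserved_tokens=reserved_tokens) // pages_per_document


total_pages = pages_that_fit()             # 1_000_000 // 258
full_documents = documents_that_fit(700)   # total_pages // 700
```

Under these assumptions the window holds 3,875 pages, or five complete 700-page documents with a few hundred pages to spare. Reserving tokens for instructions and the model's response lowers the page budget accordingly.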

Advanced Solutions for Professionals and Businesses

For developers, marketers, and business professionals, AI Studio provides tools to build sophisticated solutions and streamline complex workflows.

- Data Analysis: The long context window makes it practical to analyze large bodies of unstructured business text in a single pass. A company could feed the model hundreds of pages of customer feedback surveys or internal communications and ask it to identify recurring themes, sentiment trends, risks, and strategic opportunities, a task that would traditionally require significant manual effort.

- No-Code App Development: In one of its most impressive demonstrations of capability, AI Studio can generate a fully functional, multi-page web application from a simple natural language description. A prompt like, “Create a personal productivity app with a savings tracker, task list, and private journal,” can result in a working app with UI elements, inputs, and local storage, all generated in under a minute.

- Marketing and Branding: The platform’s multimodal capabilities offer powerful tools for marketers. A user can upload a competitor’s slide deck and instruct Gemini to reverse-engineer their brand identity, producing a complete style guide with color codes, typography hierarchy, and an analysis of their narrative style. The integrated Veo video generation model can create short, cinematic clips suitable for social media campaigns or website banners.

- Software Development and UI Testing: The agentic capabilities of the Gemini 2.5 Computer Use model are being applied to solve real-world software engineering challenges. As demonstrated by Google’s own payments team, the model can be used to automate the testing of user interfaces, identify the cause of broken test runs, and successfully recover from failures, significantly improving development efficiency.

Industry Spotlights: Applications in Logistics, Automotive, and More

The capabilities prototyped in AI Studio and deployed via Vertex AI are already driving significant value in various industries.

- Supply Chain and Logistics: Domina, a Colombian logistics company, uses Gemini to predict package returns and automate delivery validation, resulting in an 80% improvement in real-time data access and a 15% increase in delivery effectiveness. Similarly, Moglix, an Indian supply chain platform, achieved a 4x improvement in sourcing team efficiency by deploying a generative AI vendor discovery solution.

- Automotive: The automotive industry is leveraging these technologies for both manufacturing and in-car experiences. BMW Group uses AI to create digital twins of its assets to simulate and optimize supply chain efficiency. Mercedes-Benz is developing vehicles with conversational AI capabilities, allowing drivers to talk to their cars in a natural way.

- Customer Service: The Taiwanese electric vehicle brand LUXGEN built an AI agent using Vertex AI to handle customer questions on its official LINE account. This chatbot successfully reduced the workload of human customer service agents by 30%, allowing them to focus on more complex issues.

Competitive Landscape: Google AI Studio vs. OpenAI Playground

To make an informed decision about adopting Google AI Studio, it is essential to compare it rigorously against its primary competitor in the developer-focused AI experimentation space: the OpenAI Playground. While both platforms serve a similar purpose—providing access to state-of-the-art language models for prototyping—they have distinct philosophies, feature sets, and strategic advantages.

A Head-to-Head Comparison

The following table provides a detailed, feature-by-feature analysis of Google AI Studio and the OpenAI Playground, reflecting the state of the platforms in 2025. This comparison is based on a synthesis of technical documentation, industry analysis, and user reports.

| Feature/Capability | Google AI Studio | OpenAI Playground | Analyst’s Verdict |
|---|---|---|---|
| Core Models | Direct access to the Gemini family (e.g., 2.5 Pro, 2.5 Flash, Veo 3, Imagen). | Direct access to the GPT family (e.g., GPT-4.1, GPT-4.1 mini, GPT-4.1 nano). | Parity. Both platforms provide access to their respective flagship models. The choice depends on which model family’s performance characteristics best suit the specific use case. |
| Multimodality | Native, deeply integrated processing of text, images, video, and audio. Features real-time screen/camera streaming. | Strong image understanding (vision). Audio processing is handled by integrating separate services like Whisper (speech-to-text) and TTS. | Google Advantage. AI Studio’s native, all-in-one handling of diverse media types, especially video and real-time streams, offers a more seamless and powerful multimodal development experience. |
| Function/Tool Calling | Supports both OpenAI-compatible function calling schemas and native structured output for flexibility. | Features a mature and well-established ecosystem for agents and tool use, with extensive community support and examples. | Slight OpenAI Advantage. While Google offers compatibility, OpenAI’s ecosystem is more mature and widely adopted, with a larger volume of third-party libraries and community-driven examples. |
| Grounding | Native, first-party integration with Google Search for real-time fact-checking and providing citations. Reduces hallucinations. | Relies on Retrieval-Augmented Generation (RAG) patterns implemented through agent/tool setups to access web information. | Google Advantage. The built-in, one-click grounding feature is a significant differentiator, simplifying the process of building factually accurate applications and providing verifiable sources. |
| Context Window | Up to 1 million tokens with Gemini 2.5 Pro, enabling analysis of extremely large documents. | Up to 1 million tokens with GPT-4.1. | Parity. Both flagship models offer a context window of about 1 million tokens, which suits use cases involving the analysis of massive datasets, codebases, or extensive documentation. |
| Developer Experience | Features an integrated code editor (“Starter Apps”), a “Get Code” button for multiple languages, and a clean UI. | Benefits from a very mature API, extensive and high-quality documentation, and a vast, active developer community. | Competitive. Google excels in lowering the initial friction from idea to code. OpenAI excels in the breadth and depth of its surrounding ecosystem and community knowledge base. |
| Deployment Path | Provides a clear, documented, and secure migration path to the enterprise-grade Google Cloud Vertex AI platform. | The production path is via the OpenAI API and its hosted services, with enterprise controls available. | Google Advantage. The seamless on-ramp to a full-fledged MLOps platform (Vertex AI) offers a more comprehensive and strategically integrated path to enterprise scale, security, and governance. |
| Pricing & Free Tier | AI Studio playground is completely free to use. A generous free tier is also available for the Gemini API. | Playground usage is tied to an API key and incurs costs based on token consumption. | Google Advantage. The zero-cost barrier to entry for the AI Studio playground makes it significantly more accessible for learning, experimentation, and early-stage prototyping, especially for individuals and teams with limited budgets. |
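
To make the Function/Tool Calling comparison concrete, the sketch below shows the general shape of an OpenAI-compatible function declaration and how an application might dispatch a call the model emits. It makes no network request. The `get_order_status` tool, its schema, and the `dispatch_tool_call` helper are hypothetical examples, not part of either platform’s SDK.

```python
import json
from typing import Callable, Dict


def get_order_status(order_id: str) -> dict:
    """Hypothetical backend lookup that the model is allowed to trigger."""
    return {"order_id": order_id, "status": "shipped"}


# Declaration in the OpenAI-compatible style: a name, a description,
# and a JSON Schema object describing the arguments.
ORDER_STATUS_TOOL = {
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of a customer order.",
        "parameters": {
            "type": "object",
            "properties": {
                "order_id": {"type": "string", "description": "Order identifier."},
            },
            "required": ["order_id"],
        },
    },
}

# Maps declared tool names to their schema and the Python function behind them.
TOOLS: Dict[str, dict] = {"get_order_status": ORDER_STATUS_TOOL}
HANDLERS: Dict[str, Callable[..., dict]] = {"get_order_status": get_order_status}


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Validate a model-emitted call and run the matching local function."""
    if name not in HANDLERS:
        raise KeyError(f"model requested an undeclared tool: {name}")
    arguments = json.loads(arguments_json)   # arguments arrive as a JSON string
    required = TOOLS[name]["function"]["parameters"]["required"]
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return json.dumps(HANDLERS[name](**arguments))  # result is sent back to the model


result = dispatch_tool_call("get_order_status", '{"order_id": "A-17"}')
```

The model never executes anything itself: it only returns a tool name and arguments, and the application decides whether to run the call. Checking the name and required arguments before dispatching is a small guard against malformed or unexpected requests.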

Strategic Differentiators: Where Google Excels

Based on the head-to-head comparison, several key strategic differentiators emerge for Google AI Studio:

- Deep Ecosystem Integration: AI Studio’s most significant advantage is its native and seamless integration with the broader Google ecosystem. The ability to pull files directly from Google Drive, ground responses with the unparalleled index of Google Search, and migrate projects to Google Cloud’s Vertex AI for enterprise deployment creates a cohesive and powerful development environment that is difficult for competitors to replicate.

- Superior Native Multimodality: While OpenAI has strong vision capabilities, Google’s native, all-in-one approach to handling diverse data types—especially video, audio, and real-time screen streaming—is a clear advantage. This allows developers to build more complex and interactive multimodal applications with less friction and fewer dependencies on separate services.

- Unmatched Accessibility and Cost-Effectiveness: The decision to make the AI Studio playground completely free is a powerful strategic move. It removes all barriers to entry for experimentation, making it the default choice for students, individual developers, and teams operating on a tight budget. This fosters a large and diverse user base, creating a strong funnel for future adoption of Google’s paid AI services.

Making the Choice: Guidance for Developers and Organizations

The decision between Google AI Studio and the OpenAI Playground should be driven by the specific requirements of the project and the strategic orientation of the organization.

- Choose Google AI Studio if:

  - The project heavily relies on multimodal inputs, particularly video, audio, or real-time screen sharing.

  - Native, verifiable grounding against real-time web search with citations is a critical requirement for accuracy and trust.

  - The organization is already invested in or plans to use the Google Cloud ecosystem, making the migration path to Vertex AI a significant advantage for scalability and governance.

  - A robust free tier for extensive prototyping and experimentation is a primary consideration.

- Choose OpenAI Playground if:

  - The development team is already heavily invested in OpenAI’s SDKs, libraries (like LangChain, which has deep roots in the OpenAI ecosystem), and the agentic programming paradigm.

  - The project requires access to a specific model from the OpenAI catalog that has demonstrated superior performance on a particular benchmark relevant to the use case.

  - Leveraging the broadest possible ecosystem of community support, third-party tooling, and pre-existing code examples is a top priority.

Conclusion and Future Outlook

Google AI Studio has firmly established itself as more than just a developer playground; it is a strategic, powerful, and exceptionally accessible gateway to the entire Google AI ecosystem. It successfully democratizes access to some of the world’s most advanced generative AI models, providing a low-risk, high-reward environment for innovation. The platform’s strengths in native multimodality, deep integration with Google services, and a clear, secure path to enterprise-grade deployment on Vertex AI make it a compelling choice for a wide range of users, from individual creators to large corporations.

Strategic Recommendations for Adoption

For developers, researchers, and business professionals looking to explore the frontiers of generative AI, Google AI Studio is an essential tool. The recommended approach for adoption is a phased strategy:

- Experiment and Prototype: Use the free, unrestricted environment of AI Studio for rapid, iterative prototyping and the development of proofs-of-concept. Leverage the full suite of tools—from parameter tuning to multimodal inputs—to validate ideas and refine application logic.

- Transition to Code: Once a use case is validated, utilize the “Get Code” feature to accelerate the transition from the playground to a standalone application.

- Secure and Scale: For production needs, plan a migration strategy away from client-side API keys. Implement a secure server-side backend to manage API calls or, for applications requiring full scalability and MLOps capabilities, begin the documented migration process to Google Cloud’s Vertex AI platform.
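
The "Secure and Scale" step can be illustrated with a minimal server-side helper that keeps the API key out of client code. This is a sketch under stated assumptions: the environment variable name `GEMINI_API_KEY`, the default model name, and both function names are choices made here for illustration, and a production backend would add authentication, rate limiting, and error handling.

```python
import json
import os
import urllib.request

# Gemini API REST endpoint for text generation; the model is filled in per request.
GEMINI_ENDPOINT = (
    "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"
)


def build_upstream_request(
    prompt: str, api_key: str, model: str = "gemini-2.5-flash"
) -> urllib.request.Request:
    """Build the server-to-Google request; the key travels in a header, not the URL."""
    body = json.dumps({"contents": [{"parts": [{"text": prompt}]}]}).encode("utf-8")
    return urllib.request.Request(
        GEMINI_ENDPOINT.format(model=model),
        data=body,
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def handle_client_prompt(prompt: str, send=urllib.request.urlopen) -> dict:
    """Called by the backend route: the browser sends only the prompt, never a key."""
    api_key = os.environ["GEMINI_API_KEY"]   # assumed variable name, set on the server
    request = build_upstream_request(prompt, api_key)
    with send(request) as response:          # `send` is injectable so tests avoid the network
        return json.loads(response.read().decode("utf-8"))
```

A web framework route would call `handle_client_prompt` with the text received from the browser and return the parsed response. Because the key is read from the server environment, rotating or revoking it does not require shipping a new client build.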

The Trajectory of Development: What to Expect Next

The evolution of Google AI Studio points toward an even more integrated and powerful future. The platform’s increasing focus on providing not just examples but fully functional “Starter Apps” complete with built-in code editors signifies a clear product direction. This “show, don’t just tell” approach to developer onboarding is a powerful strategy. It moves beyond simply demonstrating a model’s capabilities and instead provides developers with tangible, modifiable codebases for complex applications directly within the browser. This trend suggests a future where AI Studio evolves from a “playground” for prompts into a true “studio” for application development, where a significant portion of the development lifecycle can occur without ever leaving the web interface.

Furthermore, the continued advancement of agentic capabilities, exemplified by models like Gemini 2.5 Computer Use, and deeper integrations with productivity suites like Google Workspace will likely be key areas of focus. This trajectory will continue to blur the lines between a specialized developer tool and a universal productivity engine. Google AI Studio is poised to remain at the forefront of applied AI, offering users a direct and immediate connection to Google’s latest and most impactful innovations as they emerge.