Digital Marketing Experiment Velocity: High-Tempo Growth

Section 1: The New Competitive Advantage: Mastering Experiment Velocity

In the contemporary digital landscape, the capacity for an organization to learn and adapt is its primary competitive advantage. The static, long-range marketing plan has been rendered obsolete by an environment of constant change in customer expectations, acquisition channels, and competitive pressures. In this context, the concept of experiment velocity emerges not merely as a measure of activity, but as a core strategic imperative. It represents the momentum of an organization’s learning cycle—its ability to systematically test hypotheses, generate insights, and translate that knowledge into tangible business growth. Mastering this discipline is the foundation of a modern, high-performing marketing function.

1.1. Beyond Speed: Defining True Experiment Velocity

The term “A/B testing velocity” is often defined simply as the number of experiments launched within a given timeframe. For instance, a team running six tests per month is said to have a higher velocity than a team running one. While this quantitative measure is a useful starting point, it is fundamentally incomplete. A more sophisticated and strategically valuable understanding distinguishes between “speed” and “velocity.” Speed is a scalar quantity, measuring only how fast something is moving, whereas velocity is a vector, measuring how fast something is moving in a specific, intended direction.

This distinction is critical in the context of digital marketing. A team can achieve high speed by running a large volume of low-quality tests, for example, launching 50 minor button color tests in a single month. While the activity level is high, the strategic direction is absent. This often leads to what Eric Ries terms “achieving failure”: the successful execution of a bad plan, where a campaign is delivered on time and on budget but fails to produce any meaningful increase in lead generation or revenue.

True experiment velocity, therefore, is a measure of both the quantity of work performed and its quality, which is defined by its alignment with specific business outcomes. The goal is not simply to be busy, but to be busy making progress toward quantifiable company objectives, such as increasing revenue, reducing operational costs, or improving customer satisfaction. This paradigm shifts the focus of performance measurement away from monitoring outputs like traffic and leads, which are lagging indicators, toward monitoring inputs like the quantity and quality of experiments, which are the controllable levers of growth. The historical growth of companies like Twitter, which accelerated its testing cadence from 0.5 to 10 tests per week during a period of rapid expansion, exemplifies the correlation between high-velocity, directed experimentation and significant business growth.

1.2. The Economics of Learning: Why a Higher Velocity Translates to Compounding Growth

At its core, a high-velocity testing program is built on the philosophy that the more tests you run, the more knowledge you gain about how to grow your business. Each experiment, regardless of whether it “wins” or “loses,” is a transaction that purchases a piece of knowledge. A winning test validates a hypothesis and provides a direct lift to a key metric. A losing test is equally valuable, as it invalidates a hypothesis and prevents the organization from investing significant resources in a flawed strategy, providing crucial data about user behavior and preferences.

This process of knowledge acquisition creates a compounding effect. A higher velocity translates directly to a faster rate of learning. This accelerated learning cycle allows an organization to respond with greater agility to shifts in the market environment, competitive strategy, or customer feedback, transforming its responsiveness into a durable competitive advantage. Industry studies report a positive correlation between increased testing velocity and improved return on investment (ROI).

The economic imperative for high velocity is further amplified by the inherently low success rate of individual experiments. The average industry win rate for A/B tests hovers between 12% and 15%. This statistical reality means that to discover the handful of winning ideas that will drive substantial growth, a large volume of hypotheses must be tested. An organization that tests slowly is statistically unlikely to uncover these breakthrough insights in a timely manner. High-velocity testing is, therefore, a strategic necessity for systematically navigating the uncertainty of the market and efficiently identifying the most effective paths to growth. Companies like Booking.com, which at one point had 1,000 tests running simultaneously, consider this high-tempo approach a critical component of their growth strategy.
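To make the win-rate arithmetic concrete, the short Python sketch below treats each test as an independent trial with a fixed win rate. That independence assumption is a simplification (tests on the same page or audience are rarely fully independent), and the function names are illustrative only.

```python
def expected_winners(num_tests: int, win_rate: float) -> float:
    """Expected number of winning tests, assuming each test wins independently."""
    return num_tests * win_rate


def prob_at_least_one_win(num_tests: int, win_rate: float) -> float:
    """Probability that at least one of `num_tests` independent tests is a winner."""
    return 1.0 - (1.0 - win_rate) ** num_tests


WIN_RATE = 0.12  # low end of the 12-15% industry range quoted above

# Compare a slow cadence (2 tests/month) with a faster one (10 tests/month) over one quarter.
for tests_per_month in (2, 10):
    n = tests_per_month * 3
    print(
        f"{n:>2} tests/quarter: "
        f"expected winners = {expected_winners(n, WIN_RATE):.1f}, "
        f"P(at least one win) = {prob_at_least_one_win(n, WIN_RATE):.0%}"
    )
```

With a 12% win rate, six tests a quarter give roughly a 54% chance of finding even one winner, while thirty tests push that to about 98% and raise the expected number of winners from under one to about 3.6.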

1.3. Quantifying Success: Key Metrics for a High-Impact Experimentation Program

To effectively manage and scale an experimentation program, it is essential to move beyond anecdotal success and implement a rigorous measurement framework. A mature program tracks a balanced scorecard of metrics that assess quantity, quality, and overall business impact.

Core Program Metrics:

- Quantity Metrics: These metrics measure the throughput of the experimentation engine.

  - Testing Capacity: An assessment of the maximum number of tests the team and its resources can support.

  - Testing Velocity: The actual number of experiments launched per month or per quarter.

  - Testing Coverage: The percentage of high-value pages (e.g., homepage, product pages, checkout) that have an active test running at all times. Not testing on a critical page represents a wasted learning opportunity.

- Quality & Impact Metrics: These metrics evaluate the effectiveness and business value of the experiments being run.

  - Win Rate: The percentage of completed experiments that produce a statistically significant positive lift in the primary metric.

  - Lift Amount: The average percentage uplift generated by winning experiments.

  - Expected Value: A calculated metric that combines win rate and lift amount to estimate the potential value of the testing program.

  - Return on Investment (ROI): The ultimate measure of the program’s financial contribution to the business.

- Efficiency Metrics: This category assesses the speed of the learning cycle.

  - Time from Idea to Insight: The average time it takes to move an experiment through the entire workflow, from initial hypothesis to conclusive, documented learning.
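Several of these scorecard metrics can be computed directly from a simple log of completed experiments. The sketch below is a minimal illustration under stated assumptions: the `CompletedExperiment` record and its fields are hypothetical, and Expected Value is taken here as win rate multiplied by the average winning lift, which is one plausible way to combine the two rather than a standard definition. Capacity, ROI, and time from idea to insight need cost and timing data and are left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CompletedExperiment:
    """Hypothetical record of one finished experiment."""
    name: str
    significant_win: bool          # significant positive lift on the primary metric?
    lift: Optional[float] = None   # relative uplift of a winner, e.g. 0.05 for +5%


def scorecard(experiments: list[CompletedExperiment], months: float,
              high_value_pages: set[str], pages_with_active_test: set[str]) -> dict[str, float]:
    """Compute velocity, win rate, average winning lift, expected value and coverage."""
    n = len(experiments)
    winners = [e for e in experiments if e.significant_win]
    win_rate = len(winners) / n if n else 0.0
    avg_lift = sum(e.lift or 0.0 for e in winners) / len(winners) if winners else 0.0
    covered = high_value_pages & pages_with_active_test
    return {
        "velocity_per_month": n / months,
        "win_rate": win_rate,
        "avg_winning_lift": avg_lift,
        # Assumed definition: expected relative uplift per experiment run.
        "expected_value_per_test": win_rate * avg_lift,
        "coverage": len(covered) / len(high_value_pages) if high_value_pages else 0.0,
    }


results = [
    CompletedExperiment("hero headline", True, 0.05),
    CompletedExperiment("cta color", False),
    CompletedExperiment("pricing table layout", True, 0.03),
    CompletedExperiment("checkout trust badges", False),
]
card = scorecard(results, months=1.0,
                 high_value_pages={"home", "product", "checkout"},
                 pages_with_active_test={"home", "checkout", "blog"})
print(card)
```

In this made-up month, four experiments with two winners give a 50% win rate, a 4% average winning lift, an expected value of 2% per test, and two of the three high-value pages covered.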

As an experimentation program matures, its measurement framework must also evolve. Relying solely on the raw number of experiments—experiment velocity—can create perverse incentives. A team measured only on the quantity of tests may resort to running dozens of low-quality, low-insight experiments, such as minor copy changes or button color tests, simply to inflate their numbers. This increases activity but not necessarily business value.

To counteract this, a more sophisticated metric, Learning Velocity, should be introduced. Learning velocity measures the rate at which a team generates conclusive, validated learnings per week or month. This shifts the focus from the activity (running tests) to the desired outcome (generating knowledge). By tracking both experiment velocity and learning velocity, an organization can calculate an Experiment-to-Learning Ratio: the number of experiments run divided by the number of conclusive learnings generated over the same period. An ideal ratio is 1, meaning that every experiment run produces a conclusive, validated learning. A ratio significantly greater than 1 suggests that the team is running many experiments that are failing to produce conclusive results. This may indicate issues with hypothesis quality, test design, or statistical rigor, signaling a need for coaching and process improvement rather than simply a mandate to “run more tests”. For a mature organization, this ratio serves as a critical diagnostic tool for assessing the health and efficiency of its entire experimentation culture.
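The Experiment-to-Learning Ratio is a simple division, but it helps to make the diagnostic rule explicit. In this sketch, the 1.5 tolerance is a hypothetical cut-off chosen for illustration; each team would calibrate its own.

```python
def experiment_to_learning_ratio(experiments_run: int, conclusive_learnings: int) -> float:
    """Experiments run divided by conclusive, validated learnings in the same period."""
    if conclusive_learnings == 0:
        return float("inf")  # activity with no conclusive learning at all
    return experiments_run / conclusive_learnings


def diagnose(ratio: float, tolerance: float = 1.5) -> str:
    """Flag a ratio well above 1; `tolerance` is a hypothetical cut-off."""
    if ratio <= tolerance:
        return "healthy: most experiments end in a conclusive learning"
    return "review hypothesis quality, test design and statistical rigor"


ratio = experiment_to_learning_ratio(experiments_run=20, conclusive_learnings=8)
print(ratio, "->", diagnose(ratio))
```

Twenty experiments yielding eight conclusive learnings gives a ratio of 2.5, which this rule flags for a review of hypothesis quality and test design rather than a push for more tests.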

Section 2: The MVT Philosophy: De-Risking Marketing and Maximizing Insight

The engine that powers high-velocity experimentation is a fundamental philosophical shift in how marketing initiatives are conceived and executed. This shift is away from large-scale, high-risk “big bang” campaigns and toward a disciplined approach of small, focused, learning-oriented experiments. This philosophy is embodied in the concept of the Minimum Viable Test (MVT), a powerful tool for de-risking innovation and maximizing the rate of insight generation.

2.1. From Waterfall Campaigns to Agile Tests: The MVT Paradigm Shift

The Minimum Viable Test (MVT) stands as the direct antithesis to the traditional “waterfall” methodology of project management. The waterfall approach, which follows a rigid, linear sequence of stages—Planning, Design, Implementation, Testing, and Launch—is well-suited for predictable, frequently recurring projects where both the problem and the solution are clearly understood. However, it is fundamentally ill-equipped to handle the conditions of extreme uncertainty that define modern digital marketing, where customer preferences, channel effectiveness, and competitive dynamics are in constant flux.

Under these conditions, the most common outcome of a waterfall approach is the successful execution of a flawed plan. The campaign is delivered on time and within budget, with all assets beautifully created, yet it fails to generate the desired business results. This is because the waterfall model postpones all value delivery and learning until the very end of the project. The MVT paradigm inverts this model by embracing an agile, iterative, and incremental approach.

The MVT paradigm is predicated on the idea of “trial-and-error marketing, where the team that finds the errors the fastest wins”. The primary goal is to reduce uncertainty by getting early market feedback from customers and prospects, thereby minimizing the risk of investing significant time and resources into campaigns that people do not engage with or content they do not read.

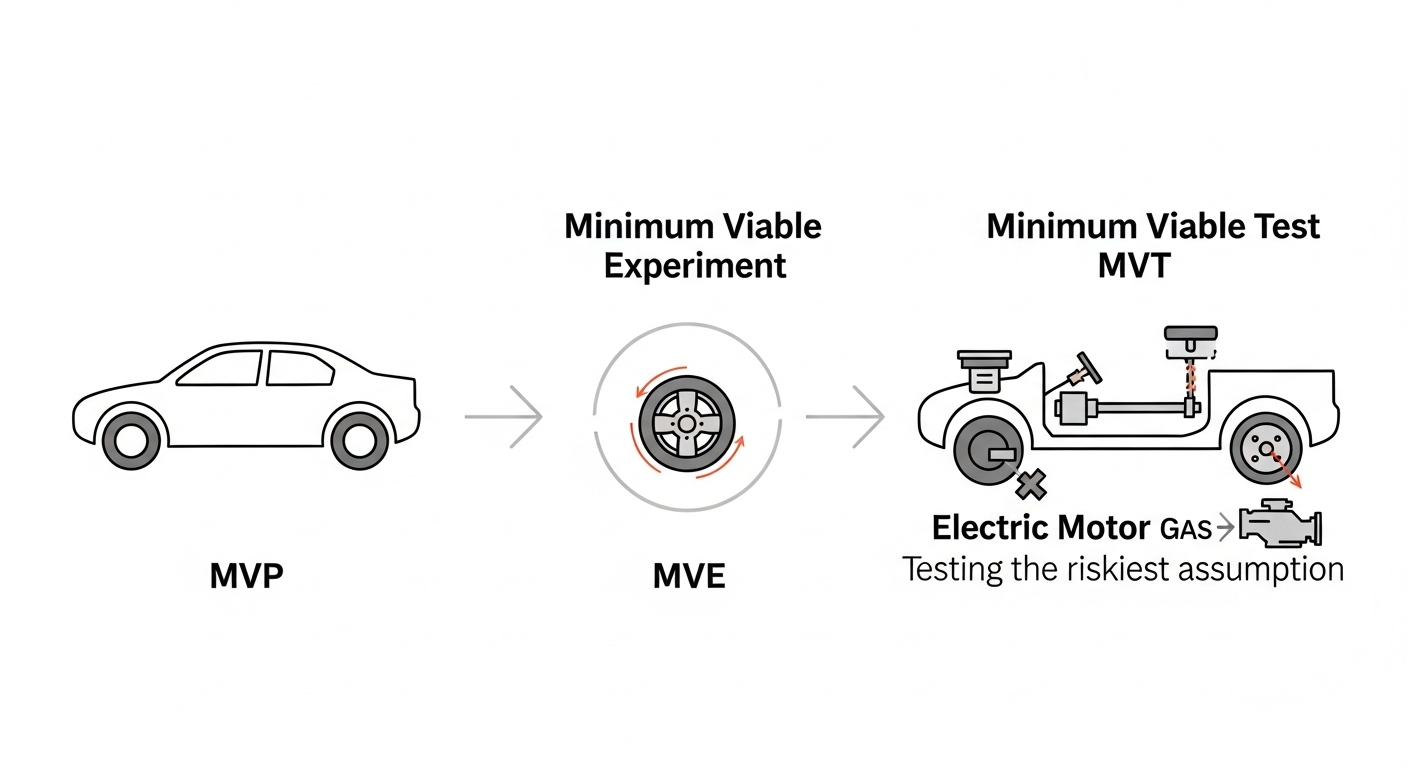

2.2. Distinguishing the “Minimums”: MVT vs. MVE vs. MVP

The lexicon of lean methodology is often fraught with confusion, particularly around the terms MVP, MVE, and MVT. Establishing a clear, precise vocabulary is essential for strategic clarity.

- Minimum Viable Product (MVP): An MVP is the smallest, most basic version of a product that can be built to deliver tangible value to an initial set of users. Its purpose is to gather feedback for future product development. It is a functional, usable product, albeit a simplified one.

- Minimum Viable Experiment (MVE): An MVE is defined as the smallest possible experiment that allows a team to validate a specific hypothesis. It is primarily a tool for generating internal feedback and learning for the team and product owner.

- Minimum Viable Test (MVT): An MVT is a highly focused test of a single, essential assumption—a hypothesis that must be true for the broader business idea to succeed. Crucially, an MVT does not attempt to look or feel like the final product; its sole purpose is to test the riskiest assumption in the most efficient way possible.

The distinction between these concepts is not merely semantic; it reflects a fundamental difference in strategic intent. An MVP is a tool for building to learn, requiring a certain level of development effort to create a product that delivers user value. In contrast, an MVT is a tool for learning before you build. The MVT process involves running a series of targeted tests to validate the most critical underlying assumptions of a business idea before any significant resources are committed to building an MVP.

This positions MVTs as a crucial precursor to MVPs within a lean innovation cycle. An excellent analogy clarifies this relationship: “In an MVP, you try to simulate the entire car. In an MVT, you are just testing whether the drivetrain is more powerful with an electric engine or a gas one”. The MVT isolates and tests the most critical component, the riskiest assumption, without the overhead of building the entire vehicle. It is a strategic de-risking instrument, while the MVP is a product development instrument.

2.3. The Anatomy of a Minimum Viable Test: From Riskiest Assumption to Actionable Data

The execution of a Minimum Viable Test follows a disciplined, scientific process designed to isolate variables and generate clear, actionable data. The process begins not with a solution, but with the foundational assumption upon which the entire idea rests.

- Identify the Core Promise: The first step is to articulate the core value proposition or “promise” that the proposed product, feature, or campaign intends to deliver to the user. This is the fundamental job-to-be-done that is being addressed.

- Isolate the Riskiest Assumption: The next, most critical step is to identify the single greatest assumption that, if proven false, would cause the entire initiative to fail. This is the linchpin of the idea. For the founders of Amazon, the riskiest assumption was not about logistics or web design; it was the fundamental belief that “people will want to buy books online”. For a new cohort-based course, the riskiest assumption might be that “people will find 10x more value in this format than in a self-directed course”.

- Devise a Focused Test: With the riskiest assumption identified, the team must devise a test that focuses exclusively on that hypothesis. It is crucial to resist the temptation to build out the entire system. If the riskiest assumption is about a user’s willingness to pay, the test must force them to demonstrate that willingness with their time or money—not simply ask them in a survey.

- Execute and Learn: The MVT is then executed to gather real-world data. The insights gained from this test—whether it validates or invalidates the core assumption—provide the necessary intelligence to inform the next strategic decision, which could be to pivot, to conduct another MVT on the next riskiest assumption, or, if the core hypothesis is validated, to proceed with building an MVP.
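The four steps can be captured as a small record that is filled in before and after the test. This is a sketch, not a prescribed template: the class, the pre-set `success_threshold`, and the decision rule (pivot on failure, otherwise test the next riskiest assumption until none remain, then build the MVP) are simplifying assumptions. The example reuses the cohort-based course assumption from above with hypothetical numbers.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    PIVOT = "pivot or rethink the idea"
    NEXT_MVT = "run an MVT on the next riskiest assumption"
    BUILD_MVP = "proceed to build an MVP"


@dataclass
class MinimumViableTest:
    core_promise: str          # step 1: the value the idea promises
    riskiest_assumption: str   # step 2: the belief that would sink the idea if false
    test_design: str           # step 3: how users must show commitment (time or money)
    success_threshold: float   # fixed before launch, e.g. a minimum paid-deposit rate
    observed: Optional[float] = None  # step 4: filled in after the test runs

    def decide(self, untested_assumptions: int) -> Decision:
        """Map the result to the next step (a deliberately simplified rule)."""
        if self.observed is None:
            raise ValueError("Run the test before making a decision.")
        if self.observed < self.success_threshold:
            return Decision.PIVOT
        if untested_assumptions > 0:
            return Decision.NEXT_MVT
        return Decision.BUILD_MVP


course_mvt = MinimumViableTest(
    core_promise="A cohort-based course that delivers far more value than self-study",
    riskiest_assumption="People will find 10x more value in this format than in a self-directed course",
    test_design="Pre-sale landing page that asks for a refundable deposit",
    success_threshold=0.02,  # hypothetical: 2% of visitors pay a deposit
)
course_mvt.observed = 0.035  # hypothetical result
print(course_mvt.decide(untested_assumptions=1).value)
```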

2.4. A Practitioner’s Toolkit: Low-Fidelity and High-Fidelity MVT Techniques

Marketers have a diverse toolkit of MVT techniques at their disposal, which can be broadly categorized by their fidelity—the degree to which they simulate the final user experience.

Low-Fidelity Techniques

These methods require minimal build effort and are primarily designed to gauge initial interest and validate demand.

- Smoke Tests / Fake Doors: This involves creating a landing page, an advertisement, or a button for a product or feature that does not yet exist. User interest is measured by the number of clicks, sign-ups for a waitlist, or attempts to purchase. This is a direct and powerful way to test demand before a single line of code is written.

- Explainer Videos: A short video can be produced to showcase the functionality and value proposition of a potential product. By promoting this video to a target audience and measuring engagement metrics, a team can effectively gauge interest and gather feedback on the concept.

- Email Campaigns: A new product concept or feature can be tested by sending a targeted email campaign to a segment of an existing user base. Engagement metrics such as open rates, click-through rates, and direct replies provide a strong signal of interest.

- Paper Prototypes: Using simple sketches, wireframes, or digital mockups, teams can test user flows and gather qualitative feedback on the usability and logic of a proposed solution at an absolute minimal cost.
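Smoke-test and fake-door results are proportions (sign-ups per visitor), so a raw rate from a small sample can mislead. The sketch below uses the Wilson score interval, a standard confidence interval for a proportion, and compares it against a demand threshold the team sets in advance. The 3% threshold and the traffic figures are hypothetical.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion; z = 1.96 gives roughly 95% confidence."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half_width = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return centre - half_width, centre + half_width


def smoke_test_verdict(signups: int, visitors: int, threshold: float) -> str:
    """Compare the interval with a demand threshold fixed before the test."""
    low, high = wilson_interval(signups, visitors)
    if low > threshold:
        return "demand above threshold"
    if high < threshold:
        return "demand below threshold"
    return "inconclusive: gather more traffic"


THRESHOLD = 0.03  # hypothetical: 3% of visitors must join the waitlist
print(wilson_interval(54, 1200), smoke_test_verdict(54, 1200, THRESHOLD))
print(wilson_interval(3, 60), smoke_test_verdict(3, 60, THRESHOLD))
```

With 54 sign-ups from 1,200 visitors, the interval runs from roughly 3.5% to 5.8%, entirely above the 3% bar, whereas 3 sign-ups from 60 visitors yields an interval (about 1.7% to 13.7%) too wide to decide either way.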

High-Fidelity Techniques

These methods involve a greater degree of interaction and are designed to simulate the core experience more closely, allowing for the validation of process and value delivery.

- Concierge MVP: In this approach, the service is delivered entirely manually to the first few customers. For example, a personalized styling service might begin with a stylist manually emailing recommendations to clients. This validates the value proposition and refines the process before any automation is built.

- Wizard of Oz MVP: This technique creates the illusion of a fully automated, sophisticated system, while in reality, all the back-end tasks are being performed by humans. This allows a team to test a complex user experience and gather data on user interactions without the significant upfront investment in technology.

- Piecemeal MVP: This involves assembling the core functionality of a product by integrating and stitching together various existing third-party tools and services. This approach allows for a faster time-to-market for a functional product, enabling the team to test the complete user journey with minimal custom development.

- Feature Flags: For existing products, feature flags allow a new feature to be deployed but only made visible to a small, controlled segment of users. This enables the team to conduct a rigorous A/B test on the feature’s impact on key metrics before deciding on a full rollout to the entire user base.
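Feature-flag tools handle rollout targeting for you; the sketch below only illustrates the underlying idea of deterministic percentage bucketing and is not the API of LaunchDarkly, Statsig, or any other product. Hashing the flag key together with the user ID gives each user a stable bucket, so the same user always sees the same experience, and different flags split users independently. The function names and the 10,000-bucket granularity are assumptions.

```python
import hashlib


def rollout_bucket(flag_key: str, user_id: str, buckets: int = 10_000) -> int:
    """Deterministically map a user to a bucket in [0, buckets) for one flag."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:15], 16) % buckets


def is_enabled(flag_key: str, user_id: str, rollout_percent: float) -> bool:
    """True if the user's bucket falls inside the first `rollout_percent` of 10,000 buckets."""
    return rollout_bucket(flag_key, user_id) < rollout_percent * 100


users = [f"user-{i}" for i in range(10_000)]
share = sum(is_enabled("new-checkout", u, rollout_percent=10) for u in users) / len(users)
print(f"share of users seeing the new checkout: {share:.1%}")
```

Across 10,000 synthetic user IDs, a 10% rollout enables the feature for close to 10% of them, and repeated calls for the same user always agree, which keeps the treatment and control groups stable for the duration of an A/B test.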

Section 3: The Symbiotic Relationship: How MVTs Fuel the Velocity Engine

The concepts of high experiment velocity and Minimum Viable Tests are not independent strategies; they are deeply intertwined components of a single, powerful growth engine. Adopting an MVT philosophy is the primary tactical mechanism that enables an organization to achieve and sustain the high experiment velocity necessary for rapid learning and adaptation. This symbiotic relationship is built on the principle that smaller, more focused bets lead to faster, more frequent learning cycles.

3.1. The Core Principle: Smaller Bets, Faster Cycles, More Learning

The most direct way to increase testing velocity is to reduce the size and complexity of the experiments themselves. This is the central tenet of the MVT approach. Smaller experiments, by their very nature, require significantly less time and fewer resources across every stage of the experimentation lifecycle, including research, ideation, design, and development. This reduction in overhead means that a greater number of experiments can be executed within a given period, which directly accelerates the Build-Measure-Learn feedback loop that is fundamental to lean methodology.

By prioritizing Minimum Viable Experiments (MVEs), defined as the smallest possible experiment required to validate a hypothesis, teams can begin testing immediately at the outset of a new project. This eliminates the long delays often associated with waiting for extensive research and development to be completed before any market feedback is gathered. This immediacy allows for a quicker impact on key metrics like conversion rates and facilitates the rapid accumulation of user insights, which in turn inform the next iteration of experiments. Evidence suggests that this approach can lead to a positive return on investment for clients within the first 12 weeks of a program, a timeline that is difficult to achieve with larger, more cumbersome testing methodologies.

Furthermore, internal data from experimentation agencies indicates that large, elaborate experiments are often no more likely to produce a winning result than smaller, more focused tweaks. In some cases, these larger experiments have even shown a slightly smaller average uplift than their more modest counterparts. This finding provides a strong justification for the MVT philosophy: optimizing for velocity through smaller, more frequent tests is not a compromise on quality but rather an efficient strategy for maximizing learning and impact over time.

3.2. Breaking Down Silos: How MVT Thinking Shortens the Ideation-to-Insight Loop

The traditional waterfall approach to marketing campaigns often creates functional silos and lengthy, sequential handoffs. A project moves from the strategy team to the creative team, then to the development team, and finally to the analytics team, with significant delays at each stage. The MVT philosophy inherently breaks down these barriers by forcing a more fluid, cross-functional mode of collaboration on a smaller, more manageable scale.

Executing an MVT, such as a simple landing page test, may require input from marketing, design, analytics, and perhaps development, but the scope is contained and the timeline is compressed. This micro-collaboration fosters a more integrated team dynamic, replacing the rigid, linear process with a rapid, cyclical one. The time elapsed from the initial formulation of an idea to the generation of validated learning is dramatically reduced.

This acceleration is further amplified by the modern experimentation technology stack. Integrated platforms that combine customer data collection, behavioral analysis, and experiment execution eliminate the friction and delays that occur when data must be manually moved and reconciled between disparate systems. When a team can seamlessly move from analyzing user behavior in a tool like Amplitude to designing and launching a test to address an observed pain point, the setup portion of the ideation-to-insight loop can shrink from weeks or months to days, leaving the test’s required run time as the main remaining constraint.

3.3. Balancing the Equation: Managing the Tension Between Test Quality and Quantity

A common and valid concern with pursuing high-velocity testing is the potential for a decline in the quality and strategic relevance of the experiments being run. A relentless focus on quantity can lead to a proliferation of trivial tests that, while easy to launch, generate little to no valuable insight. The MVT philosophy, when correctly applied, provides a natural counterbalance to this risk.

A well-conceived MVT is, by its very definition, a high-quality test. Its quality is derived not from its complexity or build effort, but from its strategic focus. The MVT process mandates that teams begin by identifying and targeting the single most critical, riskiest assumption underlying an initiative. Therefore, the objective is not simply to test trivial changes quickly, but to test the most important things as quickly and efficiently as possible. This aligns with the principle of prioritizing tests that are easy to implement but still allow for the validation of a meaningful, high-impact hypothesis.

However, maintaining this balance between speed and strategic relevance as a program scales requires more than just a philosophical commitment; it demands a structured, disciplined process. Without a formal system for evaluating and ranking test ideas, teams under pressure to increase their test count will inevitably gravitate toward the path of least resistance, which often leads to a backlog filled with low-impact experiments like button color changes. This is where the concept of velocity, as distinct from mere speed, becomes critical. Velocity requires direction, and in the context of experimentation, that direction is provided by a robust prioritization framework.

A prioritization framework, such as the ICE or PIE models, acts as the strategic rudder for the experimentation program. It forces teams to systematically evaluate each test idea against predefined criteria like potential business impact and importance before it is approved for development. This ensures that the increased tempo of testing is channeled toward the hypotheses that are most likely to drive meaningful business outcomes. In this way, the prioritization framework is the critical mechanism that transforms undirected speed into purposeful, strategic velocity, ensuring that the organization is not just moving fast, but moving fast in the right direction.

Section 4: Operationalizing High-Velocity Experimentation: A Strategic Framework

Transitioning from ad-hoc testing to a sustained, high-velocity experimentation program requires the implementation of a strategic framework that governs the entire lifecycle of an experiment. This framework must encompass a repeatable process, a rigorous prioritization methodology, a commitment to statistical certainty, and a modern technology stack. It is the operational blueprint that transforms the philosophy of rapid learning into a scalable, high-impact business function.

4.1. The End-to-End Experimentation Workflow: A Repeatable Process for Success

To scale experimentation effectively, the process cannot be left to individual interpretation. A structured, well-documented, and repeatable workflow is essential to ensure consistency, efficiency, and the systematic accumulation of knowledge across the organization. A mature experimentation workflow typically consists of the following six stages:

- Plan & Hypothesize: Every experimentation cycle begins with a clear plan that outlines the overarching objectives and the specific key performance indicators (KPIs) that will be used to measure success. From this plan, individual experiments are born. Each experiment must be rooted in a clear, testable, and data-driven hypothesis. Strong hypotheses are not based on guesswork; they are derived from a deep analysis of quantitative data from analytics platforms or qualitative insights from user behavior tools like heatmaps and session recordings.

- Prioritize: With a backlog of potential test ideas, the next crucial step is prioritization. Not all ideas are created equal, and a systematic approach is needed to rank them based on their potential impact and feasibility. This is where scoring frameworks like ICE or PIE are applied to ensure that the team’s limited resources are focused on the most promising hypotheses.

- Design & Build: Once an idea is prioritized, the variations for the test are designed and built. This can range in complexity from a simple copy change within an A/B testing tool’s visual editor to the development of a completely new landing page or user flow.

- Execute & QA: The experiment is then launched using the appropriate testing platform. This stage requires a meticulous quality assurance (QA) process to ensure that the test is functioning correctly, that traffic is being properly randomized and allocated, and that the tracking of goals and metrics is accurate.

- Analyze & Learn: The experiment runs until it reaches its predetermined sample size or duration; only then are the results evaluated for statistical significance. Once the test has concluded, the results are analyzed to determine whether the hypothesis was validated or invalidated. This is the stage where the clear definition of primary and secondary KPIs becomes critical for drawing accurate conclusions.

- Document & Iterate: The final, and perhaps most important, stage is the rigorous documentation of the experiment’s results and learnings, regardless of the outcome. A central, searchable repository of all past experiments—both wins and losses—becomes an invaluable organizational asset. These documented learnings then feed back into the first stage of the process, informing the next iteration of a feature or inspiring a new set of hypotheses.
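One way to keep the workflow repeatable is to encode the stages explicitly and store every finished experiment in a searchable structure. The sketch below is hypothetical: the stage names mirror the six stages listed above, and the in-memory list with tag search stands in for whatever repository (wiki, database, dedicated tool) a team actually uses. The example records are invented.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    PLAN_HYPOTHESIZE = 1
    PRIORITIZE = 2
    DESIGN_BUILD = 3
    EXECUTE_QA = 4
    ANALYZE_LEARN = 5
    DOCUMENT_ITERATE = 6

    def next(self) -> "Stage":
        """Advance one stage; Document & Iterate loops back to Plan & Hypothesize."""
        members = list(type(self))
        return members[(members.index(self) + 1) % len(members)]


@dataclass
class ExperimentRecord:
    hypothesis: str
    outcome: str                       # "win", "loss" or "inconclusive"
    learning: str
    tags: set[str] = field(default_factory=set)


def search(repository: list[ExperimentRecord], tag: str) -> list[ExperimentRecord]:
    """Return every past experiment with the tag, whatever its outcome."""
    return [record for record in repository if tag in record.tags]


repository = [
    ExperimentRecord("Trust badges raise checkout completion", "win",
                     "Security reassurance matters at payment", {"checkout", "trust"}),
    ExperimentRecord("Single-page checkout beats multi-step", "loss",
                     "Step count was not the main source of friction", {"checkout"}),
    ExperimentRecord("Video hero increases sign-ups", "inconclusive",
                     "Sample too small; rerun with a longer duration", {"homepage"}),
]

print(Stage.DOCUMENT_ITERATE.next())
for record in search(repository, "checkout"):
    print(record.outcome, "-", record.learning)
```

Keeping losses and inconclusive results in the same searchable store is the design point: a search for "checkout" returns the losing test alongside the winner, so the next hypothesis starts from everything already learned.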

4.2. The Art of Prioritization: Applying ICE and PIE Frameworks to Your Test Roadmap

Effective prioritization is the cornerstone of a high-velocity program, ensuring that the team’s efforts are consistently directed toward the opportunities that matter most. Two of the most widely adopted “minimum viable prioritization frameworks” in growth and marketing are the ICE and PIE models.

Framework 1: ICE (Impact, Confidence, Ease)

The ICE framework, popularized by growth marketing pioneer Sean Ellis, is a quick and simple method for scoring and ranking a backlog of test ideas. Each idea is scored on a scale of 1 to 10 for three criteria:

- Impact: How significant will the positive impact be if this idea is successful? This assesses the potential magnitude of the change on a key business metric.

- Confidence: How confident are we that this idea will produce the expected impact? This score should be based on the strength of the supporting data, such as analytics, user research, or results from previous similar tests.

- Ease: How much effort and resources will be required to implement this test? A higher score indicates greater ease (i.e., less effort).

The final score is calculated by multiplying the three values: $\text{ICE} = \text{Impact} \times \text{Confidence} \times \text{Ease}$.
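Applied to a hypothetical backlog, ICE scoring is a few lines of Python. The ideas and scores below are invented for illustration; the code only checks the 1 to 10 range and multiplies the three scores, as described above.

```python
from dataclasses import dataclass


@dataclass
class BacklogIdea:
    name: str
    impact: int      # 1-10
    confidence: int  # 1-10
    ease: int        # 1-10, higher means less effort

    def ice(self) -> int:
        """ICE score = Impact x Confidence x Ease."""
        for score in (self.impact, self.confidence, self.ease):
            if not 1 <= score <= 10:
                raise ValueError(f"{self.name}: scores must be between 1 and 10")
        return self.impact * self.confidence * self.ease


backlog = [
    BacklogIdea("Rewrite pricing page headline", impact=6, confidence=7, ease=9),
    BacklogIdea("Redesign checkout flow", impact=9, confidence=6, ease=3),
    BacklogIdea("Change CTA button color", impact=2, confidence=4, ease=10),
]

for idea in sorted(backlog, key=lambda i: i.ice(), reverse=True):
    print(f"{idea.ice():>4}  {idea.name}")
```

Because the scores are multiplied, one weak criterion pulls the total down sharply: the button color test is the easiest to run, but its low impact and confidence leave it last (80, against 378 for the headline rewrite).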

Framework 2: PIE (Potential, Importance, Ease)

The PIE framework, developed by Chris Goward of Widerfunnel, was originally designed to prioritize which pages or areas of a website to focus optimization efforts on. It uses three criteria, also typically scored on a 1-10 scale:

- Potential: How much room for improvement exists on the page(s) in question? This encourages focusing on the worst-performing pages where the potential for uplift is greatest.

- Importance: How valuable is the traffic to this page? This is determined by factors like traffic volume, conversion rates, and the cost of acquiring the traffic. A page with high traffic and high value is more important to optimize than a low-traffic page.

- Ease: How difficult will it be to implement a test on this page? This considers both technical complexity and potential organizational or political barriers.

The final score is typically calculated as the average of the three values: $\text{PIE} = (\text{Potential} + \text{Importance} + \text{Ease}) / 3$.
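The same approach works for PIE, scoring pages rather than individual ideas. The pages and scores below are hypothetical.

```python
def pie_score(potential: int, importance: int, ease: int) -> float:
    """PIE score = (Potential + Importance + Ease) / 3, each on a 1-10 scale."""
    return (potential + importance + ease) / 3


# Hypothetical (potential, importance, ease) scores for three pages.
pages = {
    "checkout": (7, 10, 4),
    "product detail": (8, 8, 7),
    "blog index": (6, 2, 9),
}

ranked_pages = sorted(pages.items(), key=lambda item: pie_score(*item[1]), reverse=True)
for page, scores in ranked_pages:
    print(f"{pie_score(*scores):.1f}  {page}")
```

Averaging is more forgiving than multiplication, but trade-offs still show: the checkout page has the highest Importance yet ranks second (7.0) because its low Ease offsets it, while the product detail page comes first (about 7.7).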

While similar, these frameworks have distinct applications. The ICE model is generally better suited for the rapid prioritization of a list of specific test ideas or features. The PIE model excels at the more strategic task of identifying which pages or user flows should be the primary focus of the entire optimization program. The choice of framework should align with the specific prioritization challenge the team is facing.

| Framework | Components | Calculation | Best For | Key Considerations |
|---|---|---|---|---|
| ICE | Impact, Confidence, Ease | Impact × Confidence × Ease | Prioritizing a backlog of specific test ideas or features. Quick, “good enough” estimations. | Can be subjective; requires team alignment on scoring. May favor “low-hanging fruit.” |
| PIE | Potential, Importance, Ease | (Potential + Importance + Ease) / 3 | Prioritizing which pages or user flows to focus optimization efforts on. | Importance factor helps ground decisions in business value (traffic, cost). |

4.3. The Science of Certainty: Ensuring Statistical Rigor and Avoiding False Positives

As the volume of experiments increases, so does the risk of making decisions based on statistically flawed results. A commitment to statistical rigor is non-negotiable for a high-impact program. Several key principles must be understood and enforced.

- Statistical Significance and p-value: Statistical significance indicates whether the observed difference between a control and a variation is larger than random chance alone would plausibly produce. It is quantified by the p-value: the probability of observing a difference at least as large as the one measured if the change actually had no effect. The conventional threshold for declaring a result statistically significant in conversion optimization is a 95% confidence level, which corresponds to a p-value of less than 0.05 ($p < 0.05$). This means that if the variation truly had no effect, a difference this large would appear less than 5% of the time; it does not mean there is a 95% probability that the variation is better.

- Sample Size and Test Duration: For a test result to be valid, it must be based on a sufficiently large sample size. Furthermore, the test must run for an adequate duration, typically at least one full business cycle (e.g., one or two weeks), to account for natural variations in user behavior between weekdays and weekends.

- Avoiding Common Pitfalls and False Positives: A false positive (a Type I error) occurs when a test declares a winning variation that, in reality, has no positive effect. High-velocity programs must be particularly vigilant against the common causes of false positives:

  - The Peeking Problem: One of the most common mistakes is to continuously monitor a test’s results and stop it as soon as a variation appears to be winning. This practice dramatically increases the likelihood of a false positive, as it capitalizes on random fluctuations in the data. A predetermined sample size or test duration must be established and adhered to.

  - The Multiple Comparisons Problem: The probability of encountering a false positive increases with the number of comparisons being made. This occurs when testing multiple variations against a control (e.g., an A/B/C/D test) or when analyzing numerous segments after a test has concluded. Each comparison carries its own risk of a Type I error. To mitigate this, statistical corrections such as the Bonferroni correction can be applied. This method adjusts the required significance level by dividing the original alpha (e.g., 0.05) by the number of comparisons, making the threshold for significance more stringent and reducing the overall risk of a false positive.
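The sketch below ties these principles together with textbook formulas based on the normal approximation: a per-arm sample size for comparing two conversion rates (fixed before launch, which is the guard against peeking), a pooled two-proportion z-test for the p-value, and the Bonferroni adjustment. It uses only Python’s standard library (`statistics.NormalDist`); the conversion figures are hypothetical, and commercial platforms may use different statistical engines.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def sample_size_per_arm(p_control: float, p_variant: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in each arm of a two-sided test of two proportions."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_beta = STD_NORMAL.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_control - p_variant) ** 2)


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def bonferroni_alpha(alpha: float, comparisons: int) -> float:
    """Per-comparison significance level under the Bonferroni correction."""
    return alpha / comparisons


# Fix the sample size BEFORE launch: detecting a lift from 10% to 12% conversion.
n = sample_size_per_arm(0.10, 0.12)

# Only after the planned sample is reached, compare one variant with the control.
p = two_proportion_p_value(conv_a=1000, n_a=10_000, conv_b=1100, n_b=10_000)
print(f"required per arm: {n}, p-value: {p:.4f}")

# An A/B/C/D test has three variant-vs-control comparisons.
threshold = bonferroni_alpha(0.05, comparisons=3)
print(f"significant at 0.05? {p < 0.05}; after Bonferroni ({threshold:.4f})? {p < threshold}")
```

Detecting a move from 10% to 12% needs on the order of 3,800 visitors per arm at 80% power. The variant’s p-value of about 0.021 clears the usual 0.05 bar, but not the Bonferroni threshold of about 0.0167 that applies when three variants are each compared with the control, so in an A/B/C/D test it would not be declared a winner.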

4.4. The Modern Experimentation Stack: Tools for Testing, Analytics, and Personalization

Sustaining high experiment velocity is impossible without a robust and integrated technology stack. The modern experimentation stack consists of several key categories of tools that work in concert to facilitate the rapid testing and learning cycle.

- A/B Testing & Experimentation Platforms: These are the core engines of the program. Tools such as VWO, Optimizely, AB Tasty, Adobe Target, Convert Experiences, and Statsig provide the functionality to create, manage, deploy, and analyze experiments across web and mobile platforms.

- Product & Behavioral Analytics: These platforms, including Amplitude and Mixpanel, provide the deep, granular insights into user behavior that are essential for forming strong, data-driven hypotheses. They help teams understand what users are doing and where the opportunities for optimization lie.

- Personalization Engines: Many modern experimentation platforms include advanced personalization capabilities. These tools allow teams to move beyond one-size-fits-all tests and deliver tailored experiences to specific, high-value user segments based on their attributes and behaviors.

- Feature Flagging & Management: For organizations with a strong product-led growth motion, tools like LaunchDarkly and Statsig are critical. They enable server-side testing and the controlled rollout of new features, allowing for rigorous experimentation on core product functionality before it is released to the entire user base.
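
Server-side experiments and controlled rollouts generally depend on assigning each user to a variant deterministically, so that the same user sees the same experience on every request. The sketch below shows one common approach: hashing a user ID together with an experiment key into a stable bucket. It is a hypothetical illustration and does not reproduce the internal algorithm of LaunchDarkly, Statsig, or any other vendor; the keys, user IDs, and 10,000-bucket granularity are assumptions.

```python
import hashlib

BUCKETS = 10_000  # hypothetical granularity: one bucket = 0.01% of traffic


def bucket(user_id: str, experiment_key: str) -> int:
    """Map a user to a stable bucket in [0, BUCKETS) for one experiment or flag."""
    # Salting with the experiment key gives each experiment its own shuffle.
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode("utf-8")).hexdigest()
    # Interpret the first 15 hex digits (60 bits) as an integer, then fold into range.
    return int(digest[:15], 16) % BUCKETS


def in_rollout(user_id: str, flag_key: str, rollout_percent: float) -> bool:
    """True if the user falls inside a percentage rollout of a feature flag."""
    return bucket(user_id, flag_key) < rollout_percent / 100 * BUCKETS


def assign_variant(user_id: str, experiment_key: str, variants: list[str]) -> str:
    """Even traffic split: the same user and key always yield the same variant."""
    return variants[bucket(user_id, experiment_key) * len(variants) // BUCKETS]


if __name__ == "__main__":
    variants = ["control", "new_checkout"]  # hypothetical variant names
    for uid in ["user-1", "user-2", "user-3"]:
        print(uid,
              assign_variant(uid, "checkout-test", variants),
              in_rollout(uid, "new-search", rollout_percent=10))
```

Salting the hash with the experiment key keeps assignments roughly independent across concurrent experiments, and raising `rollout_percent` only adds users to a rollout without reshuffling those already included.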

The ultimate goal is to create an integrated ecosystem where data flows seamlessly from analytics platforms (which generate insights) to experimentation platforms (which test hypotheses), creating a virtuous and rapid cycle of continuous improvement.

Section 5: The Human Element: Cultivating a World-Class Experimentation Culture

While process and technology provide the necessary infrastructure for high-velocity experimentation, they are insufficient on their own. The ultimate determinant of a program’s success is the organization’s culture. A world-class experimentation culture is one that is deeply ingrained in the company’s values and daily operations, empowering teams to be curious, data-informed, and resilient. It is this human element that transforms a mechanical testing process into a dynamic engine of innovation and growth.

5.1. Leadership’s Role: Championing a Data-Informed, Hypothesis-Driven Mindset

The foundation of an experimentation culture is built from the top down. Executive leadership plays an indispensable role in setting the tone and establishing the strategic importance of experimentation. Leaders must actively champion a shift away from decisions based on seniority, opinion, or intuition—the “Highest Paid Person’s Opinion” (HiPPO)—and toward a data-informed, hypothesis-driven mindset. This involves consistently replacing the phrase “because I said so” with “because the evidence shows” in decision-making forums.

Effective leaders in an experimentation culture value testing speed over the pursuit of perfect execution, recognizing that rapid learning is more valuable than flawless delivery of an untested idea. They demonstrate this commitment by allocating sufficient budget and resources to the program, actively participating in experiment reviews, and publicly sharing the results of both successful and failed tests. When leaders themselves model this behavior—proposing hypotheses, running experiments, and embracing the learnings—they create a powerful permission structure that encourages innovation and risk-taking at every level of the organization.

5.2. Fostering Psychological Safety: Normalizing Failure and Rewarding Learning

Perhaps the single greatest cultural impediment to a thriving experimentation program is the fear of failure. In an environment where teams are penalized for experiments that do not produce a positive lift, they will naturally become risk-averse. They will gravitate toward safe, incremental tests with a high probability of a small win, while avoiding the bolder, more ambitious experiments that have the potential to unlock breakthrough growth.

To counteract this, the organization must fundamentally reframe the concept of a “failed” experiment. A well-designed test that invalidates its hypothesis is not a failure; it is a successful learning opportunity. It provides valuable evidence about what does not work, challenging incorrect assumptions and preventing the company from investing significant resources in a flawed strategy. This creates an environment of psychological safety, where teams feel secure in exploring new ideas without the fear of negative repercussions.

This mindset must be reinforced through the organization’s incentive and recognition systems. The program should celebrate and reward teams for running well-structured experiments and generating critical learnings, not just for achieving “wins”. When a team is recognized for a “failed” test that saved the company from a costly mistake, it sends a powerful message that the true goal of the program is knowledge acquisition, not just short-term metric optimization.

5.3. Structuring for Success: Centralized, Decentralized, and Hybrid Team Models

The organizational structure of the experimentation function has a direct impact on its velocity, quality, and ability to scale. There are three primary models, each with its own set of advantages and disadvantages.

- Centralized (Center of Excellence): In this model, a single, dedicated team of experts (e.g., data scientists, CRO leads, developers) is responsible for running all experiments for the entire organization. This structure ensures a high degree of statistical rigor, consistency, and quality. However, as the demand for testing grows, the central team can easily become a bottleneck, slowing down the overall velocity of the program.

- Decentralized (Democratized): This model empowers individual product and marketing teams to run their own experiments independently. It maximizes autonomy, ownership, and testing velocity, as teams do not have to wait in a queue for a central resource. The primary risk of this approach is a potential decline in the quality and consistency of tests, as individual teams may lack deep expertise in experimental design and statistical analysis.

- Hybrid (Federated): The hybrid model seeks to combine the strengths of the other two. It consists of a small central team that acts as a governing body, providing the necessary tools, standardized processes, training, and expert consultation. This central hub then supports embedded experimentation specialists or trained “champions” within the various business units who help execute tests. This structure often represents the optimal balance for scaling a program, as it promotes high velocity and autonomy while maintaining a high standard of quality and rigor.

Regardless of the specific model chosen, effective experimentation relies on the collaboration of diverse skill sets. A well-composed testing team, or “squad,” typically includes a product or marketing manager, an analyst or data scientist, and a designer or developer, ensuring that each experiment is informed by a variety of perspectives.

5.4. Democratizing Data: Empowering Teams with Autonomy and the Right Tools

To achieve and sustain high velocity, teams on the front lines must be empowered with the autonomy and resources to act quickly on their ideas. This requires a concerted effort to democratize access to data and testing tools, cutting through bureaucratic red tape that can stifle innovation.

Practical steps toward democratization include providing teams with pre-approved, discretionary budgets for testing, which allows them to launch experiments without a lengthy approval process. It also involves investing in comprehensive training programs to build foundational skills in hypothesis formulation, experiment design, and results analysis across the marketing and product organizations.

Furthermore, creating standardized assets can significantly lower the barrier to entry and accelerate the testing process. A company-wide experiment template, for example, can simplify the process of proposing a test by ensuring that all necessary components—hypothesis, success metrics, target audience—are clearly defined upfront. Similarly, building a central, easily searchable library or database of all past experiments is crucial. This repository of institutional knowledge prevents teams from re-running tests that have already been done and allows them to build upon the learnings of others, creating a compounding effect on the organization’s collective intelligence.
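
A company-wide template and a searchable library can start as simply as a shared record type plus a query over past entries. The sketch below is hypothetical: the `ExperimentRecord` and `ExperimentLibrary` classes, their field names, and the keyword search are illustrative assumptions rather than the schema of any particular tool.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ExperimentRecord:
    """One entry in a hypothetical experiment template / library."""
    name: str
    hypothesis: str        # "If we <change>, then <metric> will <move>, because <reason>."
    primary_metric: str    # the single success metric agreed before launch
    target_audience: str
    tags: list[str] = field(default_factory=list)
    outcome: Optional[str] = None  # e.g. "win", "loss", "inconclusive"; None while running
    learning: str = ""             # what the team learned, regardless of outcome


class ExperimentLibrary:
    """In-memory stand-in for a central, searchable repository of past tests."""

    def __init__(self) -> None:
        self._records: list[ExperimentRecord] = []

    def add(self, record: ExperimentRecord) -> None:
        # Reject proposals that leave required template fields empty.
        for attr in ("hypothesis", "primary_metric", "target_audience"):
            if not getattr(record, attr).strip():
                raise ValueError(f"{record.name}: missing {attr}")
        self._records.append(record)

    def search(self, keyword: str) -> list[ExperimentRecord]:
        """Case-insensitive keyword match across name, hypothesis, learning, and tags."""
        kw = keyword.lower()
        return [
            r for r in self._records
            if kw in r.name.lower()
            or kw in r.hypothesis.lower()
            or kw in r.learning.lower()
            or any(kw in t.lower() for t in r.tags)
        ]


if __name__ == "__main__":
    library = ExperimentLibrary()
    library.add(ExperimentRecord(
        name="Grayscale testimonial logos",
        hypothesis="If testimonial logos are grayscale, form submissions will rise, "
                   "because color draws attention away from the form.",
        primary_metric="form_submissions",
        target_audience="lead-gen page visitors",
        tags=["cro", "lead-gen"],
    ))
    print([r.name for r in library.search("logos")])
```

Validating required fields at submission time is what turns the template from a suggestion into a gate; a production repository would add persistence and richer search, which this sketch deliberately omits.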

Section 6: High-Velocity in Action: Lessons from Industry Leaders

The principles of high-velocity experimentation and Minimum Viable Tests are not merely theoretical constructs; they are the proven, practical foundations upon which many of the world’s leading digital companies have built their growth engines. By examining the cultures and specific tactics of these industry leaders, the abstract concepts of velocity and MVT can be grounded in tangible, real-world examples.

6.1. The “Always-On” Approach: Deconstructing the Experimentation Cultures of Booking.com and Netflix

Companies like Booking.com and Netflix represent the pinnacle of high-velocity experimentation culture. For these organizations, testing is not a periodic activity or the responsibility of a single department; it is the fundamental, “always-on” methodology for all product and marketing development. Booking.com, for example, is reported to run more than 24,000 tests annually, with as many as 1,000 experiments running concurrently at any given time.

The key to this extraordinary scale is the radical democratization and decentralization of the experimentation process. At these companies, any employee with a well-formed hypothesis is empowered to design and launch a test. This approach is supported by a robust internal platform and a deeply ingrained culture that values data-driven decision-making above all else. This “always-on” philosophy ensures that there are no sacred cows; every feature, every page, and every user flow is considered a candidate for continuous optimization. This maximizes the number of learning opportunities, ensuring that the user experience is constantly being refined and improved based on real customer behavior.

6.2. Channel-Specific Applications: High-Impact A/B Testing Case Studies

The power of the MVT approach is evident in its application across a wide range of digital marketing channels. By focusing on small, high-impact changes, teams can generate significant results with minimal investment.

- Conversion Rate Optimization (CRO): The world of CRO is rich with examples of successful MVTs. The travel company Going hypothesized that the wording of its call-to-action (CTA) button was a critical lever for premium trial sign-ups. By running a simple A/B test comparing “Start My Trial” to “Claim My Free Trial,” they were able to double their premium trial starts, achieving a 104% month-over-month increase. Similarly, the project management software company WorkZone identified that colorful customer testimonial logos on their lead generation page might be distracting users from the primary goal of filling out the form. They tested a variation where the logos were converted to black and white, a simple change that resulted in a projected 34% increase in form submissions. These cases exemplify the MVT principle: isolate a single, critical variable and test it efficiently.

- Email Marketing: Email marketing provides a fertile ground for high-velocity, low-cost experimentation. Case studies from brands like PetLab Co demonstrate the impact of testing variables such as the inclusion of the brand’s name in the subject line and the optimization of send times, both of which were found to have a huge impact on engagement and revenue. Other tests have shown that using emojis in subject lines can increase open rates by up to 10% for some brands, while personalizing subject lines can yield a 1% to 5% lift. These small, iterative tests allow marketers to quickly learn their audience’s preferences and continuously refine their communication strategy for maximum impact.

- Paid Media: In the realm of paid advertising, MVTs are crucial for optimizing ad spend and maximizing ROI. The social media management tool AdEspresso ran a simple test on its Facebook ad copy. Version A used a direct, benefit-oriented message: “LIKE us for pro tips…” while Version B was more committal: “Get a daily tip…” The result was stark: Version A generated 70 Facebook likes, while Version B generated zero. This simple MVT provided a clear, decisive lesson about audience messaging preferences at minimal cost, preventing the company from wasting a larger budget on an ineffective ad campaign.
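
Results like these are usually reported as a relative lift over the control. The sketch below computes that figure together with a 95% confidence interval for the absolute difference in conversion rates, using an unpooled normal (Wald) approximation. The function name and the example counts are hypothetical and are not the data from the case studies above.

```python
from math import sqrt
from statistics import NormalDist


def lift_with_ci(conv_control: int, n_control: int,
                 conv_variant: int, n_variant: int,
                 confidence: float = 0.95) -> tuple[float, float, float]:
    """Return (relative_lift, ci_low, ci_high).

    relative_lift is (variant_rate - control_rate) / control_rate, so 1.04 means +104%.
    The interval is for the absolute rate difference (variant - control), using the
    unpooled Wald approximation, which assumes reasonably large samples.
    """
    rate_c = conv_control / n_control
    rate_v = conv_variant / n_variant
    diff = rate_v - rate_c
    std_err = sqrt(rate_c * (1 - rate_c) / n_control + rate_v * (1 - rate_v) / n_variant)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return diff / rate_c, diff - z * std_err, diff + z * std_err


if __name__ == "__main__":
    # Hypothetical counts: 200 vs 408 conversions on 10,000 visitors per arm.
    lift, low, high = lift_with_ci(200, 10_000, 408, 10_000)
    print(f"relative lift: {lift:+.0%}, 95% CI for the difference: [{low:.4f}, {high:.4f}]")
```

An interval that excludes zero roughly corresponds to a significant result at the chosen confidence level; an interval that straddles zero signals that the observed lift may be noise, however large the percentage looks.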

6.3. Common Pitfalls and How to Overcome Them: A Diagnostic for Stalled Programs

Despite the clear benefits, many organizations find that their experimentation programs fail to gain momentum or stall out after an initial period of enthusiasm. Diagnosing and addressing these common challenges is key to building a sustainable, high-velocity program.

- Resource Constraints: A frequent complaint is the lack of sufficient traffic, budget, or team size to run a high volume of tests. The solution lies in ruthless prioritization. By using a framework like PIE, teams can focus their limited resources on the highest-value pages where the potential for impact is greatest. Starting with small, high-impact MVTs can generate early wins and demonstrate ROI, which is the most effective way to secure additional resources and buy-in.

- Lack of a Common Language or Framework: In many organizations, particularly larger ones, different teams operate in silos, using their own disparate tools and processes for testing. This leads to a lack of integration and no holistic view of the overall experimentation effort, making it impossible to coordinate efforts or share learnings effectively. The remedy is to establish and enforce a single, standardized end-to-end workflow, a common prioritization model, and a centralized repository for all experiment results.

- Dynamic and Unpredictable Environment: The digital marketing landscape is characterized by rapid change, with new platforms, shifting trends, and unpredictable user behavior. Some teams view this volatility as a barrier to effective testing. However, a mature perspective recognizes that a high-velocity experimentation program is not a victim of this uncertainty, but rather the most effective solution to it. Rapid, iterative testing is precisely the mechanism that allows an organization to navigate and adapt to a constantly changing environment.

- Analysis Paralysis: A common bottleneck occurs when teams feel obligated to conduct a deep-dive analysis for every single experiment, no matter how small. This can significantly slow down the learning cycle. The solution is to develop clear guidelines that differentiate between tests that require extensive post-hoc segmentation and analysis, and simple MVTs where the primary goal is a quick, directional learning. Not every test warrants the same level of analytical rigor.
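
The ruthless prioritization described under Resource Constraints can be sketched as a small scoring routine. This sketch assumes the common convention of rating Potential, Importance, and Ease on a 1-10 scale and averaging them; some teams weight or multiply the scores instead, and teams using ICE would swap in Impact, Confidence, and Ease. The page names and ratings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A page or test idea rated on the PIE dimensions (each 1-10)."""
    name: str
    potential: int   # how much room for improvement the page has
    importance: int  # how valuable the page's traffic is
    ease: int        # how easy the test is to build and launch

    def pie_score(self) -> float:
        for value in (self.potential, self.importance, self.ease):
            if not 1 <= value <= 10:
                raise ValueError(f"{self.name}: ratings must be between 1 and 10")
        return (self.potential + self.importance + self.ease) / 3


def prioritize(candidates: list[Candidate]) -> list[tuple[str, float]]:
    """Return (name, score) pairs, highest PIE score first."""
    ranked = sorted(candidates, key=lambda c: c.pie_score(), reverse=True)
    return [(c.name, round(c.pie_score(), 2)) for c in ranked]


if __name__ == "__main__":
    backlog = [  # hypothetical ratings
        Candidate("checkout page", potential=8, importance=9, ease=5),
        Candidate("blog sidebar", potential=6, importance=3, ease=9),
        Candidate("pricing page", potential=7, importance=8, ease=8),
    ]
    for name, score in prioritize(backlog):
        print(f"{score:5.2f}  {name}")
```

Keeping the ratings in one shared structure is what makes the model a common language: every team's ideas are scored on the same scale and can be compared directly.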

Section 7: The Next Frontier: AI-Powered Experimentation

As organizations continue to build and refine their experimentation capabilities, the next significant leap in velocity and impact is being driven by the integration of Artificial Intelligence (AI) and machine learning. AI is poised to revolutionize the field by automating complex processes, enabling personalization at an unprecedented scale, and fundamentally reshaping the role of the human marketer in the experimentation lifecycle.

7.1. From Manual A/B Tests to Automated Optimization

Traditionally, A/B testing has been a largely manual process, requiring significant human effort in hypothesis generation, variation creation, and results analysis. AI is now streamlining and automating many of these time-consuming aspects, thereby enabling a dramatic increase in potential experiment velocity.

AI-powered tools can analyze vast amounts of user interaction data to identify patterns and suggest high-potential optimization ideas, effectively automating the hypothesis generation phase. Platforms like VWO’s Copilot can then use generative AI to automatically create multiple variations of copy and design based on a simple input, such as a webpage URL. Furthermore, adaptive algorithms such as multi-armed bandits can analyze test data in real time and shift traffic toward the variation most likely to win, allowing marketers to make faster decisions and implement changes without waiting for lengthy test cycles to conclude. Because these methods are built to be monitored continuously, they do not carry the same peeking risk as stopping a fixed-sample A/B test early, though they are designed to maximize conversions during the test rather than to measure precisely how much better the winner is. This automation of the mechanical aspects of testing frees up marketing teams to focus on higher-level strategic decisions rather than manual execution.
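
One family of algorithms behind this kind of real-time allocation is the multi-armed bandit. The sketch below uses Thompson sampling with Beta-Bernoulli posteriors, a standard textbook approach; it is an illustrative assumption, not a description of how VWO or any other named platform works, and the conversion counts are hypothetical.

```python
import random


def probability_best(successes: list[int], trials: list[int],
                     draws: int = 20_000, seed: int = 7) -> list[float]:
    """Monte Carlo estimate of P(arm is best) under Beta(1 + s, 1 + n - s) posteriors."""
    rng = random.Random(seed)
    wins = [0] * len(successes)
    for _ in range(draws):
        samples = [rng.betavariate(1 + s, 1 + n - s) for s, n in zip(successes, trials)]
        wins[samples.index(max(samples))] += 1
    return [w / draws for w in wins]


def choose_arm(successes: list[int], trials: list[int], rng: random.Random) -> int:
    """Thompson sampling allocation: serve the arm whose posterior draw is highest."""
    samples = [rng.betavariate(1 + s, 1 + n - s) for s, n in zip(successes, trials)]
    return samples.index(max(samples))


if __name__ == "__main__":
    # Hypothetical running totals for a control and two variants.
    conversions = [120, 150, 118]
    visitors = [2_400, 2_450, 2_390]
    print("P(best):", [round(p, 3) for p in probability_best(conversions, visitors)])
    rng = random.Random(42)
    served = [choose_arm(conversions, visitors, rng) for _ in range(1_000)]
    print("share of next 1,000 visitors per arm:",
          [served.count(i) / len(served) for i in range(len(visitors))])
```

Each visitor is routed by a single posterior draw, so arms that look better receive more traffic while weaker arms still get occasional exposure in case early data misled the model. The trade-off is less precise measurement of the losing arms.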

7.2. AI-Driven Personalization: Tailoring Experiences at the Individual Level

The most profound impact of AI on experimentation is the shift from segment-based optimization to dynamic, one-to-one personalization. Traditional A/B testing seeks to find a single “winning” variation that performs best for the average user in a broad audience segment. AI transcends this limitation by enabling the continuous, automated optimization of the user experience for each individual.

Instead of deploying a static test, AI-driven systems can dynamically adjust content, product recommendations, offers, and messaging for every user based on their real-time behavior, historical data, and contextual signals. This is the principle behind Amazon’s powerful recommendation engine, which analyzes each customer’s browsing and purchase history to surface highly relevant product suggestions, and Netflix’s content algorithm, which curates a personalized homepage for every viewer. This represents a paradigm shift from conducting discrete experiments with a clear start and end point to a state of continuous, algorithmic optimization where every user interaction is a data point that refines the model and improves the subsequent experience.

7.3. Future Implications: How AI Will Reshape the Role of the Growth Marketer

The rise of AI-powered experimentation will not make the growth marketer obsolete; rather, it will elevate their role from that of a “test operator” to a “strategy and hypothesis curator.” As AI becomes increasingly proficient at the tactical execution of experiments—creating variations, allocating traffic, and analyzing results—the core value and competitive advantage of the human marketer will shift upstream to more strategic functions.

While AI can automate the “how” of testing, it still requires human intelligence to define the “what” and the “why.” The marketer’s primary responsibility will no longer be the manual setup and monitoring of A/B tests. Instead, their value will be derived from their deep qualitative understanding of the customer, their creative problem-solving abilities, and their capacity to formulate bold, business-critical hypotheses that define the strategic “playground” within which the AI will operate.

In this new paradigm, the growth marketer becomes the architect of the experimentation program. They will be responsible for identifying the most important business problems to solve, curating the high-quality, clean data that the AI models need to function effectively, and providing the critical human oversight to interpret the AI’s outputs and ensure they align with the brand’s strategic goals. The marketer’s focus will shift from the mechanics of testing to the art and science of asking the right questions, thereby guiding the powerful AI engine toward the most valuable opportunities for growth.

Section 8: Strategic Recommendations and Implementation Roadmap

Building a high-velocity experimentation engine is a strategic transformation, not a short-term project. It requires a deliberate, phased approach that systematically builds capabilities in people, process, and technology. This section provides an actionable roadmap for organizations committed to embedding rapid, data-driven experimentation into their core operational DNA.

8.1. A Phased Approach to Building Your Velocity Engine

A successful implementation follows a logical progression from foundational setup to scalable optimization. This journey can be structured into four distinct phases.

- Phase 1: Foundation (Months 1-3): The initial phase is focused on establishing the essential building blocks and securing organizational alignment.

Action Items: Secure explicit buy-in and a clear mandate from executive leadership. Assemble a core, cross-functional “founding team” comprising members from marketing, analytics, and product/engineering. Select and standardize a single prioritization framework (ICE or PIE) for evaluating all test ideas. Launch the first 1-3 high-impact Minimum Viable Tests on the company’s most critical pages (e.g., homepage, primary lead generation form, or checkout funnel).

Goal: The primary objective of this phase is to demonstrate early, tangible wins to build momentum and validate the value of the program to the wider organization.

- Phase 2: Standardization (Months 4-9): With initial successes demonstrated, the focus shifts to creating a repeatable and scalable process.

Action Items: Formally document the end-to-end experimentation workflow, from hypothesis submission to results documentation. Create a central, accessible experiment library or repository to house all learnings. Develop and deliver foundational training on experimentation principles and tools to key marketing and product teams to begin democratizing skills.

Goal: To codify best practices and create a consistent, reliable system that can handle an increasing volume of experiments without a decline in quality.

- Phase 3: Scaling (Months 10-18): This phase is about expanding the program’s reach and sophistication.

Action Items: Transition the organizational structure toward a hybrid or federated model, embedding experimentation expertise within key business units. Invest in a more advanced, integrated technology stack to reduce friction and automate processes. Begin tracking more sophisticated program metrics, such as Learning Velocity and the Experiment-to-Learning Ratio.

Goal: To move from a centrally driven program to a distributed capability, significantly increasing the organization’s overall testing velocity and learning capacity.

- Phase 4: Optimization (Ongoing): A mature experimentation program is never “done”; it is in a state of continuous improvement.

Action Items: Regularly conduct retrospectives to identify and eliminate bottlenecks in the process. Actively explore and pilot AI-powered experimentation tools to enhance automation and personalization. Formally integrate experimentation goals and learnings into the annual strategic planning cycle and individual performance reviews.

Goal: To fully embed experimentation as a core, indispensable business function that drives long-term, sustainable growth.

8.2. Key Investments in People, Process, and Technology

This transformation requires targeted investment across three key pillars:

- People: The success of the program hinges on the skills and mindset of the team. Organizations must hire for innate curiosity, analytical aptitude, and a collaborative spirit. It is equally important to invest in ongoing training and professional development, including certifications and access to industry communities, to keep the team’s skills at the cutting edge. Creating clear career progression paths for experimentation professionals is essential for retaining top talent.

- Process: A well-defined process is the scaffolding that supports a culture of experimentation. This involves codifying and making accessible the standards for the workflow, prioritization, QA, and documentation. The process should be designed to empower teams and reduce friction, not to create bureaucracy.

- Technology: Investing in the right tools is a critical enabler of velocity. The focus should be on building an integrated stack that allows for a seamless flow of data and insights between analytics, testing, and personalization platforms. This removes manual work and shortens the cycle time from insight to action.

8.3. Concluding Insights: Embedding Experimentation as a Core Business Function

Ultimately, achieving a state of high-velocity experimentation is about more than just adopting a new set of marketing tactics. It is a fundamental shift in an organization’s approach to decision-making, risk management, and growth. It represents the operational embodiment of the scientific method, applied to the challenges of the modern marketplace.

The journey requires a steadfast commitment to replacing opinion with evidence, embracing failure as a necessary component of learning, and empowering teams at all levels to contribute to a culture of relentless curiosity. The organizations that successfully build this velocity engine will be the ones that can most effectively navigate uncertainty. They will learn faster, adapt more quickly, and consistently deliver superior value to their customers. In the digital age, the capacity for rapid, validated learning is not just a competitive advantage—it is the primary driver of enduring success.