Incrementality Testing: Measure True Ad Impact, Optimize Budgets

Section 1: The Causal Revolution in Advertising: Beyond ROAS to True Incremental Value

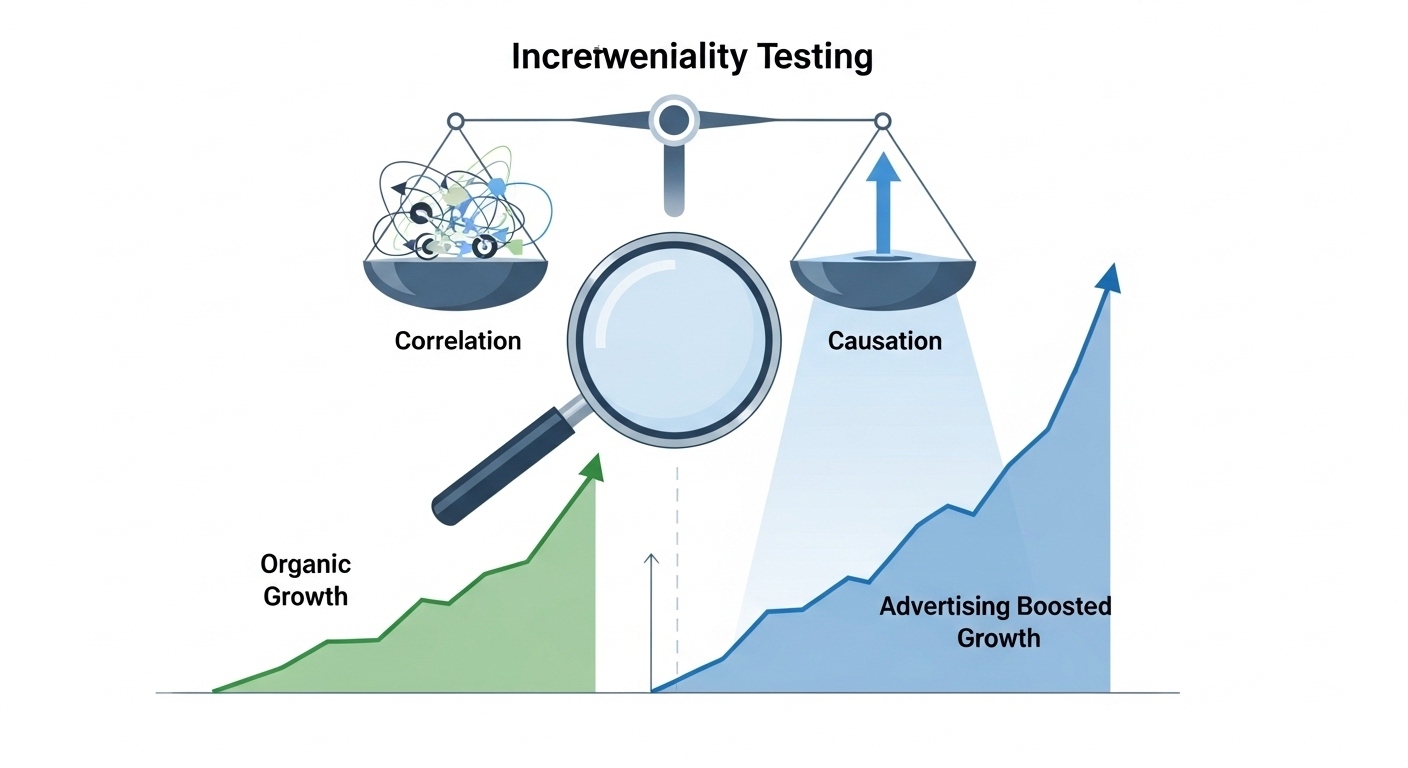

In an era of tightening budgets and increasing demands for accountability, the advertising industry is undergoing a fundamental shift in how it defines and measures success. For years, marketers have relied on metrics like Return on Ad Spend (ROAS) and Return on Investment (ROI) as the primary indicators of campaign performance. However, a growing body of evidence and a changing technological landscape have exposed a critical flaw in this approach: these metrics measure correlation, not causation.

This report provides an exhaustive analysis of incrementality testing, the scientific framework designed to measure the true, causal impact of advertising. It will deconstruct the limitations of traditional metrics, provide a detailed technical overview of modern measurement methodologies, and offer a practical playbook for agencies of all sizes to implement a more rigorous and defensible approach to proving marketing’s value.

1.1 The Attribution Trap: Why ROAS and ROI Are No Longer Enough

Traditional performance metrics such as ROAS and ROI have long been the bedrock of digital marketing reporting. They provide a straightforward, high-level view of efficiency, answering the seemingly simple question: for every dollar spent, how many dollars were returned? This simplicity, however, masks a profound analytical weakness. These metrics are fundamentally measures of correlation; they show that ad spend and revenue occurred concurrently, but they cannot prove that the ad spend caused the revenue. Attribution models, whether last-click or multi-touch, are designed to answer the question, “Who gets the credit for a conversion?” Incrementality, in contrast, answers the far more critical business question, “What did my marketing truly cause to happen?”

The most significant danger of relying solely on attribution is the problem of organic cannibalization. A high ROAS can be dangerously misleading if a large portion of the attributed conversions would have occurred organically, even without the presence of an ad. For example, a campaign that generates 10,000 app installs may seem successful, but if 80% of those users would have installed the app anyway, the campaign’s true value is dramatically overstated. This is particularly prevalent in branded search and retargeting campaigns. A branded search campaign may report a 10x ROAS, but an incrementality test can reveal that the vast majority of those conversions came from users who were already intending to purchase and simply used the search ad as a convenient navigation tool. In one documented case, a test found that 81% of branded search conversions would have happened regardless, meaning the advertiser was spending heavily to capture demand that already existed. This illustrates the crucial distinction between taking credit for a conversion and actively creating value for the business.
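The arithmetic behind these examples is simple enough to script. The minimal sketch below assumes the non-incremental share has already been estimated (for example, by a holdout test) and that non-incremental conversions carry the same average revenue as incremental ones; the function names are hypothetical, and the inputs simply reuse the 80% and 81% examples above.

```python
def incremental_conversions(attributed: float, non_incremental_share: float) -> float:
    """Conversions actually caused by the campaign.

    non_incremental_share is the fraction of attributed conversions that would
    have happened anyway (e.g. 0.80 for "80% would have installed regardless").
    """
    if not 0.0 <= non_incremental_share <= 1.0:
        raise ValueError("non_incremental_share must be between 0 and 1")
    return attributed * (1.0 - non_incremental_share)


def adjusted_roas(reported_roas: float, non_incremental_share: float) -> float:
    """Scale platform-reported ROAS down to the revenue the ads actually caused.

    Assumes non-incremental conversions have the same average order value as
    incremental ones, so revenue scales with conversion counts.
    """
    if not 0.0 <= non_incremental_share <= 1.0:
        raise ValueError("non_incremental_share must be between 0 and 1")
    return reported_roas * (1.0 - non_incremental_share)


# App-install example: 10,000 attributed installs, 80% would have happened anyway.
print(round(incremental_conversions(10_000, 0.80)))  # 2000
# Branded search example: 10x reported ROAS, 81% of conversions non-incremental.
print(round(adjusted_roas(10.0, 0.81), 2))  # 1.9
```

Under these assumptions, the branded search campaign's headline 10x return shrinks to roughly 1.9x once the conversions that would have happened anyway are removed.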

This reliance on correlational data creates a perilous feedback loop. Marketers, often under pressure to demonstrate performance, observe a high ROAS on a particular channel and, assuming causation, invest more heavily in it. This can lead to a cycle of inefficient budget allocation where resources are poured into channels that are adept at capturing existing intent rather than generating new demand. The result is a marketing strategy built on a foundation of misleading data, where budgets are defended based on correlation, team confidence wavers, and leadership begins to view marketing as a cost center rather than a driver of growth.

1.2 Defining Incrementality: The Shift from Correlation to Causation

Incrementality is a measurement framework that quantifies the causal lift in a business outcome (such as sales, leads, or app installs) that is directly attributable to a specific marketing activity. It isolates the results that would not have happened without the advertising, separating them from organic behavior, brand loyalty, and other confounding factors. The core of incrementality is its ability to answer the counterfactual question that lies at the heart of all causal analysis: “What would have happened if I had not run this ad?”

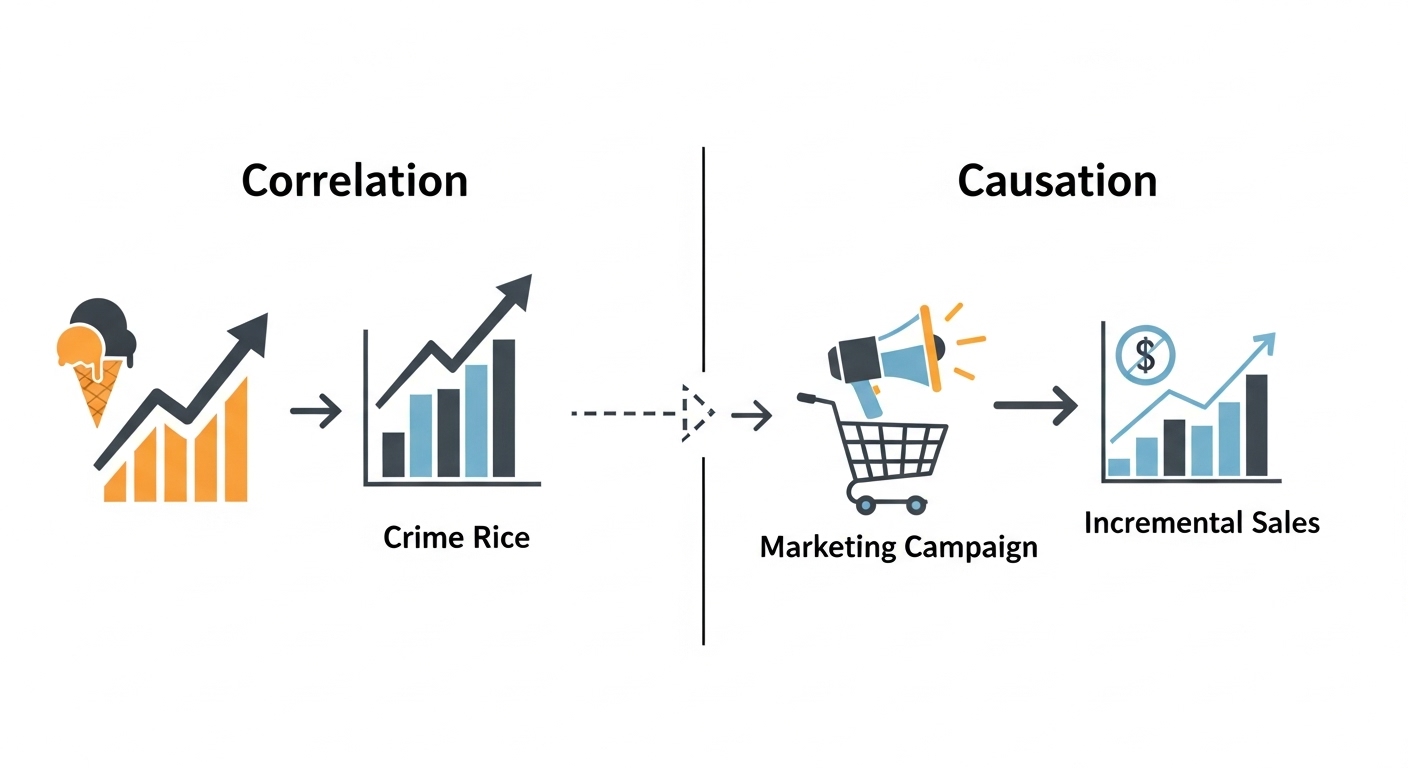

To grasp the importance of this shift, one must understand the fundamental statistical difference between correlation and causation. Correlation indicates a relationship where two variables move in tandem; when one changes, the other tends to change as well. A famous example illustrates this distinction: ice cream sales and crime rates are highly correlated, as both rise during the summer. However, buying ice cream does not cause crime; a third variable, warm weather, drives both. In marketing, a surge in sales may be correlated with a new ad campaign, but the true cause could be seasonality, a competitor’s misstep, or a viral social media trend. Causation, on the other hand, means that a change in one variable is the direct result of a change in another. A causal effect usually produces a correlation, but a correlation on its own never establishes a causal effect. The only way to prove causation is through rigorous, controlled experimentation, which is the very foundation of incrementality testing.

This causal framework introduces a new set of metrics that provide a far more accurate assessment of marketing performance. The two most critical are Lift and Incremental ROAS (iROAS).

- Lift: This metric quantifies the percentage increase in conversions in the group exposed to advertising compared to the control group that was not. It is the direct measure of the campaign’s causal effect. The formula compares raw conversion counts, which assumes the two groups are the same size; when they differ, compare conversion rates instead.

$$\text{Lift}\ (\%) = \frac{\text{Test Group Conversions} - \text{Control Group Conversions}}{\text{Control Group Conversions}} \times 100$$

- Incremental ROAS (iROAS): This metric measures the return specifically on the incremental revenue generated by an advertising investment. Unlike traditional ROAS, which includes all attributed revenue, iROAS isolates the additional revenue that would not have been achieved without the ad spend. It provides a true measure of a campaign’s marginal efficiency. The formula is:

$$\text{iROAS} = \frac{\text{Incremental Revenue}}{\text{Ad Spend}}$$
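Both formulas translate directly into code. The minimal sketch below works with conversion rates so that it stays valid when the test and control groups differ in size (with equal sizes it reduces to the count-based formula). The group sizes, the $50 revenue per conversion, and the $5,000 spend are hypothetical values chosen for illustration.

```python
def lift_pct(test_conversions: float, test_size: int,
             control_conversions: float, control_size: int) -> float:
    """Percentage lift of the test group's conversion rate over the control group's.

    Using rates rather than raw counts keeps the formula valid when the two
    groups differ in size; with equal sizes it matches the count-based formula.
    """
    if test_size <= 0 or control_size <= 0:
        raise ValueError("group sizes must be positive")
    test_rate = test_conversions / test_size
    control_rate = control_conversions / control_size
    if control_rate == 0:
        raise ValueError("control group has no conversions; lift is undefined")
    return (test_rate - control_rate) / control_rate * 100


def incremental_from_test(test_conversions: float, test_size: int,
                          control_conversions: float, control_size: int) -> float:
    """Test-group conversions minus those the control rate predicts for a group that size."""
    expected_without_ads = control_conversions * test_size / control_size
    return test_conversions - expected_without_ads


def iroas(incremental_revenue: float, ad_spend: float) -> float:
    """Incremental revenue returned per unit of ad spend."""
    if ad_spend <= 0:
        raise ValueError("ad_spend must be positive")
    return incremental_revenue / ad_spend


# Hypothetical test: 100,000 users in each group.
print(round(lift_pct(1_200, 100_000, 1_000, 100_000), 1))  # 20.0

extra = incremental_from_test(1_200, 100_000, 1_000, 100_000)  # 200.0 conversions
revenue_per_conversion = 50.0  # assumed average revenue per conversion
print(iroas(extra * revenue_per_conversion, ad_spend=5_000.0))  # 2.0
```

Note that a 20% lift and an iROAS of 2.0 answer different questions: the first says the ads caused more conversions, the second says whether those extra conversions paid for the spend.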

1.3 The Business Imperative: Why Incrementality Matters Now More Than Ever

The shift toward incrementality is not merely an academic exercise; it is a strategic necessity driven by powerful market forces. Three converging trends have made causal measurement an indispensable component of the modern marketing toolkit.

First, economic pressure and heightened budget scrutiny have raised the bar for marketing accountability. As budgets contract, finance departments and executive leadership are no longer satisfied with correlational metrics. They demand definitive proof of value, asking the critical question: “What happens if we turn the ads off?” Incrementality testing is the only methodology that can directly answer this question, aligning marketing performance with tangible business outcomes and building trust with the C-suite.

Second, the advertising industry is navigating a paradigm shift toward a privacy-first ecosystem. The implementation of regulations like GDPR and CCPA, coupled with platform changes such as Apple’s App Tracking Transparency (ATT) framework, enforced from iOS 14.5, has severely limited the availability of user-level data. The erosion of third-party cookies and mobile identifiers is rendering traditional multi-touch attribution models, which rely on persistent user tracking, increasingly unreliable and obsolete. Incrementality experiments, which can measure lift at an aggregate level (such as a geographic region) without relying on personal data, offer a more durable and future-proof measurement solution.

Finally, the rise of walled gardens and retail media networks has created an urgent need for independent verification. In ecosystems like Amazon, Walmart, and Google, the entity selling the advertising is also the one reporting on its performance—a practice often described as “grading their own homework”. Marketers question the accuracy of platform-reported attribution, which often claims 100% credit for sales. This is especially critical in the burgeoning retail media space, where the ad exposure and the purchase transaction occur within the same closed loop, making it difficult to disentangle advertising influence from organic shopping behavior. Incrementality testing provides brands with an unbiased, scientific method to validate platform claims and understand the true contribution of their investments. Reflecting this shift, a 2024 survey by the Association of National Advertisers found that 71% of advertisers now consider incrementality the most important performance metric for their retail media network investments.

These forces are not acting in isolation. The collision of economic pressure demanding greater justification for ad spend with privacy changes making that justification harder to achieve through old methods has created a measurement vacuum. Incrementality testing is the logical and necessary framework to fill that void, providing the rigorous proof of value that modern business demands in a way that is compatible with the new privacy landscape. Adopting this framework is therefore not just a technical upgrade for an analytics team; it represents a profound cultural and strategic shift for the entire marketing organization. It moves the focus from a defensive posture of “proving our campaigns worked” with correlated data to an offensive, growth-oriented mission of discovering “which of our campaigns are actually working” through causal evidence.

Section 2: The Gold Standard and Its Variants: A Framework for Causal Measurement

Measuring the causal impact of advertising requires a departure from passive observation and an embrace of active experimentation. The methodologies for incrementality testing range from the scientifically pristine to the pragmatically adapted, each with a distinct set of strengths, weaknesses, and applications. Understanding this spectrum is crucial for selecting the right approach to answer a specific business question. This section provides a detailed technical breakdown of the primary frameworks for causal measurement, from the theoretical benchmark of Randomized Controlled Trials to the real-world applications of geo-experiments, ghost ads, and platform-native tools.

2.1 Randomized Controlled Trials (RCTs): The Scientific Benchmark

Randomized Controlled Trials (RCTs), often referred to as A/B tests in the tech industry, are widely regarded as the “gold standard” for measuring causal effects. The methodology is rooted in the scientific method and is designed to isolate the impact of a single variable. In advertising, this involves randomly dividing a defined target audience into two statistically equivalent groups: a Test Group (also called the Treatment Group), whose members are eligible to be exposed to an ad campaign, and a Control Group, whose members are deliberately withheld from the campaign. Because the assignment to each group is random, any pre-existing differences in demographics, purchasing intent, or other characteristics are balanced between the two groups on average. Consequently, the only systematic difference between them is their exposure to the advertising. Any subsequent difference in outcomes, such as conversion rates, that exceeds what chance alone would produce can therefore be attributed to the causal effect of the ads.
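In practice, random assignment is often implemented by hashing a stable user identifier, so that the same user always lands in the same group for the life of the test. The sketch below shows one common approach rather than any specific platform's implementation; the salt string, the 10% control share, and the identifier format are assumptions.

```python
import hashlib


def assign_group(user_id: str, salt: str = "incrementality-test-01",
                 control_share: float = 0.10) -> str:
    """Deterministically assign a user to 'test' or 'control'.

    Hashing user_id together with an experiment-specific salt yields a
    pseudo-random, roughly uniform number in [0, 1); users whose number falls
    below control_share go to the control group.
    """
    if not 0.0 < control_share < 1.0:
        raise ValueError("control_share must be strictly between 0 and 1")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:15], 16) / 16**15  # first 60 bits of the hash mapped to [0, 1)
    return "control" if bucket < control_share else "test"


if __name__ == "__main__":
    groups = [assign_group(f"user-{i}") for i in range(100_000)]
    print(groups.count("control") / len(groups))  # close to 0.10
```

Changing the salt for each new experiment reshuffles users, so the same people are not always held out of every test.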

The power of randomization cannot be overstated. Observational methods, which simply compare users who happened to see an ad with those who did not, are plagued by selection bias. Ad platforms are designed to show ads to users who are most likely to convert, meaning the “exposed” group is inherently different from the “unexposed” group from the outset. This fundamental difference can lead to a wild overestimation of advertising’s true effect. One large-scale analysis of 15 campaigns on Facebook found that common observational techniques inflated the measured lift by an average factor of three, with one campaign showing an apparent lift of 416% when the true, RCT-measured lift was closer to 77%. RCTs eliminate this bias, providing the most accurate measure of true impact.
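A small simulation makes the mechanism concrete. In the sketch below, which runs entirely on synthetic data, each user has a hidden purchase intent, and the ad is assumed to raise conversion probability by a fixed 10% for everyone. In the "observational" world, targeting favors high-intent users, mimicking platform optimization; in the "randomized" world, a coin flip decides who is eligible, and every eligible user is assumed to see the ad. All parameters are arbitrary assumptions chosen for illustration.

```python
import random


def rate(conversions: int, users: int) -> float:
    return conversions / users


def simulate(n_users: int = 200_000, true_lift: float = 0.10, seed: int = 42):
    """Return (naive_lift, randomized_lift) as fractions, computed on synthetic users."""
    rng = random.Random(seed)
    observational = {True: [0, 0], False: [0, 0]}  # saw ad? -> [conversions, users]
    randomized = {True: [0, 0], False: [0, 0]}     # in test group? -> [conversions, users]

    for _ in range(n_users):
        intent = rng.random()            # hidden purchase intent, uniform on [0, 1)
        base_p = 0.02 + 0.08 * intent    # conversion probability without ads: 2% to 10%
        ad_p = base_p * (1 + true_lift)  # the ad raises conversion probability by true_lift

        # Observational world: targeting favors high-intent users.
        saw_ad = rng.random() < intent
        converted = rng.random() < (ad_p if saw_ad else base_p)
        observational[saw_ad][0] += converted
        observational[saw_ad][1] += 1

        # Randomized world: a coin flip, unrelated to intent, decides eligibility.
        in_test = rng.random() < 0.5
        converted = rng.random() < (ad_p if in_test else base_p)
        randomized[in_test][0] += converted
        randomized[in_test][1] += 1

    naive = rate(*observational[True]) / rate(*observational[False]) - 1
    rct = rate(*randomized[True]) / rate(*randomized[False]) - 1
    return naive, rct


if __name__ == "__main__":
    naive, rct = simulate()
    print(f"naive exposed-vs-unexposed lift: {naive:.0%}")  # several times the true 10%
    print(f"randomized test-vs-control lift: {rct:.0%}")    # close to the true 10%
```

The naive comparison is inflated because exposed users were more likely to buy in the first place; randomization removes that difference before the ad ever runs.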

A critical principle governing the analysis of advertising RCTs is “Intention-to-Treat” (ITT). Under this principle, all users in the test group are included in the analysis, even if they were never actually served an impression. The comparison is between the group intended for treatment and the control group. This approach, which regulators such as the FDA recommend as the primary analysis for clinical trials, prevents researchers from consciously or unconsciously biasing results by removing subjects who did not adhere to the treatment protocol. It measures the real-world effect of launching a campaign, accounting for the fact that not every targeted user will ultimately see the ad.
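The ITT calculation itself is simple: conversion rates are computed over everyone assigned to each group, whether or not they were served an impression. The sketch below uses made-up counts; the `GroupCounts` structure is a hypothetical convenience, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupCounts:
    assigned: int     # every user randomized into the group
    conversions: int  # conversions among ALL assigned users, served or not


def itt_lift_pct(test: GroupCounts, control: GroupCounts) -> float:
    """Intention-to-treat lift: everyone assigned to test versus everyone in control.

    test.assigned is deliberately not reduced to users who were actually served
    an impression; dropping unserved users would reintroduce selection bias.
    """
    if test.assigned <= 0 or control.assigned <= 0:
        raise ValueError("both groups need at least one assigned user")
    test_rate = test.conversions / test.assigned
    control_rate = control.conversions / control.assigned
    if control_rate == 0:
        raise ValueError("control group has no conversions; lift is undefined")
    return (test_rate - control_rate) / control_rate * 100


# Hypothetical counts: only some test users were ever served an ad,
# but all 50,000 stay in the denominator.
test = GroupCounts(assigned=50_000, conversions=1_650)
control = GroupCounts(assigned=50_000, conversions=1_500)
print(round(itt_lift_pct(test, control), 1))  # 10.0
```

Because unexposed test users dilute the result, the ITT lift is a conservative estimate of what the ad does to the people who actually see it, but it is an unbiased estimate of what launching the campaign does.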

Despite their scientific rigor, true user-level RCTs face significant practical limitations. Many advertising platforms historically did not, and some still do not, offer the technical capability to create and maintain a pure, randomized control group. Furthermore, implementing them correctly can be time-consuming and expensive, and the rise of privacy regulations is making the practice of user-level randomization increasingly challenging.

2.2 Geo-Lift and Geo-Holdout Experiments: Measuring Impact in the Real World

When user-level randomization is not feasible—as is the case for many offline channels like TV and radio, or within certain digital walled gardens—marketers can use geography as the unit of randomization. These experiments, known as geo-tests, operate on the same control-versus-test principle but apply it to geographic markets like cities, states, or designated market areas (DMAs).

There are two primary forms of geo-experimentation:

- Geo-Lift (or Geo Test): In this design, a new marketing activity or an increased level of spend is introduced in a randomly selected set of “test” markets. The remaining, comparable markets serve as the “control” group, where business continues as usual. The goal is to measure the positive lift generated by the new initiative.

- Geo-Holdout: This design is used to measure the contribution of an existing marketing activity. The activity is paused or “held out” in a set of test markets, while it continues to run in the control markets. This method directly answers the crucial counterfactual question, “How many conversions would we lose if we turned this channel off?”

Designing a valid geo-experiment is a complex statistical undertaking. The first step is market selection, which involves identifying and grouping geographic areas that are statistically comparable in terms of historical sales trends, seasonality, population size, demographics, and media relevancy. Advanced methodologies employ synthetic control models, which use historical data from a portfolio of control markets to create a highly accurate “prediction” of what sales in the test markets would have been without the advertising intervention. The second step is a power analysis to determine the test’s duration and its Minimum Detectable Effect (MDE)—the smallest percentage lift that the experiment has a high probability of detecting. This ensures the test is designed to yield statistically significant results.
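The core idea behind synthetic control can be sketched with a simple regression: learn how the test market's pre-period sales relate to the control markets' sales, then apply that relationship to post-period control sales to predict what the test market would have sold without the campaign. The sketch below is a simplified, unconstrained least-squares variant (full synthetic control methods typically constrain the weights to be non-negative and sum to one), relies on NumPy, and runs on synthetic weekly sales invented for illustration.

```python
import numpy as np


def counterfactual_lift(control_pre: np.ndarray, test_pre: np.ndarray,
                        control_post: np.ndarray, test_post: np.ndarray) -> float:
    """Lift (%) of the test market over a counterfactual predicted from control markets.

    control_pre / control_post: arrays of shape (weeks, n_control_markets)
    test_pre / test_post:       arrays of shape (weeks,)
    """
    # Fit test-market sales as intercept + weighted sum of control-market sales (pre-period only).
    X_pre = np.column_stack([np.ones(len(control_pre)), control_pre])
    weights, *_ = np.linalg.lstsq(X_pre, test_pre, rcond=None)
    # Apply the same weights to post-period control sales to predict the "no campaign" path.
    X_post = np.column_stack([np.ones(len(control_post)), control_post])
    predicted = X_post @ weights
    return float((test_post.sum() - predicted.sum()) / predicted.sum() * 100)


# Synthetic example: 52 pre-period weeks, 8 campaign weeks, 3 control markets.
rng = np.random.default_rng(0)
pre, post, n_controls = 52, 8, 3
trend = np.linspace(100, 140, pre + post)  # demand shared by all markets
controls = trend[:, None] * rng.uniform(0.8, 1.2, n_controls) + rng.normal(0, 1, (pre + post, n_controls))
test_market = trend + rng.normal(0, 1, pre + post)
test_market[pre:] *= 1.08  # simulated 8% campaign effect in the test market

lift = counterfactual_lift(controls[:pre], test_market[:pre], controls[pre:], test_market[pre:])
print(f"estimated lift: {lift:.1f}%")  # should land near the simulated 8%
```

Real market data is far noisier than this toy series, which is exactly why the power analysis and MDE step matters before launch.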

Despite their utility, geo-tests are notoriously susceptible to a range of pitfalls that can invalidate their results:

- Regions Aren’t Twins: No two geographic markets are perfectly identical. Unforeseen local events—an economic boom, a major weather event, or a competitor’s aggressive promotional activity—can impact one group of markets but not the other, creating noise that confounds the measurement of the ad’s true effect.

- Contamination and Spillover: This is the most critical challenge. The integrity of a geo-test depends on each group receiving only its intended treatment, so that the comparison remains a clean, unmanipulated baseline. However, modern ad platforms can undermine this. If an advertiser pauses YouTube ads in California (the holdout group), the platform’s algorithm may automatically redirect that unused budget to other regions, including Texas (the control group), artificially inflating exposure there and distorting the comparison. This is known as in-channel contamination. Similarly, other marketing channels (e.g., Facebook, programmatic display) may recognize the void left in the holdout region and automatically increase their ad pressure to compensate. This cross-channel contamination means the holdout region no longer represents a true “channel off” baseline, which narrows the measured gap between the groups and leads to a severe underestimation of the tested channel’s true impact.

- Low Statistical Power: Unlike user-level RCTs with millions of data points, geo-tests operate on a very small sample size—often just a handful of regions. This makes it difficult to detect subtle but meaningful effects and often results in very wide confidence intervals. A test might report a 5% incremental lift, but with a margin of error of ±4%, the true effect could be anywhere from a highly profitable 9% to a negligible 1%. This level of uncertainty makes confident budget allocation extremely difficult.

2.3 Ghost Ads (Ghost Bidding): Programmatic Precision

Ghost Ads, also known as Ghost Bidding, represent a sophisticated and highly precise method for conducting incrementality tests within real-time, auction-based advertising environments. This technique leverages the mechanics of programmatic ad buying to create a near-perfect control group on the fly.

The methodology works as follows: a target audience is randomly split into test and control groups at the user level. When a user from the test group triggers an ad opportunity, the system bids normally in the auction to serve them an ad. However, when a user from the control group triggers an ad opportunity, the system still enters the auction but is programmed to intentionally lose the bid (or not bid at all). Crucially, the system logs this event as a “ghost” impression—an instance where a specific user in the control group would have been shown an ad but was not.

The primary benefit of this approach is the creation of an exceptionally clean control group. The ghost ad methodology ensures that the test and control groups are identical in every observable way—demographics, browsing behavior, context, and real-time intent—right up to the split-second decision in the ad auction. This “apples-to-apples” comparison eliminates much of the noise and selection bias inherent in other methods. By comparing the conversion behavior of users who actually saw the ad against those who were recorded as ghost impressions, advertisers can get a highly accurate and trustworthy measure of incremental lift.
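The logging logic can be sketched as a small simulation. This is an illustration under stated assumptions, not any ad platform's actual implementation: each user gets one simulated ad opportunity, higher-intent users are assumed more likely to "win" the auction (mimicking bid optimization), and the same win rule applies to both groups. Test users who win are served; control users who would have won are logged as ghost impressions. The intent model, conversion rates, and 15% effect are all assumed values.

```python
import random


def run_ghost_ad_test(n_users: int = 400_000, true_lift: float = 0.15, seed: int = 1) -> float:
    """Simulate a ghost-ad test and return the estimated lift as a fraction."""
    rng = random.Random(seed)
    served = [0, 0]  # test users who won the auction and saw the ad: [conversions, users]
    ghosts = [0, 0]  # control users who would have won but were only logged: [conversions, users]

    for _ in range(n_users):
        intent = rng.random()
        in_test = rng.random() < 0.5       # user-level randomization
        would_win = rng.random() < intent  # auction outcome, same rule for both groups
        if not would_win:
            continue                       # no impression, real or ghost; excluded from the comparison
        base_p = 0.01 + 0.05 * intent      # conversion probability without the ad
        if in_test:
            converted = rng.random() < base_p * (1 + true_lift)  # ad actually served
            served[0] += converted
            served[1] += 1
        else:
            converted = rng.random() < base_p  # ad withheld, ghost impression logged
            ghosts[0] += converted
            ghosts[1] += 1

    served_rate = served[0] / served[1]
    ghost_rate = ghosts[0] / ghosts[1]
    return served_rate / ghost_rate - 1


if __name__ == "__main__":
    print(f"estimated lift: {run_ghost_ad_test():.1%}")  # near the simulated 15%
```

Even though the auction favors high-intent users, the estimate lands near the true effect because served users and ghost users pass through the identical selection step.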

This method also avoids the significant opportunity cost of traditional holdout tests, which require sacrificing all ad exposure to a large control group.

2.4 Platform-Native Lift Studies: Leveraging Walled Garden Tools

Recognizing the growing demand for causal measurement, major advertising platforms like Google (Conversion Lift) and Meta (Facebook Lift Studies) have developed their own built-in incrementality testing tools. These tools are designed to automate the process of creating randomized experiments for campaigns running on their respective platforms.

The methodology typically involves the platform automatically splitting a campaign’s target audience into a test group that sees the ads and a control group that does not. The platform then tracks conversions across both groups (using its internal conversion tracking pixels) and reports on the incremental lift, iROAS, and statistical confidence of the results. These studies can be configured for either user-level splits, where the platform randomizes individual users, or geo-based splits, where randomization occurs at the regional level.

The primary advantages of these native tools are their accessibility and cost. They are generally offered at no additional charge beyond the media spend, are integrated directly into the familiar ad management interface, and are relatively easy to set up without requiring a dedicated data science team. This makes them an excellent entry point for advertisers looking to begin their incrementality journey.

However, these tools come with significant limitations, chief among them being the walled garden problem.

- Single-Channel View: A Facebook Lift Study can only measure the impact of Facebook ads, and a Google Conversion Lift study can only measure the impact of Google ads. They operate in a vacuum, completely blind to the user’s exposure to other marketing channels. A user in the Facebook control group is still being influenced by TV, TikTok, and Google Search ads, making it impossible to isolate the true incremental contribution of Facebook in a multi-channel marketing mix.

- Potential for Bias: There is an inherent conflict of interest when the entity with a vested financial interest in the ads’ performance is also the sole arbiter of their measurement. Independent research has suggested that some platform-based tests may not use true randomization, instead assigning users with a higher pre-existing intent to convert to the test group, a practice that would systematically inflate the reported lift and make the platform’s ads appear more effective than they truly are.

The choice of an incrementality methodology is therefore a strategic decision involving a trade-off between scientific precision, practical feasibility, and cross-channel applicability. While a user-level RCT is the theoretical ideal, its implementation is often impractical. Geo-tests offer a solution for offline and non-addressable media but are fraught with challenges related to contamination and statistical power. Ghost ads provide a highly precise solution within programmatic environments, while platform studies offer an accessible but limited and potentially biased starting point. The validity of any of these tests hinges on one central challenge: the creation and maintenance of a pure, uncontaminated control group that can serve as a true representation of the “parallel world” where no advertising occurred.

The following table provides a comparative analysis to guide the selection of the most appropriate methodology based on specific business needs.

| Methodology | Primary Use Case | Pros | Cons | Relative Cost |
|---|---|---|---|---|
| Randomized Controlled Trial (RCT) | Measuring precise user-level impact on digital platforms. | Gold standard for accuracy; eliminates selection bias. | Often costly; platform-dependent; privacy limitations. | High |
| Geo-Holdout / Geo-Lift | Measuring channel-level impact for online/offline media. | Measures broad impact; good for channels without user-level targeting. | Prone to contamination; requires careful design; less precise. | Medium-High |
| Ghost Ads / Bidding | Measuring impact in programmatic/auction-based environments. | Creates a highly accurate control group; minimizes wasted spend. | Technically complex; requires platform support. | Medium |
| Platform Lift Studies | Quick, in-platform measurement for a single channel (e.g., Meta, Google). | Easy to set up; no additional cost. | Walled garden view; potential for platform bias; no cross-channel insight. | Low |
| DIY Time-Based Tests | Directional insights for small-scale campaigns or new channels. | No direct cost; easy to implement. | Highly susceptible to external factors; low statistical rigor. | Very Low |

Section 3: Designing Low-Cost Experiments: An Incrementality Playbook for Small Agencies

For small agencies and businesses operating with limited budgets and without dedicated data science teams, the prospect of running sophisticated incrementality tests can seem daunting. However, the principles of causal measurement are not the exclusive domain of large enterprises. By adopting a disciplined experimentation mindset and employing practical, low-cost methodologies, any organization can begin to move beyond correlation-based metrics and make more informed, evidence-based decisions. This section provides a practical playbook for designing and executing effective, low-cost incrementality experiments.

3.1 The Experimentation Mindset: A Framework for Continuous Learning

The foundation of successful, low-cost measurement is not a complex tool but a structured process. Rather than engaging in ad-hoc tests, small agencies should adopt a formal experimentation framework to ensure that every test is purposeful, measurable, and contributes to a cumulative body of knowledge. A robust yet simple framework consists of the following steps:

1. Define the Objective: Every experiment must begin with a clear, specific, and measurable business goal. Vague objectives like “improve engagement” are insufficient. A better objective is “Increase new customer trial sign-ups by 10% within the next quarter”.

2. Formulate a Hypothesis: A hypothesis is a clear, testable statement that proposes a causal relationship. It should be based on research or observation and framed in a disprovable “If-Then” format. For example, based on an observation that branded search traffic has a high bounce rate, a hypothesis might be: “If we pause our branded search campaign for two weeks, then we will see no statistically significant decrease in total website conversions, because these users would have converted anyway via organic or direct channels”.

3. Define Key Performance Indicators (KPIs): Select the specific metrics that will be used to evaluate the hypothesis. It is crucial to focus on metrics that reflect true business value, such as incremental conversions or incremental Cost Per Acquisition (iCPA), rather than vanity metrics like clicks, impressions, or click-through rates, which can be misleading.

4. Prioritize Experiments: Small teams cannot test everything at once. Ideas should be prioritized using a simple framework like ICE (Impact, Confidence, Ease) to identify the “low-hanging fruit”: tests that have a high potential impact and require little effort to implement. This ensures that limited resources are focused on the most promising opportunities first.
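A spreadsheet is enough for ICE scoring, but the logic is easy to express in code. The sketch below assumes each dimension is scored from 1 to 10, with effort scored as "ease" so that a higher number means less work, and it ranks ideas by the product of the three scores; averaging them is another common convention. The `Idea` type and the backlog entries are hypothetical.

```python
from typing import NamedTuple


class Idea(NamedTuple):
    name: str
    impact: int      # 1-10: expected effect on the objective if the hypothesis holds
    confidence: int  # 1-10: how strongly existing evidence supports the hypothesis
    ease: int        # 1-10: higher means less effort to run

    @property
    def ice(self) -> int:
        return self.impact * self.confidence * self.ease


def prioritize(ideas: list[Idea]) -> list[Idea]:
    """Return ideas sorted from highest to lowest ICE score."""
    for idea in ideas:
        for score in (idea.impact, idea.confidence, idea.ease):
            if not 1 <= score <= 10:
                raise ValueError(f"{idea.name}: scores must be between 1 and 10")
    return sorted(ideas, key=lambda idea: idea.ice, reverse=True)


backlog = [
    Idea("Pause branded search for two weeks", impact=8, confidence=6, ease=9),
    Idea("Geo-holdout for paid social", impact=9, confidence=5, ease=3),
    Idea("New landing-page headline", impact=4, confidence=5, ease=8),
]
for idea in prioritize(backlog):
    print(idea.ice, idea.name)
```

The scores themselves are subjective; the value of writing them down is that the team has to agree on them before choosing what to test.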

For small businesses, the primary goal of this framework is not necessarily to achieve perfect statistical precision in every test. Instead, its value lies in instilling a culture of disciplined, evidence-based decision-making. The very act of questioning assumptions, forming a testable hypothesis, and measuring the outcome moves the organization away from marketing based on gut feelings and toward a more scientific approach. This process helps teams become less wrong over time, which is a powerful driver of long-term growth.

3.2 Scrappy but Scientific: Practical DIY Testing Methods

Several low-cost, do-it-yourself testing methods can provide strong directional insights into incrementality without requiring expensive software or deep statistical expertise.

- Time-Based Holdouts: This is the simplest form of an incrementality test. It involves pausing a specific marketing channel or campaign for a defined period (e.g., one to two weeks) and carefully measuring the change in the overall baseline conversion volume. After the holdout period, the campaign is reactivated, and the subsequent lift in conversions is measured. While this method is highly susceptible to external factors, it is an effective way to get a directional read on the impact of a single, isolated marketing activity.

- Observational “Natural Experiments”: These techniques leverage naturally occurring variations in marketing pressure to infer incrementality. While not true controlled experiments, they are free and can reveal significant patterns:

  - The Weekend Method: For businesses with different activity levels on weekdays versus weekends, plot total paid and organic conversions over a 30-day period. If paid spend and attributed conversions drop significantly on weekends but total conversions remain stable (buoyed by a corresponding rise in organic conversions), it is a strong indicator that the paid activity is cannibalizing organic demand during the week.

  - The End-of-Month Method: Many ad vendors operate on monthly budgets. Observe what happens to total conversion volume when a major channel’s budget is exhausted near the end of the month. If the drop in paid conversions has little to no impact on the total number of conversions, it suggests that the channel has low incrementality.

  - The New Channel Method (“1st Grade Math”): When launching a new advertising channel, track the sum of all conversions (Paid + Organic) before and after the launch. If the conversions attributed to the new channel simply seem to replace conversions from existing channels (especially organic) without increasing the total conversion volume, the new channel is likely cannibalizing existing demand rather than creating new, incremental demand.

- Hacked “Charity Ad” Study: This is an ingenious, low-cost method to approximate a user-level lift study within a single campaign. The technique involves adding a “placebo” ad to a campaign’s creative rotation. This ad should be for an unrelated charity or a public service announcement (PSA). Since this ad has no connection to the brand’s product, any conversions that are attributed to it by the ad platform’s tracking pixel represent the baseline conversion rate of users who would have converted anyway, regardless of the ad they saw. This provides a rough but useful estimate of the non-incremental portion of the campaign’s reported conversions, allowing for a more realistic calculation of its true impact.
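Turning the placebo ad's numbers into an estimate takes one step: treat the PSA ad's conversion rate as the baseline and scale it to the brand ad's delivery. The sketch below assumes both ads were served to comparable audiences within the same campaign and that conversions are counted the same way for both; the function name and counts are hypothetical.

```python
def psa_adjusted(brand_impressions: int, brand_conversions: int,
                 psa_impressions: int, psa_conversions: int) -> dict:
    """Split brand-ad conversions into baseline and incremental using a placebo (PSA) ad.

    The PSA ad's conversion rate stands in for users who would have converted anyway.
    A negative incremental estimate means no measurable lift over the placebo.
    """
    if brand_impressions <= 0 or psa_impressions <= 0 or brand_conversions <= 0:
        raise ValueError("impressions and brand conversions must be positive")
    # Conversions the brand ad's audience would have produced at the placebo rate.
    baseline = psa_conversions * brand_impressions / psa_impressions
    incremental = brand_conversions - baseline
    return {
        "baseline_conversions": baseline,
        "incremental_conversions": incremental,
        "incremental_share": incremental / brand_conversions,
    }


# Hypothetical campaign: the PSA ad received 10% as many impressions as the brand ad.
result = psa_adjusted(brand_impressions=200_000, brand_conversions=900,
                      psa_impressions=20_000, psa_conversions=60)
print(result)
# {'baseline_conversions': 600.0, 'incremental_conversions': 300.0, 'incremental_share': 0.3333333333333333}
```

In this made-up case, only about a third of the conversions the platform reports would be credited to the brand message itself.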

A common thread among these practical methods is that the most powerful and actionable insights for resource-constrained businesses often come from experiments designed to turn things off. While traditional A/B testing focuses on optimizing existing campaigns (e.g., testing a new headline), the biggest efficiency gains are frequently found by identifying and eliminating waste. The first and most important question for a small business is not “How can we improve our retargeting campaign?” but rather, “What would happen if we paused our retargeting campaign for a week?” This approach directly challenges core assumptions and has a much higher potential to unlock significant budget for reallocation to more impactful activities.
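A pause test like this reduces to comparing average daily totals across periods. The sketch below treats the pre-pause and post-reactivation weeks together as the "channel on" baseline, reports the estimated daily conversions lost while the channel was off, and derives an incremental CPA. The daily figures and the $500 daily spend are hypothetical, and the method inherits the weakness of any time-based test: it cannot separate the channel's effect from anything else that changed during the pause.

```python
from statistics import fmean


def holdout_estimate(before: list[float], paused: list[float], after: list[float],
                     daily_spend: float) -> dict:
    """Estimate a channel's incremental daily conversions from an on/off/on holdout.

    Inputs are TOTAL daily conversions (paid + organic), not platform-attributed ones.
    daily_spend is the channel's typical spend per day while it is running.
    """
    on_avg = fmean(before + after)  # baseline while the channel runs
    off_avg = fmean(paused)         # baseline while the channel is paused
    incremental_per_day = on_avg - off_avg
    return {
        "on_avg": on_avg,
        "off_avg": off_avg,
        "incremental_per_day": incremental_per_day,
        "incremental_share": incremental_per_day / on_avg,
        # Incremental CPA: spend per conversion the channel actually caused.
        "icpa": daily_spend / incremental_per_day if incremental_per_day > 0 else float("inf"),
    }


# Hypothetical one-week periods of total daily conversions.
before = [120, 118, 125, 122, 119, 121, 124]
paused = [110, 108, 112, 109, 111, 107, 113]
after = [121, 123, 119, 122, 120, 125, 118]
estimate = holdout_estimate(before, paused, after, daily_spend=500.0)
print({k: round(v, 2) for k, v in estimate.items()})
```

Bracketing the pause with "on" periods on both sides helps separate a genuine drop from a trend that was already under way.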

3.3 Avoiding Common Pitfalls in DIY Experimentation

Conducting DIY experiments is fraught with potential challenges that can lead to misleading conclusions. Awareness of these pitfalls is the first step toward mitigation.

- Lack of Expertise & Inherent Bias: DIY marketers often lack formal training in experimental design and statistical analysis, which can lead to flawed methodologies, biased sampling, or incorrect interpretation of results.

  Mitigation: Adhere strictly to a predefined experimentation framework (as outlined in Section 3.1). Before launching a test, pre-commit in writing to the exact analysis plan, KPIs, and success criteria. This prevents “p-hacking” or shifting the goalposts after seeing the initial results.

- Insufficient Data & Statistical Significance: Small businesses typically have lower conversion volumes, making it difficult to collect enough data to achieve statistically significant results in a short timeframe.

  Mitigation: Run tests for longer durations to accumulate a larger sample size. For directional insights, consider using higher-volume, top-of-funnel metrics (e.g., newsletter sign-ups) as a proxy for lower-volume, bottom-of-funnel metrics (e.g., purchases). It is also important to move beyond a binary view of statistical significance. A result with 65% confidence is not definitive proof, but it may still be a worthwhile bet if the potential upside is large and the downside risk is minimal.

- Inconsistent Execution and Documentation: For many small business owners, marketing is one of many responsibilities, which can lead to inconsistent test execution, poor monitoring, and a failure to document learnings.

  Mitigation: Break down experimentation tasks into small, manageable steps that can be integrated into a regular routine. Use simple, accessible tools like a shared Google Doc or spreadsheet to create a central repository for all experiment documentation, including the hypothesis, setup details, raw data, and key takeaways.

- Ignoring Confounding Variables: DIY tests are particularly vulnerable to being skewed by external factors like seasonality, holidays, competitor promotions, or even macroeconomic shifts.

Mitigation: Avoid running tests during periods of high volatility, such as major holidays or promotional sales, unless the test is specifically designed to measure the impact of those events. Always analyze test results in the context of historical data to understand the normal baseline performance and seasonal trends. Acknowledge these limitations and treat the results as strong directional indicators rather than absolute scientific proof.
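Two of these pitfalls, insufficient data and a binary reading of significance, become concrete with standard two-proportion formulas. The sketch below uses the normal approximation and only Python's standard library. The function names are hypothetical. The "confidence" it reports follows one common definition: one minus the one-sided p-value that the test group beats control. A given ad platform or testing tool may compute its own figure differently.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1


def sample_size_per_group(base_rate: float, relative_lift: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in EACH group to detect `relative_lift`
    over `base_rate` with a two-sided two-proportion test (normal approximation)."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = _STD_NORMAL.inv_cdf(power)           # quantile for desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def confidence_test_beats_control(conv_t: int, n_t: int,
                                  conv_c: int, n_c: int) -> float:
    """One-sided 'confidence' that the test group's conversion rate exceeds
    the control's: 1 minus the one-sided p-value of a two-proportion z-test."""
    p_t, p_c = conv_t / n_t, conv_c / n_c
    se = math.sqrt(p_t * (1 - p_t) / n_t + p_c * (1 - p_c) / n_c)
    if se == 0:
        raise ValueError("standard error is zero; rates of exactly 0 or 1 are not supported")
    return _STD_NORMAL.cdf((p_t - p_c) / se)


# Hypothetical inputs: 2% baseline conversion rate, aiming to detect a 10% relative lift.
needed = sample_size_per_group(base_rate=0.02, relative_lift=0.10)
# Hypothetical result: 105 vs. 100 conversions on 5,000 users per group.
conf = confidence_test_beats_control(conv_t=105, n_t=5000, conv_c=100, n_c=5000)
print(f"users per group: {needed:,}")
print(f"confidence test beats control: {conf:.0%}")
```

At a 2% baseline, detecting a 10% relative lift at conventional settings (5% significance, 80% power) needs roughly 80,000 users per group. This explains why small businesses often need longer tests or higher-volume proxy metrics. Conversely, 105 versus 100 conversions on 5,000 users each comes out at roughly 64% confidence, the kind of suggestive but inconclusive reading described in the pitfall above.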

3.4 Choosing the Right Tools for the Job

While a disciplined mindset is the most important tool, several platforms can help small agencies execute and analyze incrementality tests more effectively. The key is a crawl-walk-run approach: start with free tools and invest in more sophisticated ones only as ad spend and analytical maturity grow.

- Starting Point (Free): The most accessible option is the native lift study tools offered by Google and Meta. Although their walled-garden nature limits transparency and introduces potential bias, they are free to use and provide a valuable, hands-on introduction to the concepts of test and control groups. Pair them with a free analytics platform such as Google Analytics to monitor overall baseline metrics.

- Affordable Platforms for SMBs: As a business’s ad spend and analytical maturity grow, it may be worthwhile to invest in a dedicated measurement platform. The market offers several solutions tailored to the needs and budgets of small to medium-sized businesses. Platforms like Recast (which offers an open-source option), Rockerbox, and Haus are frequently cited as agile and affordable solutions for digital-first brands and startups. Other tools, like Stella, are explicitly positioned as low-cost alternatives to expensive enterprise measurement firms, making advanced insights more accessible.

- When to Outsource or Build: For businesses with an annual ad spend exceeding the $3-5 million threshold, the complexity of the media mix and the potential value of optimization often justify a more significant investment in measurement. At this stage, it becomes viable to either engage a specialized measurement partner or begin building a small in-house analytics team. For the vast majority of small businesses, however, the focus should remain on mastering low-cost DIY methods and leveraging affordable, accessible tools.

Conclusion

The transition from correlation-based attribution to causal incrementality measurement represents one of the most significant and necessary evolutions in modern marketing. In an environment defined by economic uncertainty, data privacy constraints, and the opacity of walled gardens, proving the true, causal value of advertising is no longer a luxury but a fundamental requirement for sustainable growth.

This report has demonstrated that traditional metrics like ROAS, while simple to calculate, can be profoundly misleading. By failing to distinguish between conversions that are caused by advertising and those that are merely correlated with it, these metrics can lead to the systematic misallocation of resources toward channels that cannibalize organic demand rather than create new value. Incrementality testing, rooted in the scientific principles of controlled experimentation, provides the necessary antidote. By comparing the outcomes of a group exposed to advertising with a control group that is not, marketers can isolate and estimate the incremental lift generated by their efforts.
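The following worked example illustrates that comparison. The notation is introduced here, not taken from the report. Let CR_T and CR_C be the conversion rates of the exposed (test) and holdout (control) groups, and let N_T be the size of the test group. The example uses hypothetical rates of 2.5% and 2.0% and 100,000 exposed users:

```latex
\begin{aligned}
\text{Lift} &= \frac{CR_T - CR_C}{CR_C} = \frac{0.025 - 0.020}{0.020} = 0.25 \;(25\%) \\
\text{Incremental conversions} &= (CR_T - CR_C)\, N_T = 0.005 \times 100{,}000 = 500 \\
\text{Total test-group conversions} &= CR_T \, N_T = 0.025 \times 100{,}000 = 2{,}500
\end{aligned}
```

Of the 2,500 conversions in the test group, only 500 (20%) are incremental. The remaining 80% would have occurred without the ads, which mirrors the app-install example in Section 1.1.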

The methodologies for achieving this vary in their precision, cost, and complexity. Randomized Controlled Trials (RCTs) remain the scientific gold standard, offering unparalleled accuracy but facing practical limitations. Geo-experiments provide a scalable solution for measuring broad, channel-level impact but demand careful design to mitigate the significant risks of contamination and low statistical power. Advanced techniques like Ghost Ads offer a highly precise measurement framework for programmatic environments, while platform-native lift studies provide an accessible, if imperfect, entry point into causal analysis. The choice of method is a strategic one, dictated by the specific business question, the channels under investigation, and the advertiser’s resources.

Crucially, the power of incrementality is not reserved for enterprises with large data science teams. By adopting a disciplined experimentation framework—starting with a clear hypothesis, defining meaningful KPIs, and rigorously documenting results—small agencies and businesses can implement low-cost, practical tests. Simple but powerful methods, such as time-based holdouts and the analysis of natural experiments, can uncover significant inefficiencies and unlock substantial budget for more productive investments. For these organizations, the ultimate goal is not to achieve perfect statistical certainty in every test, but to foster a culture of curiosity, critical thinking, and continuous, evidence-based improvement. By consistently asking “What would have happened otherwise?”, marketers can move beyond the attribution trap and begin to measure what truly matters: the incremental growth they deliver to the business.