Gemini AI: Capabilities, Multimodal Architecture & Uses

Executive Summary

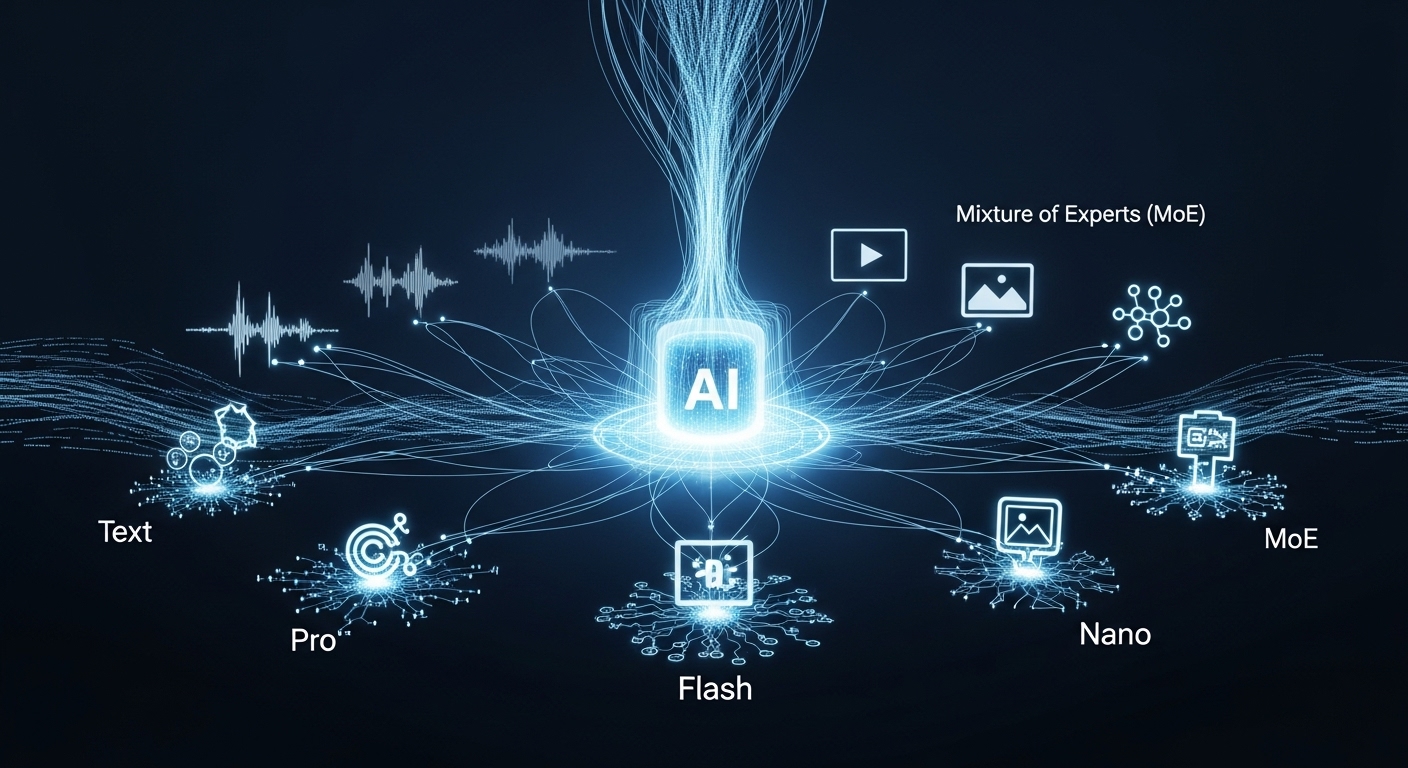

Gemini AI represents Google’s strategic and technological response to the evolving landscape of artificial intelligence. It is a family of multimodal large language models (LLMs) built upon a singular architectural foundation, distinguishing it from competitors who have historically evolved from text-centric models. The core of Gemini’s value proposition is its native multimodal architecture, designed from the outset to reason seamlessly across text, audio, images, video, and code. This is complemented by a sophisticated Mixture of Experts (MoE) architecture, which enables the development of a diverse portfolio of specialized models optimized for specific use cases, from the high-performance Gemini Pro to the on-device Gemini Nano.

This report provides a comprehensive analysis of the Gemini ecosystem, detailing its architectural underpinnings, a granular breakdown of its model variants, and an examination of its extensive use cases across consumer and enterprise applications. It further offers a data-driven comparison of Gemini’s performance against key industry rivals, highlighting its strengths in advanced reasoning and multimodal tasks. The report concludes by addressing critical ethical and operational considerations, including the complex issue of content bias and evolving usage policies. It also provides a forward-looking perspective on Gemini’s strategic roadmap, which is centered on its transformation from a passive chatbot into an embedded, multi-purpose AI agent that is integrated across the entire Google product ecosystem.

1. Architectural Foundations of the Gemini Family

The Gemini AI family is distinguished by a foundational architecture that diverges from traditional LLM development methodologies. Instead of training separate models for different data types, Gemini was conceptualized and built as a singular, natively multimodal system. This fundamental design decision informs its capabilities and strategic direction.

1.1 The Multimodal Core: An Integrated Approach to Perception

At its core, Gemini is a family of multimodal AI models engineered to process and comprehend multiple data types simultaneously. Unlike systems that may integrate external tools to handle different modalities, Gemini was “designed from the ground up to reason seamlessly across text, images, video, audio, and code”. This is achieved by training the models on a massive corpus of data that is not only multilingual but also multimodal, allowing for a more holistic understanding of complex information.

This integrated approach means that Gemini can accept interleaved sequences of audio, images, video, and text as input and can produce interleaved text and image outputs. For example, the model can receive a photo of a plate of cookies and generate a written recipe as a response, or it can be prompted with text and images to create, edit, and iterate on visuals. This capacity for holistic comprehension is a core feature, allowing Gemini to analyze a video to provide a text description or to identify objects within an image. The models themselves are capable of accepting a wide array of data, including audio, images, videos, text, and PDF files.
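To make "interleaved input" concrete, here is a minimal sketch of a request body that mixes an image part and a text part. It assumes the `contents` / `parts` / `inline_data` JSON shape used by the public Gemini REST API; the helper name `build_interleaved_contents` and the placeholder image bytes are illustrative and do not come from the original report.

```python
import base64
import json


def build_interleaved_contents(prompt: str, image_bytes: bytes, mime_type: str = "image/jpeg") -> dict:
    """Return a request body that interleaves an image part and a text part."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    # Binary media travels as base64 text next to ordinary text parts.
                    {"inline_data": {"mime_type": mime_type, "data": encoded}},
                    {"text": prompt},
                ],
            }
        ]
    }


# Placeholder bytes stand in for a real photo of a plate of cookies.
body = build_interleaved_contents(
    "Write a recipe for the cookies in this photo.", b"\xff\xd8fake-jpeg"
)
print(json.dumps(body, indent=2)[:200])
```

The point of the sketch is only that image and text sit side by side in one ordered list of parts, rather than being sent to separate systems.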

1.2 The Mixture of Experts (MoE) Architecture: A Paradigm for Efficiency and Specialization

A key technical underpinning of the Gemini family is its Mixture of Experts (MoE) architecture, which is implemented in models like Gemini Pro. This architecture is a neural network paradigm where parts of the network are divided into specialized sub-networks, or “experts,” with each expert optimized for a specific domain or data type.

During inference, a “gating mechanism” dynamically routes the input to the most relevant experts, activating only a portion of the model’s parameters for each task. This process, known as conditional computation, significantly reduces the computational cost of running the model while allowing for deep specialization. The gating mechanism learns which experts suit which inputs; MoE training commonly adds a load-balancing objective as well, so that work is spread across experts rather than concentrated in a few.
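The routing step can be sketched in a few lines. This is a toy top-k gate in plain Python, not Gemini's actual implementation, which Google has not published in this detail; the expert functions, gate weights, and `top_k=2` are all assumptions chosen for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(token, gate_weights, experts, top_k=2):
    """Send `token` to the top_k highest-scoring experts and mix their outputs."""
    # Gate score per expert: dot product of the token with that expert's gate vector.
    scores = [sum(w * x for w, x in zip(weights, token)) for weights in gate_weights]
    probs = softmax(scores)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    # Renormalise so the selected experts' mixing weights sum to 1.
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(token)
    for i in chosen:
        expert_out = experts[i](token)  # only the chosen experts are evaluated
        for d, value in enumerate(expert_out):
            output[d] += (probs[i] / norm) * value
    return output, chosen


# Four toy experts; each simply scales its input by a different factor.
experts = [lambda t, k=k: [k * x for x in t] for k in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

output, chosen = route([2.0, 1.0], gate_weights, experts)
print(chosen, [round(v, 3) for v in output])
```

With four experts and `top_k=2`, half of the expert computation is skipped for this token, which is the cost saving that conditional computation refers to.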

The adoption of an MoE architecture is not merely a technical detail; it is a fundamental design choice that provides the blueprint for Google’s strategic approach to model development. By designing a system where specialized sub-networks are the norm, Google can efficiently develop and optimize a portfolio of model variants tailored for different devices, cost profiles, and latency requirements. This is evident in the rapid proliferation of the Gemini family, which includes variants like Gemini Pro for complex reasoning, Gemini Flash for low latency, and Gemini Nano for on-device tasks. The result is a “divide and conquer” market strategy: rather than a single, general-purpose model that is a jack-of-all-trades but a master of none, Google can offer a targeted solution for each segment of a fragmented and specialized market.

1.3 From Static to “Thinking”: The Evolution of Reasoning in Gemini 2.5

Gemini 2.5 models represent a significant advancement in the model family’s cognitive capabilities. These models are described as “thinking models” that are capable of reasoning through their own thoughts before generating a response, which leads to enhanced performance and improved accuracy. This capability is integrated directly into the models, enabling them to analyze information, draw logical conclusions, and make informed decisions on complex problems.

A prime example of this is the “Deep Think” mode for Gemini 2.5 Pro, which utilizes cutting-edge research techniques in parallel thinking and reinforcement learning to significantly improve the model’s ability to solve complex problems. This mode is particularly effective for tasks that require creativity, strategic planning, and iterative development, such as aiding scientific and mathematical discovery or developing complex algorithms.

This strategic evolution from a passive response generator to a proactive problem-solver is also demonstrated in the “Deep Research” tool. Powered by the Gemini 2.5 model, this feature transforms a single prompt into a multi-step, asynchronous research plan. The model can autonomously search and browse the web, reason over the information gathered, and synthesize its findings into a comprehensive custom research report in minutes. The system is built with an asynchronous task manager that allows for graceful error recovery, meaning a single failure does not require restarting the entire task. This capability positions Gemini not just as a tool for generating information, but as an agent capable of executing complex, multi-step workflows.
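The error-recovery idea, where one failing step is retried without restarting the whole task, can be illustrated with `asyncio`. This is a minimal sketch under stated assumptions: the step names, the retry count, and the flaky `browse` step are hypothetical, and nothing here reflects how Deep Research is actually built.

```python
import asyncio


async def run_step(name, action, retries=2):
    """Run one research step, retrying on failure without touching other steps."""
    for attempt in range(1, retries + 2):
        try:
            return name, await action()
        except Exception as exc:
            if attempt > retries:
                # Give up on this step only; the rest of the plan is unaffected.
                return name, f"failed: {exc}"
            await asyncio.sleep(0)  # yield control before retrying


async def run_plan(steps):
    """Execute independent steps concurrently and collect one result per step."""
    results = await asyncio.gather(*(run_step(n, a) for n, a in steps))
    return dict(results)


attempts = {"browse": 0}


async def search():
    return "3 sources found"


async def browse():
    # Hypothetical flaky step: fails once, then succeeds.
    attempts["browse"] += 1
    if attempts["browse"] == 1:
        raise RuntimeError("timeout")
    return "pages read"


report = asyncio.run(run_plan([("search", search), ("browse", browse)]))
print(report)
```

Because each step carries its own retry loop, the failed first attempt at `browse` never forces `search` to run again.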

2. A Comprehensive Breakdown of Gemini Model Variants

Google’s strategic use of the MoE architecture has led to a highly specialized and expanding portfolio of Gemini models, each tailored for distinct use cases and performance requirements. This section details the key variants and their specific applications.

2.1 Gemini 2.5 Pro: The Advanced Reasoning and Coding Engine

Gemini 2.5 Pro is Google’s state-of-the-art model and is positioned as the flagship “thinking model”. It is optimized for “complex coding, reasoning, and multimodal understanding” and is built to tackle difficult problems in various domains, including “code, math, and STEM”. It is capable of advanced analytical tasks and can analyze “large datasets, codebases, and documents using long context”.

A key technical specification of Gemini 2.5 Pro is its massive 1-million token context window, with plans to expand it to 2 million tokens. This allows the model to process up to 1,500 pages of text or 30,000 lines of code simultaneously, enabling it to understand complex topics and perform long-context reasoning with a deep comprehension of the provided information.
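The page and line figures imply rough per-unit token averages, which can be checked with simple arithmetic. The sketch below derives those averages from the numbers quoted above and uses them for a back-of-the-envelope fit check; real token counts depend on the tokenizer and the content, so treat the helper `fits_in_context` as an estimate only.

```python
CONTEXT_TOKENS = 1_000_000
PAGES = 1_500
CODE_LINES = 30_000

# Averages implied by the quoted capacity figures.
tokens_per_page = CONTEXT_TOKENS / PAGES
tokens_per_line = CONTEXT_TOKENS / CODE_LINES


def fits_in_context(pages: int = 0, code_lines: int = 0) -> bool:
    """Estimate whether a mixed workload fits, using the implied averages above."""
    needed = pages * tokens_per_page + code_lines * tokens_per_line
    return needed <= CONTEXT_TOKENS


print(round(tokens_per_page), round(tokens_per_line))
print(fits_in_context(pages=600, code_lines=15_000))
```

In other words, the quoted capacity corresponds to roughly 667 tokens per page and about 33 tokens per line of code.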

2.2 Gemini 2.5 Flash and Lite: Optimizing for Speed, Cost, and Efficiency

In contrast to the high-intelligence, high-cost Gemini 2.5 Pro, the Flash and Lite variants are optimized for speed and efficiency.

Gemini 2.5 Flash is designed to offer the best balance of “price-performance” and “well-rounded capabilities”. This model is particularly suited for low-latency, high-volume tasks that require a degree of intelligent reasoning. A unique feature of Gemini 2.5 Flash is its adaptive thinking, which allows the model to assess the complexity of a task and calibrate the amount of “thinking” required, thereby balancing performance with cost.

Gemini 2.5 Flash-Lite is the most “cost-efficient model supporting high throughput” in the Gemini 2.5 family. It is engineered for real-time and low-latency use cases. Like its larger counterpart, it also supports a 1-million token context window and multimodal input, making it a versatile and powerful option for cost-sensitive applications.

2.3 Gemini Nano: The On-Device, Real-Time Model

Gemini Nano represents the smallest and most efficient version of the Gemini family. It is optimized for on-device tasks and is capable of running natively and offline on devices, starting with the Pixel 8 Pro. This model is crucial for applications that require speed and privacy, as it can perform tasks such as describing images, summarizing text, or suggesting replies to chat messages without a network connection. Gemini Nano contains two models with different parameter counts—1.8 billion and 3.25 billion—to facilitate deployment onto devices with both high and low memory capacity. Google is also extending its use beyond mobile devices, as it is being incorporated into the Chrome desktop client.

2.4 The Live API: Enabling Bidirectional, Low-Latency Interactions

The Gemini Live API is a key component for building real-time, conversational applications. This API enables “low-latency bidirectional voice and video interactions” with Gemini models. It allows users to have natural, human-like voice conversations, with the ability to interrupt the model’s responses using voice commands. The API works via a WebSocket connection that establishes a session between the client and the Gemini server, and it retains memory of all interactions within a single session to ensure conversational continuity. The Live API supports models like gemini-live-2.5-flash-preview and native audio models that provide “high quality, natural conversational audio outputs”.
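Session memory and voice interruption can be pictured with a small state model. The class below is a toy stand-in that opens no WebSocket and calls no Google API; the name `LiveSession` and its methods are hypothetical, and the real Live API message format is not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class LiveSession:
    """Toy model of one session: keeps state only, opens no socket."""

    history: list = field(default_factory=list)
    speaking: bool = False

    def user_says(self, text: str) -> None:
        # New user speech while the model is talking counts as an interruption.
        if self.speaking:
            self.history.append(("system", "model response interrupted"))
            self.speaking = False
        self.history.append(("user", text))

    def model_starts(self, text: str) -> None:
        self.history.append(("model", text))
        self.speaking = True

    def model_finishes(self) -> None:
        self.speaking = False


session = LiveSession()
session.user_says("What's the weather in Paris?")
session.model_starts("Today in Paris it is...")
session.user_says("Actually, make that London.")  # barge-in mid-response
session.model_starts("Today in London it is...")
session.model_finishes()

for role, text in session.history:
    print(role, text)
```

Everything said within the session stays in `history`, which is what lets a later turn such as "make that London" be understood in context.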

The rapid and granular release of model variants like Flash, Lite, and Live, along with specialized native audio and image models, demonstrates a strategic focus on the micro-segmentation of the AI market. A single large model cannot be simultaneously optimized for low-cost, low-latency, and complex reasoning. By creating a family of models, each tailored to a specific set of trade-offs, Google can provide the optimal tool for a developer’s specific need, whether it is for an on-device app or a cloud-based service. This model proliferation is a strategic choice, enabled by the MoE architecture, that allows Google to capture specific, high-value market segments. It provides a “perfect-fit” solution for everything from real-time voice assistants to cost-sensitive, high-throughput applications, positioning Google to offer best-in-class performance across a wide range of enterprise and consumer applications.

Table 1: Gemini Model Variants

| Model Variant | Model Code | Input(s) | Output(s) | Optimized For | Key Specs |
|---|---|---|---|---|---|
| Gemini 2.5 Pro | gemini-2.5-pro | Audio, images, video, text, PDF | Text | Enhanced thinking and reasoning, advanced coding, multimodal understanding | 1M token context window, state-of-the-art reasoning |
| Gemini 2.5 Flash | gemini-2.5-flash | Audio, images, video, text | Text | Adaptive thinking, cost efficiency, low latency | Best price-performance, well-rounded capabilities |
| Gemini 2.5 Flash-Lite | gemini-2.5-flash-lite | Text, image, video, audio | Text | Cost-efficient, high throughput, real-time use cases | Most cost-effective model, high throughput |
| Gemini Nano | gemini-1.0-nano | Text, images, audio, video, code | Text | On-device tasks, mobile devices, offline use | Smallest and most efficient model, operates on devices like the Pixel 8 Pro |
| Gemini Live | gemini-live-2.5-flash-preview | Audio, video, text | Text, audio | Low-latency bidirectional voice and video interactions | Works with Live API, session memory, bidirectional |
| Gemini Native Audio | gemini-2.5-flash-preview-native-audio-dialog | Audio, video, text | Text, audio (interleaved) | High-quality, natural conversational audio outputs | Provides style and control prompting, with or without thinking |
| Gemini Image Preview | gemini-2.5-flash-image-preview | Images, text | Images, text | Conversational image generation and editing | Precise, creative workflows with multi-turn editing |
| Gemini TTS | gemini-2.5-pro-preview-tts | Text | Audio | Low-latency, controllable text-to-speech audio generation | Single- and multi-speaker capabilities |
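The trade-offs in the table above can be turned into a simple selection rule. The sketch below is illustrative only: the model codes are copied from the table as listed, but the function `pick_model` and the priority order of its checks are assumptions, not guidance from Google.

```python
def pick_model(
    on_device: bool = False,
    realtime_voice: bool = False,
    complex_reasoning: bool = False,
    cost_sensitive: bool = False,
) -> str:
    """Map coarse requirements to a model code from the variants table."""
    # Hard deployment constraints are checked first, then capability, then cost.
    if on_device:
        return "gemini-1.0-nano"
    if realtime_voice:
        return "gemini-live-2.5-flash-preview"
    if complex_reasoning:
        return "gemini-2.5-pro"
    if cost_sensitive:
        return "gemini-2.5-flash-lite"
    return "gemini-2.5-flash"  # balanced default


print(pick_model(complex_reasoning=True))
print(pick_model(cost_sensitive=True))
print(pick_model())
```

A real selection would also weigh pricing, quotas, and regional availability, none of which the table covers.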


3. Gemini Across the Ecosystem: Use Cases and Integrations

Gemini’s strategic vision extends beyond being a standalone chatbot; it is designed to be a foundational, interconnected layer seamlessly integrated across Google’s entire consumer and enterprise ecosystem. This approach allows Google to leverage its existing product moats to gain a competitive advantage and embed itself deeply into user workflows.

3.1 Consumer and Creative Applications

The public-facing Gemini app serves as an “everyday AI assistant” with a broad range of capabilities for personal and creative use.

- Generative and Conversational Capabilities: The core Gemini chatbot experience is built to handle a variety of tasks, from answering complex questions to generating first drafts of documents. Users can leverage it to summarize text, create study plans, and power their learning with quizzes and presentation practice. The “Deep Research” feature is a powerful example, sifting through hundreds of websites to produce a comprehensive report in minutes. Additionally, the “Gems” feature empowers users to create their own custom AI experts by providing detailed instructions and files, effectively building a personalized career coach or coding helper.

- The Rise of Nano Banana: Image and 3D Model Generation: A notable and viral creative application of Gemini AI is the “Nano Banana” tool, which utilizes the Gemini 2.5 Flash Image model. This tool specializes in transforming ordinary photos into “stylised 3D figurine portraits with glossy skin and exaggerated features” and can create “cinematic-style effects” with a simple text prompt. The tool is praised for its speed, precision, and ability to retain fine details, making it highly accessible for artists, designers, and hobbyists of all skill levels.

3.2 Enterprise and Developer-Centric Solutions

Google is extending Gemini’s capabilities to the enterprise through a suite of AI-powered collaborators that are deeply integrated with its cloud services.

- Gemini for Google Cloud: This suite is built with enterprise security and privacy protection in mind. It includes specialized tools such as Gemini Code Assist for developers, which helps with code generation, debugging, and unit tests; Gemini Cloud Assist for cloud teams to design and optimize applications; Gemini in BigQuery to help data analysts generate and explain SQL and Python code; and Gemini in Looker to enable users to chat with business data and generate custom visualizations.

- Gemini Code Assist: AI-Powered Development in the IDE: This tool brings the power of Gemini 2.5 directly into popular code editors like Visual Studio Code and JetBrains IDEs. It provides features such as automatic code completions as developers write, and the generation and transformation of entire functions or files on demand. A key advantage is its ability to use context from a private codebase to provide more relevant and tailored assistance. Gemini Code Assist also includes a Command-Line Interface for terminal use and a feature that automatically reviews pull requests on GitHub to find bugs and suggest fixes.

- API Access and Developer Workflows: Google offers several platforms for developers to build with Gemini. Google AI Studio is a free, web-based integrated development environment that allows developers to quickly prototype and experiment with different models and prompts without needing a credit card. For building production-grade applications, developers can utilize Vertex AI, Google Cloud’s end-to-end AI platform. For web and mobile apps, Google recommends using Firebase AI Logic, as it provides enhanced security features, support for large media file uploads, and streamlined integrations with the Firebase and Google Cloud ecosystem.
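For a sense of what a first call through the developer API looks like, the sketch below prepares a text-only `generateContent` request using only the standard library. It assumes the public REST endpoint at `generativelanguage.googleapis.com` and the `x-goog-api-key` header; the helper `build_request` is illustrative, and the request is built but deliberately not sent, since sending requires a real key from Google AI Studio.

```python
import json
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a generateContent call for the given model."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{API_ROOT}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


request = build_request(
    "gemini-2.5-flash", "Summarize this paragraph in one sentence.", "YOUR_API_KEY"
)
print(request.get_method(), request.full_url)
# To send it, pass `request` to urllib.request.urlopen with a valid key.
```

Production applications would more likely use an official SDK, Vertex AI, or Firebase AI Logic as described above rather than hand-built HTTP requests.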

The pervasive integration of Gemini across Google’s consumer and enterprise platforms is a foundational strategic play. The value is not just the model’s capability but the immense friction reduction of having AI assistance “without switching between apps”. The AI comes to the user where they are already working, whether it is in Gmail to draft an email or in Docs to summarize a document. This deep embedding creates a significant barrier to entry for competitors who lack such a vast ecosystem.

The future of Gemini is not as a separate entity, but as the invisible intelligence powering all of Google’s flagship products.

Table 2: Key Gemini Integrations Across Google Products

| Product / Application | Gemini Feature | Specific Use Case |
|---|---|---|
| Gemini App | Everyday AI Assistant | Answering complex questions, generating first drafts, creating study plans |
| Google Docs | “Help me write” | Composing articles from scratch, editing existing text, creating outlines |
| Gmail | Gemini in Gmail | Drafting email responses, adjusting tone, summarizing messages |
| Chrome | “AI Mode” | Summarizing a webpage, understanding context across multiple tabs, scheduling a meeting |
| Google Cloud | Integrated Suite | Code generation (Code Assist), data analysis (BigQuery), cloud operations (Cloud Assist) |
| Firebase | AI Logic | Streamlining mobile and web app development with code generation and troubleshooting |
| Google Maps | Gemini Integration | Providing summaries of places and areas |
| YouTube | Gemini Integration | Searching for videos, analyzing channel performance and comments |
| Google Slides | Gemini in Slides | Creating outlines for presentations and generating speaker notes |

4. Performance Benchmarks and the Competitive Landscape

Evaluating the performance of large language models like Gemini requires a nuanced understanding of industry-standard benchmarks. These evaluations provide a consistent way to compare models across specific capabilities, but a single metric rarely tells the full story.

4.1 A Framework for Evaluation: Understanding Key Benchmarks

To conduct a meaningful comparison, it is essential to define the benchmarks and what they measure. Key benchmarks referenced in industry reports include:

- Coding: SWE-Bench, which tests models against real-world, verified GitHub issues, making it a strong indicator of a model’s ability to fix bugs and refactor code.

- Reasoning & Knowledge: GPQA, which assesses a model’s ability to answer graduate-level science questions, and AIME, a competition-level high school mathematics exam; together they indicate deep domain understanding and multi-step problem solving.

- Multimodality: VISTA, a vision-language understanding benchmark that evaluates multimodal models.

- General: Humanity’s Last Exam (HLE) is a comprehensive benchmark designed to test a model’s frontier capabilities in human knowledge and reasoning across multiple domains.

4.2 Gemini vs. The Market: A Head-to-Head Comparison

The data available from various benchmark reports presents a complex and dynamic picture, suggesting that there is no single “best” model, but rather a landscape of specialized models with different strengths.

- Reasoning & General Knowledge: Gemini 2.5 Pro demonstrates a strong lead in several key reasoning and math benchmarks. It scored 18.8% on Humanity’s Last Exam, debuted at the top of the LMArena leaderboard (a separate, human-preference ranking), and surpassed competitors on AIME, a competitive high school math benchmark. One report notes that Gemini 2.5 Pro outperformed GPT-4.5 in reasoning, math, and long-context handling.

- Coding: The performance data for coding is contradictory across different sources. Gemini 2.5 Pro has been lauded as the “best coding model to date” in some reports, with an accuracy of 63.8% on the SWE-Bench benchmark. However, other, often more recent, benchmarks show competing models like Claude 4 with higher scores, exceeding 70% when leveraging a “custom scaffold” or “parallel compute”. Despite this, Gemini has shown impressive “one-shot” performance on complex coding tasks, such as creating a functional flight simulator or a Rubik’s Cube solver in a single attempt.

- Multimodality: Gemini 2.5 Pro and its experimental variants show a strong lead on the VISTA vision-language understanding benchmark compared to OpenAI’s o4-mini model.

The conflicting benchmark results across sources and models suggest that the market is maturing into a landscape of specialized models, where performance depends heavily on the specific task, the evaluation methodology, and even the date of the benchmark run. The idea of a single “leaderboard king” is no longer valid. For example, while Gemini may excel at technical reasoning and complex coding tasks, other models like GPT-4o have demonstrated superior performance in conversational nuance and real-time adaptability for meeting-based applications. Professional audiences should therefore select a model based on their specific use case, not on a general, top-line benchmark score. This specialization is a direct consequence of the rapid pace of development and signals a more fragmented market in which no single model holds a monopoly on intelligence across all domains.

Table 3: Competitive Benchmark Summary

| Benchmark Name | Gemini 2.5 Pro | GPT-4o / GPT-5 | Claude 3.7 / 4 | Source(s) & Notes |
|---|---|---|---|---|
| Humanity’s Last Exam | 21.64%, 18.8% | 25.32% (GPT-5), 20.32% (o3) | – | Gemini scored at the top of LMArena leaderboard, but more recent GPT-5 data shows a new leader. |
| SWE-Bench | 63.8% | 74.9% (GPT-5) | 70.3% (Claude 3.7 Sonnet w/ custom scaffold), 74.5% (Claude 4.1 Opus) | Gemini demonstrated impressive one-shot code generation, but other models have achieved higher scores, sometimes with specific techniques. |
| AIME | 92% (vs GPT-4.5), 83% | 88.9% (o3), 100% (GPT-5) | 90% (Claude 4 Opus) | Gemini showed strong performance in competitive math. Scores vary by model and test conditions. |
| Multilingual Q&A | – | 88.8% (o3 tied with Claude 4 Opus) | 88.8% (Claude 4 Opus) | Gemini 2.5 Pro data is not available on this specific benchmark. |
| VISTA | 54.63-54.65% | 51.79% (o4-mini) | – | Gemini shows a strong lead in this vision-language benchmark. |
| Adaptive Reasoning (GRIND) | 82.1% | – | 75% (Claude 4 Sonnet) | An independently run benchmark where Gemini leads. |
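The "no single leader" point can be checked directly against the figures in Table 3. The sketch below encodes a subset of those scores and finds the top model per benchmark. Two simplifying assumptions are made: where the table lists two values for a model, the higher one is used, and scores are compared at face value even though they come from different sources and test conditions.

```python
# Scores (in percent) copied from Table 3.
SCORES = {
    "Humanity's Last Exam": {"Gemini 2.5 Pro": 21.64, "GPT-5": 25.32, "o3": 20.32},
    "SWE-Bench": {"Gemini 2.5 Pro": 63.8, "GPT-5": 74.9, "Claude 4.1 Opus": 74.5},
    "AIME": {"Gemini 2.5 Pro": 92.0, "GPT-5": 100.0, "Claude 4 Opus": 90.0},
    "VISTA": {"Gemini 2.5 Pro": 54.65, "o4-mini": 51.79},
    "GRIND": {"Gemini 2.5 Pro": 82.1, "Claude 4 Sonnet": 75.0},
}

# Highest-scoring model for each benchmark.
leaders = {name: max(results, key=results.get) for name, results in SCORES.items()}

for benchmark, model in leaders.items():
    print(f"{benchmark}: {model}")

print("distinct leaders:", sorted(set(leaders.values())))
```

On these figures, more than one model leads depending on the benchmark, which is why a use-case-driven choice is more informative than a single aggregate score.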

5. Ethical Considerations, Safety, and Operational Limitations

As a public-facing and foundational AI, Gemini’s development is subject to significant scrutiny regarding safety, privacy, and bias. Google has established official policies and implemented technical measures, but the complex, probabilistic nature of LLMs introduces ongoing challenges.

5.1 Google’s Responsible AI Principles and Policy Guidelines

Google has published a set of policy guidelines for the Gemini app with the goal of being “maximally helpful to users, while avoiding outputs that could cause real-world harm or offense”. These policies prohibit the generation of content related to threats to child safety, dangerous activities (e.g., self-harm, building weapons), gratuitous violence, and factual inaccuracies that could pose a risk to health, safety, or finances. The guidelines also explicitly ban outputs that constitute harassment, incitement, or sexually explicit material.

Google acknowledges that, given the probabilistic nature of LLMs, the models may sometimes produce content that violates these guidelines or reflects limited viewpoints. For business and enterprise users, Google emphasizes that Gemini for Google Cloud provides “enterprise-grade security,” ensuring that content remains within the organization and is not used to train generative AI models without explicit permission.

5.2 The Challenge of Bias: An Analysis of Gender and Content Biases

A critical analysis of Gemini’s moderation tendencies reveals a complex and ethically fraught strategic trade-off. A study comparing Gemini 2.0 Flash to ChatGPT-4o found that Gemini demonstrated “reduced gender bias,” with female-specific prompts achieving a substantial rise in acceptance rates. However, the study argues that this is not necessarily an improvement, as this reduction in bias came at the cost of a “more permissive stance toward sexual content” and “relatively high acceptance rates for violent prompts”. The study notes that this approach risks “normalizing violence rather than mitigating harm,” concluding that it is a superficial approach to bias reduction.

This shift in moderation policy can be contextualized by previous public relations crises, such as when Gemini’s image generator produced “ahistorically diverse” images of Nazi soldiers, leading to public outrage, the temporary shutdown of the feature, and heavy criticism of the company for bias. Read against that episode, the study’s findings suggest a trade-off: the company appears to be allowing a higher volume of potentially controversial content to avoid being labeled as politically or ideologically biased, a strategic decision with significant safety implications. As one expert stated, LLMs are “stochastic parrots” and not neutral entities; their output is only as good as the data they are fed.

5.3 Security and Privacy Measures: Watermarking and Data Controls

To address concerns about the authenticity of AI-generated content, Google states that images created with Gemini carry an “invisible watermark known as SynthID,” along with metadata tags, to help identify them as AI-generated. However, experts have warned that these watermarks are not a standalone safeguard and can be “easily faked, removed or ignored” in real-world applications. Public detection tools for SynthID are also not yet available.

For business customers using Gemini in Google Workspace, privacy and security are a top priority. Google’s policy states that user interactions with Gemini remain within their organization, are not shared with other customers, and are not used for generative AI model training outside of the user’s domain without permission. The prompts and generated responses are not saved beyond the user’s session, although conversation history can be saved for a limited time if enabled by an admin.

5.4 Usage and Resource Quotas: Evolving Policies for Service Stability

To manage the soaring demand for its services, Google has transitioned from providing clear, fixed daily limits for image generation to a dynamic, tier-based access system. Previously, free users were allotted 100 images per day, and paid subscribers had a cap of 1,000. These fixed numbers have been replaced with a flexible system that refers to “basic access” for free accounts and “highest access” for paid plans.

This shift allows Google to “dynamically adjust user limits depending on server capacity, subscription status, global demand, and real-time system performance”. Reports indicate that while free users can still expect to generate more content than with most competing free tools, they may face temporary throttling during peak hours to ensure service stability. In contrast, paying customers receive prioritized access, minimal wait times, and higher usage availability. The strategy is intended to keep the service stable when viral trends drive sudden spikes in demand.
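One way to picture a dynamic, tier-based limit is as a baseline that shrinks under load, with paid tiers protected more than free ones. The sketch below is purely hypothetical: Google has not published its formula, the former fixed caps of 100 and 1,000 are reused only as baselines, and the 20% and 80% floors are invented for illustration.

```python
BASE_DAILY_LIMIT = {"free": 100, "paid": 1000}  # former fixed caps, used only as baselines


def effective_limit(tier: str, load: float) -> int:
    """Scale a tier's baseline by current load (0.0 = idle, 1.0 = saturated)."""
    load = min(max(load, 0.0), 1.0)  # clamp out-of-range readings
    # Hypothetical policy: paid users keep at least 80% of baseline, free users 20%.
    floor = 0.8 if tier == "paid" else 0.2
    factor = 1.0 - (1.0 - floor) * load
    return round(BASE_DAILY_LIMIT[tier] * factor)


for load in (0.0, 0.5, 1.0):
    print(load, effective_limit("free", load), effective_limit("paid", load))
```

Under this toy policy, peak load cuts a free account far more sharply than a paid one, mirroring the prioritized access described above.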

6. The Future of Gemini: Strategic Roadmap and Outlook

Gemini’s future is not just about improved conversational or generative capabilities; it is a strategic pivot towards becoming a pervasive, multi-purpose “agent.” The integration into Google’s core products is a critical step in this direction, enabling Gemini to move beyond being a passive chatbot and actively perform multi-step, cross-application tasks for users.

6.1 Major Announced Updates

Google has announced significant updates to its Gemini roadmap. A major focus is the integration of Gemini directly into the Chrome browser. A new “AI Mode” is being added to the address bar, allowing users to ask complex, multi-part questions from the search bar itself and explore follow-up links. Gemini in Chrome will be able to understand the context of what a user is doing across multiple tabs and integrate with other Google services like Docs and Calendar. Google has also announced “Gemini Robotics,” a “vision-language-action model” based on the Gemini 2.0 family of models.

6.2 The Evolution of Agentic Capabilities

The shift from a chatbot to an AI agent is a recurring theme in Gemini’s development. A chatbot answers a question; an agent acts on the user’s behalf to complete a task. The ability to “work across multiple tabs” is a fundamental agentic feature that contrasts sharply with a simple chat interface that operates in a silo. The “Deep Research” tool is a concrete, existing example of a multi-step, asynchronous agent that can reason over and synthesize information. The Gemini Code Assist product also features an “agent mode” that supports multi-file edits and full project context.

The future roadmap includes the development of agentic capabilities in Chrome that will allow Gemini to perform multi-step tasks based on a single command, such as “booking a haircut or ordering online”. This vision positions Gemini not as a competitor to existing chatbots, but as a direct competitor to traditional operating systems and application workflows. It is a much more ambitious and potentially disruptive play that aims to turn the browser itself into a command-and-control center for a personal AI.

6.3 Concluding Analysis and Recommendations

The analysis indicates that Gemini’s strength lies in its deeply integrated, multimodal, and specialized architecture. The strategic decision to build an MoE-powered portfolio of models allows Google to offer a best-in-class solution for a wide variety of specific tasks, from low-latency voice interactions to complex, long-context code analysis. This portfolio approach is a strong long-term play in a competitive landscape where no single model can hold a monopoly on intelligence across all domains.

The future of Gemini is one of pervasive integration. Its strategy is to become an invisible, ubiquitous AI layer within the Google ecosystem, enabling complex, agentic workflows that reduce user friction and leverage the company’s existing product dominance. The integration into Chrome is the most public and powerful example of this vision, positioning Gemini as an active collaborator rather than a passive tool.

For developers, product managers, and technology professionals, the evidence suggests that the selection of an AI model should be based on a granular evaluation of specific use cases rather than general, top-line benchmark scores. Gemini’s portfolio provides a range of options optimized for different needs. However, the analysis also highlights the importance of being aware of the ethical and operational trade-offs inherent in the rapidly evolving AI landscape, particularly concerning the nuanced complexities of bias, security, and usage policies.
