Future of Business IT Strategies: AI, Infrastructure & 2030

Executive Summary

The global business landscape stands at the precipice of a technological metamorphosis unprecedented in velocity and scale. As we traverse the latter half of the 2020s, the convergence of Agentic Artificial Intelligence (AI), distributed edge infrastructure, quantum possibilities, and the existential imperative of sustainability is rewriting the fundamental operating code of the enterprise. This report, The Future of IT in Business, provides an exhaustive strategic analysis of these forces. It moves beyond the superficial hype of “digital transformation”—a term now largely synonymous with the modernization efforts of the past decade—to explore the emerging paradigm of “Intelligent Orchestration.”

Our analysis, grounded in extensive market research and technical forecasting, suggests that by 2030, the distinction between “business strategy” and “IT strategy” will have completely evaporated. Information Technology will no longer be a support function or even an enabler; it will be the very fabric of the enterprise, acting with increasing autonomy. The novelty of Generative AI (GenAI), which defined the years 2023 and 2024, is rapidly ceding ground to the utility of Agentic AI—systems capable of autonomous planning, execution, and self-correction. Simultaneously, the monolithic centralization of cloud computing is fracturing into a distributed continuum, where processing power is pushed to the edge to support the latency demands of the Industrial Internet of Things (IIoT) and spatial computing.

However, this technological acceleration is not without its friction. It precipitates a crisis in energy consumption, with data center electricity demand projected to double by 2030, necessitating a radical shift toward Green IT and circular economy principles to avoid colliding with global climate goals. Furthermore, the security architecture of the modern enterprise is being forcibly rewritten. The traditional perimeter is obsolete; the new defense mechanism is Zero Trust 2.0, an AI-driven, continuous validation framework designed to counter increasingly sophisticated, machine-generated cyber threats.

This report dissects these trends with granular precision, offering a comprehensive roadmap for IT leaders, executives, and strategists. It explores how the integration of these technologies will not only automate tasks but redefine the concept of work itself, transitioning the workforce from operators of tools to orchestrators of agents.

Section 1: The AI Paradigm Shift – From Generative Tools to Agentic Autonomy

The evolution of Artificial Intelligence within the business context is transitioning from a phase of content generation to one of autonomous action. While the period between 2022 and 2024 was defined by the democratization of Large Language Models (LLMs) and their ability to synthesize information, the period from 2025 to 2030 will be characterized by “Agentic AI.” This shift represents a move from software that assists to software that acts, fundamentally altering the value proposition of enterprise technology.

1.1 The Rise of Agentic AI and Superagency

The defining trend for 2025 and beyond is the emergence of Agentic AI. Unlike passive chatbots or copilots that await human prompts to generate text or code, agentic systems possess the capability to set goals, formulate multi-step plans, and execute tasks across disparate systems to achieve an objective. This capability allows AI to function not merely as a tool but as a proactive entity within the enterprise, capable of navigating complex workflows with a degree of autonomy previously reserved for human employees.

Industry analysis suggests that we will eventually view AI as a foundational utility, akin to electricity or HTTP—an invisible layer that empowers functionality without requiring proactive, manual intervention. We are moving toward a world where users simply experience a reality made smarter and faster by algorithms, rather than consciously “using” AI tools. This evolution is giving rise to the concept of “Superagency” in the workplace. Superagency describes a future where employees are empowered to unlock exponential productivity not by doing more work themselves, but by directing fleets of AI agents to execute complex objectives.

1.1.1 Operational Mechanics of Agentic Systems

The operational distinction of Agentic AI lies in its autonomy and its ability to interact with the broader digital ecosystem. While a traditional LLM might write an email for a user to send, an Agentic AI system can draft the email, identify the correct recipients based on project context, schedule a follow-up meeting by accessing calendar APIs, and update the CRM system—all from a single high-level objective set by the user.

These systems leverage Application Programming Interfaces (APIs), robotic process automation (RPA) bots, and search engines independently. Crucially, they possess the ability to self-correct. If an initial strategy fails—for instance, if an API call returns an error or a search query yields irrelevant results—the agentic system analyzes the outcome and adjusts its approach in real-time without human hand-holding. This “closed-loop” operational capability is what distinguishes true agency from mere automation.
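The closed loop described above (execute, observe the outcome, adjust) can be sketched in a few lines. This is a minimal illustration, not a production agent framework: the `Agent` and `StepResult` classes and the two example actions are hypothetical names invented for this sketch, and the "adjustment" is simply falling back to the next strategy in an ordered list.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class StepResult:
    ok: bool
    detail: str


@dataclass
class Agent:
    # Ordered (name, action) strategies the agent may try for one goal.
    strategies: list[tuple[str, Callable[[], StepResult]]]
    log: list[str] = field(default_factory=list)

    def run(self) -> bool:
        for name, action in self.strategies:
            result = action()                            # execute
            self.log.append(f"{name}: {result.detail}")  # observe
            if result.ok:
                return True
            # adjust: fall through to the next strategy
        return False


# Hypothetical actions: the primary API call fails, the fallback succeeds.
def call_calendar_api() -> StepResult:
    return StepResult(ok=False, detail="HTTP 503 from calendar API")


def email_invite_fallback() -> StepResult:
    return StepResult(ok=True, detail="invite sent by email instead")


agent = Agent(strategies=[
    ("calendar_api", call_calendar_api),
    ("email_fallback", email_invite_fallback),
])
succeeded = agent.run()
print(succeeded, agent.log)
```

A real agentic system would typically replace the fixed strategy list with a planner that generates the next step from the observed error, but the control flow stays the same.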

This capability is expected to drive a surge in adoption. Deloitte predicts that in 2025 alone, 25% of companies currently utilizing GenAI will launch agentic AI pilots, with adoption rates potentially reaching 50% by 2027. This rapid uptake is driven by the realization that while GenAI improves individual task efficiency, Agentic AI addresses entire workflows, unlocking value at the organizational level.

1.2 Hyperautomation: The Strategic Convergence

Hyperautomation serves as the architectural vehicle for deploying Agentic AI. It is no longer a siloed IT project but a core business strategy that combines AI, machine learning, RPA, and process intelligence to automate as many business and IT processes as possible. The shift toward hyperautomation marks a new phase where these disparate technologies converge to create smarter, faster, and more responsive enterprises.

The scope of hyperautomation is expanding beyond simple repetitive tasks to include complex decision-making processes. By 2025, hyperautomation is projected to be a dominant trend, integrating advanced analytics and low-code/no-code platforms to democratize automation capabilities across the enterprise. This democratization allows “citizen developers”—business users with little to no coding expertise—to build and deploy automation workflows, further accelerating the velocity of digital transformation.

Table 1: Evolution of Automation Technologies (2020–2030)

| Feature | Traditional Automation (RPA) | Generative AI (2023–2024) | Agentic AI & Hyperautomation (2025+) |
|---|---|---|---|
| Primary Trigger | Human-initiated or scheduled | Human prompt | Autonomous goal setting or event-driven |
| Scope | Single, repetitive task | Content creation, summarization | End-to-end process orchestration |
| Adaptability | Rigid, rule-based | Flexible within language parameters | Self-correcting, learns from outcomes |
| Integration | Screen scraping, basic APIs | Text/Code insertion | Deep API integration, Cross-system action |
| Decision Making | Deterministic (If/Then) | Probabilistic (Next token) | Strategic (Multi-step planning) |

The implications of this convergence are profound. In supply chain management, for example, hyperautomation does not just track inventory; it predicts demand using predictive analytics and automatically adjusts orders in real-time to avoid stockouts. In customer support, it evolves beyond basic chatbots to systems that can handle complex inquiries, process refunds, and update account details without human intervention, escalating only the most sensitive issues to human agents.

1.3 Governance and the “Different Horses” Strategy

As AI matures, the “one model fits all” approach is becoming obsolete. Enterprises are recognizing that AI needs “different horses for different courses”. While massive, parameter-heavy Large Language Models are suitable for broad, creative tasks, smaller, specialized models are proving more effective, faster, and significantly more energy-efficient for discrete, domain-specific tasks.

This diversification necessitates robust AI governance platforms. By 2025, a top technology trend will be the deployment of solutions specifically designed to manage the legal, ethical, and operational performance of disparate AI systems. As organizations move from experimental pilots to mature deployments, the complexity of managing a fleet of different AI models—each with its own versioning, bias profile, and performance characteristics—becomes a critical operational challenge.

Currently, only 1% of leaders classify their companies as “mature” on the AI deployment spectrum, meaning that AI is fully integrated into workflows and driving substantial business outcomes. Bridging this gap requires not just technology, but a comprehensive governance framework that ensures AI systems are reliable, explainable, and aligned with business objectives. This includes “Disinformation Security,” a burgeoning field aimed at systematically discerning trust and authenticating content in an era where AI-generated deepfakes and misinformation are rampant.

1.4 Business Use Cases of Agentic AI

The transition to Agentic AI is opening new frontiers for business application. The ability to automate and streamline processes is creating opportunities for businesses to expand their services and reach new customers in ways previously deemed cost-prohibitive.

- Financial Services: Agentic AI is revolutionizing fraud detection and compliance. Agents can autonomously monitor transaction patterns across millions of accounts, flagging anomalies and, crucially, freezing accounts or initiating investigation protocols instantly, without waiting for human review.

- Healthcare: In the medical field, agentic systems are assisting in patient management by autonomously scheduling appointments based on treatment protocols, following up on medication adherence, and even triaging patient inquiries based on symptom severity before a doctor ever sees the chart.

- Retail: Hyperautomation in retail is enabling dynamic pricing strategies where agents analyze competitor pricing, demand signals, and inventory levels to adjust prices in real-time, maximizing margin without sacrificing sales volume.

The overarching theme is a shift from “using AI” to “employing AI.” Organizations are effectively hiring digital workers that require management, clear objective setting, and performance review, just like their human counterparts.

Section 2: The Infrastructure Evolution – The Edge-Cloud Continuum

The monolithic era of centralized cloud computing is evolving into a distributed continuum. While the cloud remains the command center, the actual processing of data is increasingly migrating to the “Edge”—the physical location where data is generated. This shift is necessitated by the laws of physics: as applications demand real-time responsiveness, the latency inherent in transmitting data to a central server becomes a prohibitive bottleneck.

2.1 The Ascendancy of Edge Computing

Edge computing is revolutionizing data processing by minimizing latency and enabling real-time analysis. By 2027, it is estimated that edge computing will account for more than 30% of enterprise IT spending. This redistribution of resources is critical for latency-sensitive applications such as autonomous vehicles, remote healthcare, and real-time industrial robotics.

The synergy between edge computing and 5G networks is a primary accelerator. The deployment of 5G infrastructure supports the high-bandwidth, low-latency requirements of edge devices, creating a feedback loop that drives adoption of both technologies. 5G enables data to be delivered at lightning speeds between devices and edge servers, making the physical distance between the data source and the processing unit negligible in terms of performance impact.

2.1.1 Distributed Cloud Architectures

The distinction between private, public, and hybrid clouds is blurring into “Distributed Cloud” architectures. In this model, public cloud services are distributed to different physical locations (including the edge and on-premises data centers) while the operation, governance, and updates remain the responsibility of the public cloud provider.

This architecture offers improved performance, regulatory compliance, and resilience. By keeping data processing closer to the user or the device, organizations can ensure compliance with data sovereignty laws that require data to remain within specific geographic borders. The number of edge data centers is projected to more than quadruple, growing from under 250 in 2022 to over 1,200 by 2026. This physical expansion of the cloud footprint is a tangible manifestation of the “Distributed Cloud” concept.

2.2 Industry-Specific Cloud Platforms (ICPs)

A significant trend for 2025 is the pivot from general-purpose cloud platforms (horizontal) to industry-specific clouds (vertical). Gartner projects that more than 70% of organizations globally will utilize Industry Cloud Platforms (ICPs) by 2027. This marks a departure from the “one size fits all” approach of the early cloud era.

General-purpose providers like AWS, Azure, and Google Cloud are increasingly tailoring services for sectors such as healthcare, finance, and manufacturing. These specialized clouds offer pre-built tools, compliance certifications, and data models relevant to specific verticals, thereby reducing the friction of adoption. For example, a financial services cloud would come pre-configured for high-frequency trading latency requirements and strictly enforced regulatory data governance, distinguishing it from a generic storage solution.

Table 2: Projected Growth of Industry Cloud Platforms

| Metric | 2020 Value | 2025 Projection | Growth Driver |
|---|---|---|---|
| Market Size | $82.5 Billion | $266.4 Billion | Vertical integration needs, Security compliance |
| Adoption Rate | < 40% | > 70% | Demand for specialized, pre-built tools |
| Key Sectors | Retail, Media | Healthcare, Finance, Mfg. | Regulatory complexity, Data sensitivity |
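To put the market-size row of Table 2 in annual terms, the implied compound annual growth rate can be computed directly. The sketch below applies the standard CAGR formula to the two figures in the table; the helper name `cagr` is chosen for this illustration only.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Table 2 market size: $82.5B (2020) to $266.4B (2025), i.e. five years.
icp_growth = cagr(82.5, 266.4, years=5)
print(f"Implied ICP market CAGR 2020-2025: {icp_growth:.1%}")
```

On these figures the implied rate is roughly 26% per year, which means the market would more than triple over the five-year window.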

This verticalization is driven largely by security and compliance needs. Sectors like fintech, healthcare, and the military face unique security challenges that general-purpose public clouds cannot always address out of the box. Specialized clouds, often developed alongside or in place of new private clouds, offer the bespoke security architectures required by these high-stakes industries.

2.3 Serverless and Containerization

The operational layer of this infrastructure is increasingly serverless. Serverless computing allows developers to build and run applications without managing the underlying infrastructure, charging only for the resources consumed during execution. This model is inherently suited for the microservices architecture prevalent in modern application development.

By eliminating the need for constant server provisioning, serverless computing makes IT structures significantly more cost-effective for applications with fluctuating workloads. Coupled with the scaling of containers (Kubernetes and Docker), serverless computing lays the groundwork for a flexible IT structure that can dynamically adjust to the fluctuating workloads typical of AI and IoT applications. This “elasticity” is crucial for supporting the bursty nature of modern digital workloads, where traffic can spike unexpectedly due to a marketing campaign or a viral event.

2.4 The Convergence of Edge and AI

The intersection of Edge Computing and AI is creating a new paradigm often referred to as “Edge AI.” Instead of sending data to the cloud for inference, AI models are deployed directly on edge devices (cameras, sensors, gateways). This trend is highlighted by the focus on “Right-Sizing AI for the Edge,” which involves optimizing models for the power and processing constraints of edge hardware.

This shift is critical for privacy and bandwidth management. By processing video feeds or sensitive sensor data locally, organizations avoid transmitting vast amounts of raw data over the network, sending only the actionable insights (metadata) to the cloud. This not only reduces bandwidth costs but also enhances privacy by keeping raw personal data (like faces in a video stream) on the local device.
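The "process locally, upload only metadata" pattern can be sketched as follows. This is an illustrative toy: `detect_people` is a hypothetical stand-in for an on-device model (it merely counts a marker byte), and the camera ID and field names are assumptions made for the example.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class FrameInsight:
    camera_id: str
    timestamp: float
    people_count: int


def detect_people(frame: bytes) -> int:
    """Hypothetical stand-in for an on-device model: counts a marker byte."""
    return frame.count(b"P")


def summarize_frame(camera_id: str, timestamp: float, frame: bytes) -> str:
    """Run inference locally and return only compact JSON metadata for upload."""
    insight = FrameInsight(camera_id, timestamp, detect_people(frame))
    return json.dumps(asdict(insight))


# Simulated raw frame of roughly 100 KB; it never leaves the device.
raw_frame = b"P" * 3 + b"\x00" * 100_000
payload = summarize_frame("dock-7", 1700000000.0, raw_frame)
print(len(raw_frame), "bytes captured;", len(payload), "bytes uploaded")
```

Only the small JSON payload crosses the network, which is where both the bandwidth saving and the privacy benefit come from.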

Section 3: The Quantum Leap – Preparing for the Next Computational Era

While AI and Cloud represent the immediate horizon, Quantum Computing represents the strategic long game. The period between 2025 and 2030 will likely be viewed as the “tipping point” where quantum technologies transition from theoretical physics experiments to practical business advantages, albeit in specific, high-value niches.

3.1 The Valuation and Revenue Reality

The quantum market is characterized by a dichotomy between high valuation and modest current revenue. While the global quantum computing market is projected to grow aggressively—reaching over $20 billion by 2030 with a Compound Annual Growth Rate (CAGR) of over 40%—current revenues are comparatively low. For instance, despite billion-dollar valuations, leading pure-play quantum companies are expected to generate revenues in the range of $36 million to $100 million in 2025.

This “bifurcated path” suggests that while broad commercialization may be distant (post-2035), niche applications in finance, materials science, and drug discovery will generate meaningful revenue and strategic advantage by 2030. Investors and strategists must navigate this hype cycle carefully. The low return on investment projected for 2030 (only 6% despite rapid deployment) indicates that quantum computing will likely remain a capital-intensive, specialized sector rather than a mass-market technology in the short to medium term.

3.2 Practical Use Cases: Finance and Logistics

Despite the revenue lag, early adopters are already exploring practical use cases. The financial services sector is leading the charge, leveraging quantum algorithms for complex optimization problems that choke classical computers.

- Credit Scoring & Fraud Detection: Quantum computers can analyze irregular behavior patterns across massive datasets with a dimensionality that classical computers cannot handle.

- Portfolio Optimization: Banks are exploring quantum algorithms to find the optimal risk/reward balance in investment portfolios in milliseconds rather than hours.

- Pricing Models: Enhancing the accuracy of derivatives pricing through Monte Carlo simulations accelerated by quantum processing results in more competitive and accurate pricing.

In logistics, quantum computing is being explored for routing problems of the “Traveling Salesman Problem” type at global scale. Optimizing travel routes and resource allocation for global shipping fleets involves many interacting variables (weather, fuel costs, port congestion, vehicle maintenance), and the number of candidate routes grows factorially with the number of stops, which quickly overwhelms exhaustive classical search. Quantum optimization approaches could help identify more efficient solutions, potentially saving billions in fuel and operational costs.
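To illustrate how quickly the routing search space grows, the sketch below counts the distinct round trips through a set of stops, using the standard result that a symmetric tour through n stops has (n-1)!/2 distinct orderings. It is purely a counting exercise and does not solve any routing problem; the function name is chosen for this example.

```python
import math


def distinct_round_trips(stops: int) -> int:
    """Distinct closed tours through `stops` locations with symmetric distances."""
    if stops < 1:
        raise ValueError("stops must be at least 1")
    if stops < 3:
        return 1
    return math.factorial(stops - 1) // 2


growth = {n: distinct_round_trips(n) for n in (5, 10, 15, 20)}
for n, routes in growth.items():
    print(f"{n} stops -> {routes:,} possible round trips")
```

Five stops give 12 possible round trips and ten stops give 181,440; by twenty stops the count exceeds ten quadrillion, which is why brute-force enumeration is not an option for real fleets.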

3.3 The Encryption Threat: Post-Quantum Cryptography

The most urgent implication of quantum advancement for IT leaders is the threat to encryption. Quantum computers are likely to eventually break current public-key encryption standards (like RSA and ECC) using Shor’s algorithm.

This has given rise to the field of Post-Quantum Cryptography (PQC). Updating encryption protocols is described as “urgent,” with PQC listed as a top technology trend for 2025. The threat is not just in the future; it is present today due to the “Harvest Now, Decrypt Later” strategy employed by state actors and cybercriminals. These bad actors are capturing and storing encrypted traffic now, with the intent of decrypting it later when quantum computers become powerful enough.

Consequently, IT strategies in 2025 must include a roadmap for cryptographic agility—the ability to easily swap out encryption algorithms as standards evolve. Organizations dealing with data that has a long shelf life (e.g., healthcare records, government secrets, intellectual property) are at the highest risk and must prioritize the transition to quantum-resistant algorithms immediately.
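Cryptographic agility is largely a design discipline: protected data records which algorithm was used, and the algorithm is looked up from a registry rather than hard-coded. The sketch below shows that pattern using only the Python standard library. It is an assumption-laden illustration: the algorithm IDs `mac-v1` and `mac-v2` are hypothetical labels, and HMAC-SHA-256 and HMAC-SHA3-256 are stand-ins used to demonstrate swapping, not post-quantum algorithms.

```python
import hashlib
import hmac
from typing import Callable

# Registry of algorithm IDs -> MAC functions. The IDs are hypothetical labels;
# these HMAC variants are stand-ins and are NOT post-quantum algorithms.
ALGORITHMS: dict[str, Callable[[bytes, bytes], bytes]] = {
    "mac-v1": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
    "mac-v2": lambda key, msg: hmac.new(key, msg, hashlib.sha3_256).digest(),
}
CURRENT_ALGORITHM = "mac-v2"


def protect(key: bytes, message: bytes, alg: str = CURRENT_ALGORITHM) -> dict:
    """Tag a message and record which algorithm produced the tag."""
    return {"alg": alg, "msg": message, "tag": ALGORITHMS[alg](key, message)}


def verify(key: bytes, envelope: dict) -> bool:
    """Verify with whichever algorithm the envelope names, so old data stays valid."""
    expected = ALGORITHMS[envelope["alg"]](key, envelope["msg"])
    return hmac.compare_digest(expected, envelope["tag"])


key = b"demo-key"
old_record = protect(key, b"patient-record", alg="mac-v1")  # written before migration
new_record = protect(key, b"patient-record")                # written after migration
print(verify(key, old_record), verify(key, new_record))
```

Because every envelope carries its algorithm ID, adding a quantum-resistant scheme later would mean registering one more entry and changing `CURRENT_ALGORITHM`, while previously protected records remain verifiable during the transition.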

Section 4: The Intelligent Supply Chain & Manufacturing

The convergence of 5G, IoT, and AI is producing a “digital nervous system” for global supply chains and manufacturing floors. The goal is no longer just visibility but predictability and autonomy.

4.1 The Impact of 5G and IoT

5G is the connective tissue of the modern industrial environment. Its high bandwidth and ultra-low latency allow for the widespread deployment of IoT sensors that monitor everything from the location of a shipping container to the vibration of a bearing in a factory turbine. In supply chain management, 5G enhances visibility by enabling precise tracking of goods and materials. This real-time tracking reduces the risk of disruptions and improves inventory management by providing a “single source of truth” for the location and status of assets.

For example, “smart shelves” in warehouses, connected via 5G, can flag when a product is running low and automatically trigger reordering via agentic AI systems. This ensures continuous availability without the capital cost of overstocking, optimizing working capital for the enterprise. The integration of IoT sensors allows for the monitoring of perishable goods’ conditions (temperature, humidity) during transit, reducing spoilage and insurance claims.
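The smart-shelf trigger described above amounts to a reorder-point rule. A minimal sketch follows, assuming a simple "top up to a target level" policy; the class, function, and SKU names are hypothetical, and a real deployment would feed the result to a purchasing system rather than print it.

```python
from dataclasses import dataclass


@dataclass
class ShelfReading:
    sku: str
    units_on_shelf: int


def reorder_quantity(reading: ShelfReading, reorder_point: int, target_level: int) -> int:
    """Units to order: top up to target_level once stock is at or below reorder_point."""
    if reading.units_on_shelf > reorder_point:
        return 0
    return target_level - reading.units_on_shelf


low_stock = ShelfReading(sku="SKU-123", units_on_shelf=8)
healthy_stock = ShelfReading(sku="SKU-456", units_on_shelf=25)

print(reorder_quantity(low_stock, reorder_point=10, target_level=40))      # 32
print(reorder_quantity(healthy_stock, reorder_point=10, target_level=40))  # 0
```

Keeping the reorder point and target level as explicit parameters makes the trade-off between availability and working capital something planners can tune per product.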

4.2 Digital Twins and Prescriptive Analytics

The integration of these data streams facilitates the creation of “Digital Twins”: virtual replicas of physical systems. In manufacturing, digital twins allow operators to simulate production scenarios, predict bottlenecks, and optimize processes in a risk-free virtual environment before implementing changes on the shop floor.

By 2025, the industry will have moved from predictive analytics (what will happen) to prescriptive analytics (what should we do). These tools use historical and real-time data to not only predict disruptions (e.g., a weather event delaying a shipment) but to suggest the optimal response (e.g., rerouting to a specific alternative port).

| Stage | Question Answered | Technology Enabler | Status in 2025 |
|---|---|---|---|
| Descriptive | What happened? | Basic Reporting, Dashboards | Commodity |
| Diagnostic | Why did it happen? | Root Cause Analysis Tools | Standard Practice |
| Predictive | What will happen? | Machine Learning, Digital Twins | Widespread Adoption |
| Prescriptive | What should we do? | AI Agents, Optimization Algos | Emerging Competitive Edge |
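The step from predictive to prescriptive analytics can be made concrete with a small expected-cost comparison: the prediction supplies a delay probability for each option, and the prescription is the option with the lowest expected cost. All names and figures below are invented for illustration and are not drawn from any real shipment data.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    fixed_cost: float         # cost incurred regardless of disruption
    delay_probability: float  # predicted chance of a delay on this option
    delay_cost: float         # additional cost if the delay happens

    @property
    def expected_cost(self) -> float:
        return self.fixed_cost + self.delay_probability * self.delay_cost


def prescribe(options: list[Option]) -> Option:
    """Recommend the option with the lowest expected cost."""
    return min(options, key=lambda o: o.expected_cost)


# Hypothetical scenario: a storm makes a delay at the original port likely.
options = [
    Option("keep original port", fixed_cost=10_000, delay_probability=0.6, delay_cost=50_000),
    Option("reroute to alternative port", fixed_cost=18_000, delay_probability=0.1, delay_cost=50_000),
]
best = prescribe(options)
print(best.name, best.expected_cost)
```

Here rerouting costs more up front but carries a lower expected cost (23,000 versus 40,000), so it is the recommended action. Production systems would weigh many more factors, but the predict-then-choose structure is the same.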

A digital twin network is a virtual replica of the hardware and software comprising a physical network. Data streams from the operating network are continuously incorporated to mirror the latest network conditions. This allows for an infinite number of configurations and traffic profiles to be emulated, providing unprecedented prototyping and self-optimizing capabilities.

4.3 Autonomous Logistics and Private 5G

The combination of 5G and AI is also the key enabler for autonomous vehicles in logistics. While autonomous trucks on public roads face regulatory hurdles, 5G is already revolutionizing controlled environments like warehouses and ports. Automated Mobile Robots (AMRs) and self-driving forklifts leverage the low latency of private 5G networks to navigate complex environments safely and efficiently.

Private 5G networks are becoming the gold standard for industrial connectivity. Unlike Wi-Fi, which can struggle with interference and handovers in dense metal environments like factories, Private 5G offers deterministic reliability and security. It enables “Real-time automation,” where split-second decisions are non-negotiable. For instance, when a computer vision system detects a defect on an assembly line, it must instantly communicate with control systems to remove the faulty product—a process that requires the ultra-low latency that only 5G can provide.

Section 5: The Human-Machine Workforce

The introduction of agentic AI and advanced automation is precipitating a workforce transformation as significant as the industrial revolution. The challenge for 2025 and beyond is not merely “replacing” humans, but orchestrating a collaborative workflow between human intelligence and machine autonomy.

5.1 The Skills Gap and AI Literacy

The rapid pace of technological change has created a widening skills gap. By 2025, “AI fluency” (the ability to use and manage AI tools) is expected to be a critical requirement across industries. Demand for this skill has grown sevenfold in just two years. Furthermore, data literacy, defined as the ability to read, work with, analyze, and communicate with data, is predicted to be the most in-demand skill by 2030; 85% of executives believe it will become as vital in the future as the ability to use a computer is today.

Employers are prioritizing the development of a “future-ready” workforce. This involves reskilling and upskilling employees to move from routine tasks (which will be automated) to higher-value activities such as problem-solving, creative strategy, and empathetic communication—skills that AI struggles to replicate. The “Skill Change Index” suggests that while digital and information-processing skills will be most affected by automation, skills related to assisting and caring are likely to change the least.

5.2 Agent Orchestration and New Organizational Designs

A new paradigm of work is emerging: Agent Orchestration. Workforce planning is shifting from a headcount exercise to the management of a “living, adaptive workforce” composed of both humans and AI agents. In this model, humans act as supervisors and strategists, defining the goals for autonomous agents and intervening only when the system encounters “edge cases” or ethical ambiguities.

This requires a fundamental redesign of workflows. The McKinsey Global Institute notes that by 2030, $2.9 trillion in economic value could be unlocked if organizations redesign workflows around this human-machine partnership rather than simply automating individual tasks. This involves breaking down silos between HR and IT, as workforce planning becomes inextricably linked with technology deployment.

5.3 Hybrid Work and Employee Well-being

The structure of work itself continues to evolve towards hybrid models. Technology is the enabler of this flexibility, with advancements in spatial computing and virtual reality (VR) promising to make remote collaboration more immersive and effective. “Spatial Computing” is taking center stage, with real-time simulations and new use cases reshaping industries from healthcare to entertainment, allowing for seamless workforce collaboration across geographies.

However, this shift brings challenges regarding company culture and employee well-being. A key trend for 2025 is a sharpened focus on mental health and the “employee experience” to prevent burnout in an always-on digital environment. Organizations are leveraging metrics not just to measure performance, but to monitor well-being and engagement, ensuring that the efficiency gains of AI do not come at the cost of human resilience.

Section 6: Security in an AI-First World

As businesses adopt AI to enhance operations, cybercriminals are adopting AI to enhance attacks. This adversarial dynamic renders traditional security models obsolete. The perimeter-based security of the past is being replaced by data-centric, identity-aware models that assume breach.

6.1 Zero Trust 2.0: Continuous AI Validation

The concept of Zero Trust (“Never trust, always verify”) is evolving into “Zero Trust 2.0.” Traditional Zero Trust relied on static rules; if a user had the right password and token, they were granted access. In the age of AI, where credentials can be easily phished or spoofed, this is insufficient.

Zero Trust 2.0 integrates AI and Machine Learning to establish trust in real-time based on behavior. It continuously monitors user and device activity. If a user accesses a database they ostensibly have permission for, but does so at 3:00 AM from an unusual IP address and downloads an unprecedented volume of data, the system automatically revokes access. It moves from “verify once” to “verify continuously.”

This system relies on three core pillars:

- Identity and Access Validation: Monitoring behavioral patterns over time to detect anomalies.

- Network Activity Monitoring: Detecting abnormal traffic patterns, such as unanticipated data transfers.

- Workload Behavior Monitoring: Ensuring applications and workloads do not deviate from their standard operational parameters.
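The continuous-validation idea can be illustrated with a deliberately simple rule-based scorer built around the 3:00 AM example. This is a sketch under stated assumptions: production systems would use learned behavioral models rather than three fixed rules, and every class name, threshold, and baseline value here is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AccessEvent:
    hour: int          # local hour of day, 0-23
    ip_known: bool     # has this user been seen from this IP before?
    megabytes: float   # data volume requested in this session


@dataclass
class Baseline:
    usual_hours: range        # hours the user normally works
    typical_megabytes: float  # typical session download volume


def risk_score(event: AccessEvent, baseline: Baseline) -> int:
    """Count how many behavioral signals deviate from the user's baseline."""
    score = 0
    if event.hour not in baseline.usual_hours:
        score += 1
    if not event.ip_known:
        score += 1
    if event.megabytes > 10 * baseline.typical_megabytes:
        score += 1
    return score


def decide(event: AccessEvent, baseline: Baseline, revoke_threshold: int = 2) -> str:
    """Re-evaluated on every request, not just at login."""
    return "revoke" if risk_score(event, baseline) >= revoke_threshold else "allow"


baseline = Baseline(usual_hours=range(8, 19), typical_megabytes=50.0)
suspicious = AccessEvent(hour=3, ip_known=False, megabytes=5000.0)
routine = AccessEvent(hour=10, ip_known=True, megabytes=20.0)
print(decide(suspicious, baseline), decide(routine, baseline))
```

The key design point is that `decide` runs per request: valid credentials get a session started, but they do not exempt later activity from scrutiny.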

6.2 The AI Threat Landscape and Automated Defense

The threat landscape in 2025 is dominated by AI-augmented attacks. Adversaries use AI to automate social engineering, create convincing deepfakes for phishing, and obfuscate malware code to evade detection.

- Disinformation Security: A newly emerging technology category aimed at systematically discerning trust and identifying deepfakes or manipulated content is becoming essential for brand protection.

- Insider Threats: As AI makes data more portable, the risk of insider threats increases. Zero Trust strategies are expanding to include behavioral understanding to mitigate damage from malicious insiders who already have legitimate access.

To combat AI-speed attacks, defense must also be automated. Security teams are increasingly leveraging AI-powered analytics to detect anomalous behavior and automate threat containment. By 2025, the race to identify software vulnerabilities will intensify, requiring defenders to use AI to find and patch holes in their own software stacks before AI-powered automated scanners utilized by attackers can exploit them. This creates a “machine vs. machine” conflict dynamic where human intervention is often too slow to be effective.

Section 7: Sustainable IT – The Green Imperative

Perhaps the most critical, yet frequently underestimated, trend is the collision between digital expansion and environmental limits. The narrative that digital is “clean” is being challenged by the physical reality of data centers.

7.1 The Energy Crisis of AI

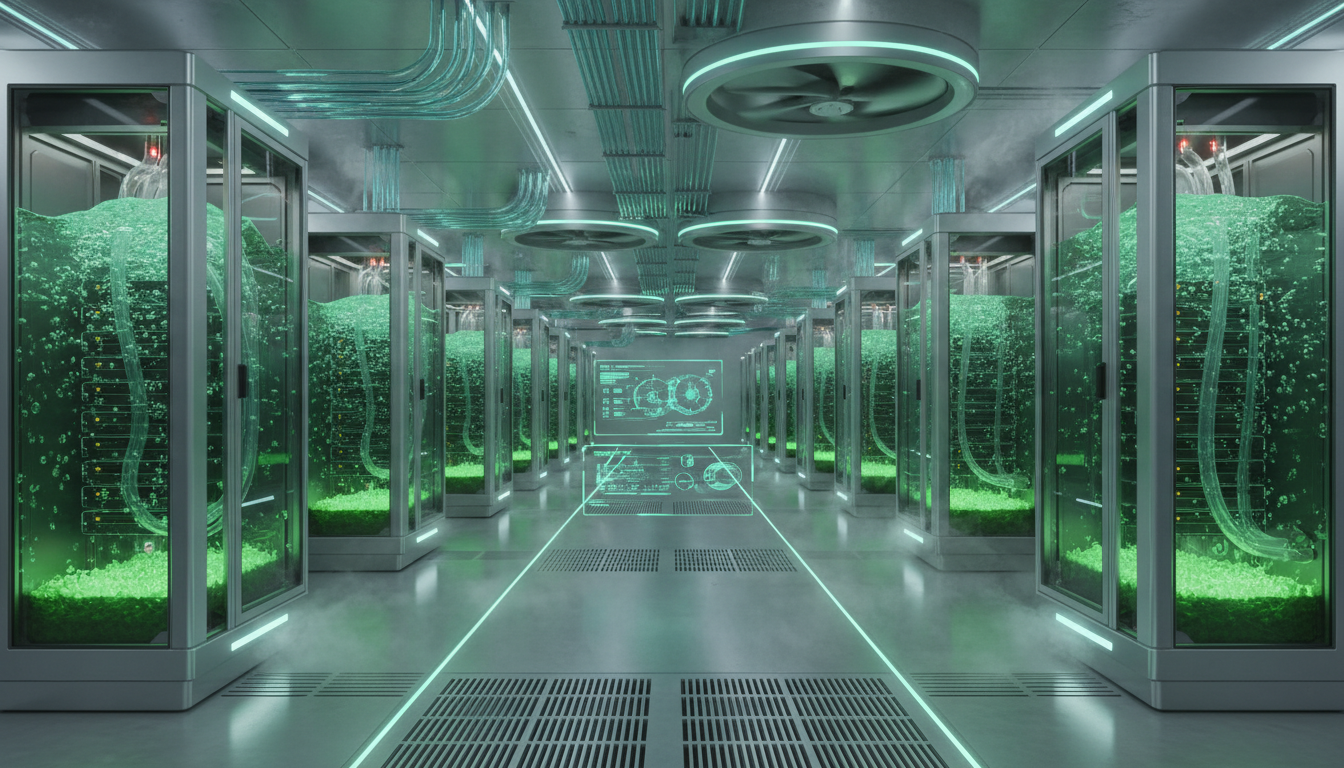

The AI revolution is a massive energy consumer. “Hardware is eating the world,” and the infrastructure required to train and run GenAI models is demanding unprecedented power.

- Power Consumption: Data center electricity consumption is projected to double from roughly 536 TWh in 2025 to over 1,000 TWh by 2030. If efficiency gains do not materialize, this could rise to 1,300 TWh, directly challenging global climate-neutrality ambitions.

- Chip Density: The power density of server racks is skyrocketing. AI-ready GPUs run at significantly higher wattages (up to 1,200 watts per chip) compared to traditional CPUs (150-200 watts), creating immense cooling challenges. The average power density per rack is anticipated to increase from 36 kW in 2023 to 50 kW by 2027.

7.2 Green Data Centers and Circular IT

To mitigate this, the industry is pivoting toward Sustainable Data Centers. This market is expected to grow from $43.6 billion in 2024 to $96.5 billion by 2030. Innovations in this space are critical for the continued growth of the IT sector.

- Cooling Innovation: Liquid immersion cooling and free-air cooling are becoming standard to manage the thermal loads of high-density AI compute clusters, reducing dependency on energy-intensive traditional HVAC systems.

- Waste Heat Recovery: In Europe and other regions, data centers are beginning to direct waste heat to district heating systems or nearby facilities (like swimming pools), turning a waste product into a valuable resource.

Simultaneously, CIOs are adopting “Circular IT” strategies. Instead of the traditional “take-make-dispose” model, companies are focusing on extending device lifespans, buying remanufactured hardware, and ensuring ethical disposal of e-waste through certified IT Asset Disposition (ITAD) services. The global e-waste crisis, exacerbated by the rapid turnover of tech, is forcing this shift, with stricter regulations and “Right to Repair” movements gaining momentum. By 2025, circular economy principles (“Refurbish -> Resell -> Recycle”) will be the new hierarchy for IT asset management.

Section 8: Ethics, Governance, and Regulation

As AI becomes autonomous and pervasive, the “black box” nature of these systems poses significant ethical and legal risks. The year 2025 marks the transition from voluntary ethical guidelines to mandatory regulatory compliance.

8.1 Algorithmic Accountability and Transparency

Regulators are increasingly demanding “Transparency and Explainability.” Businesses must be able to explain how an algorithmic decision was made, especially in high-stakes areas like hiring, lending, or healthcare.

- Bias and Fairness: There is a growing legal obligation to conduct bias audits and prove that AI systems do not reinforce societal inequalities. AI systems can inherit and amplify biases present in their training data, resulting in discriminatory outcomes that expose companies to legal action.

- Liability: Determining who is responsible when an AI agent makes a mistake (e.g., a financial loss caused by an autonomous trading bot) is a complex legal frontier. The year 2025 will see more rigorous frameworks defining the liability of developers versus deployers.
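One simple check that a bias audit might include is a comparison of favourable-outcome rates across groups. The sketch below computes a disparate impact ratio and flags results under 0.8, the "four-fifths" rule of thumb from US employment-selection guidance. That threshold is an assumption brought in for illustration and is not prescribed by this report; the group labels and decision data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of favourable outcomes per group.

    `decisions` is an iterable of (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favoured
    return min(rates.values()) / highest


# Hypothetical audit sample: model decisions for two applicant groups.
sample = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # four-fifths threshold (assumed for illustration)
print(rates, round(ratio, 2), flagged)
```

A ratio below the threshold does not prove discrimination on its own; it marks the decision process for closer review, which is the kind of evidence an audit trail is meant to capture.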

8.2 The Boardroom Agenda

AI governance has graduated from the IT department to the Boardroom. It is viewed as a “mission-critical” operation, where failure to oversee risks can lead to personal liability for directors. Organizations are establishing AI Governance Platforms to systematically manage these risks, ensuring that innovation does not outpace control.

The implementation of ethical AI governance practices is no longer just a moral obligation but a necessity for avoiding financial and reputational risks. Existing legal precedent holds boards and executives liable when they fail to provide sufficient oversight of risks tied to “mission-critical” company operations, which now undeniably include AI systems.

Conclusion: Strategic Roadmap to 2030

The future of IT in business is not merely about adopting the next tool; it is about restructuring the enterprise to survive and thrive in a hyper-connected, autonomous, and resource-constrained world. The convergence of Agentic AI, Distributed Infrastructure, Quantum Readiness, and Sustainability creates a complex matrix of opportunities and threats.

Key Strategic Takeaways for the Enterprise Leader:

- Embrace Agency: Move beyond “using” AI to “employing” AI. Prepare the organizational structure for the integration of digital agents as a new class of workforce.

- Decentralize Infrastructure: Audit current cloud strategies. Identify latency-sensitive workloads and move them to the edge, leveraging 5G and distributed cloud architectures to gain speed and resilience.

- Prioritize Energy Efficiency: Treat energy consumption as a core design constraint. Invest in green software principles and sustainable infrastructure before regulatory mandates force the issue.

- Modernize Security: Abandon perimeter defense. Implement Zero Trust 2.0 with AI-driven behavioral monitoring to counter the AI threat vector.

- Prepare for Quantum: Begin the inventory of encrypted data and assess vulnerability to post-quantum decryption. Identify high-value optimization problems suitable for early quantum adoption.

By 2030, the successful enterprise will be one that has seamlessly integrated these disparate threads—intelligence, infrastructure, and sustainability—into a cohesive, resilient, and ethical digital fabric. The future of IT is not about technology; it is about the business of tomorrow, built on the code of today.