Gemini 3: Technical Analysis of AI’s New System 2 Era

The Gemini 3 Paradigm: A Technical Analysis of Agentic Architectures, Generative Ecosystems, and the Shift to System 2 Intelligence

Executive Summary

The trajectory of artificial intelligence development has historically been marked by discrete epochs: the era of statistical learning, the rise of the transformer, and the proliferation of conversational Large Language Models (LLMs). In November 2025, Google DeepMind signaled the commencement of a new epoch with the release of the Gemini 3 model family. This release represents not merely an iterative increase in parameter counts or training data volume, but a fundamental architectural pivot toward “System 2” thinking—characterized by slow, deliberate reasoning, parallel cognitive pathing, and deep agentic integration.

Gemini 3, alongside its open-weight counterpart Gemma 3 and the accompanying Google Antigravity development platform, constitutes a full-stack effort to transition AI from a passive retrieval interface to an active economic agent capable of long-horizon planning and execution. The introduction of “vibe coding” (a development paradigm prioritizing natural language intent over rigid syntax), coupled with the “Deep Think” reasoning mode, suggests a strategic bid to dominate the software development lifecycle and high-value knowledge work.

This report provides an exhaustive technical analysis of the Gemini 3 ecosystem. It evaluates the bifurcated model architecture, comparing the standard “Pro” variants against the reasoning-intensive “Deep Think” models. It examines the performance differentials against frontier competitors such as OpenAI’s GPT-5.1 and Anthropic’s Claude Sonnet 4.5, utilizing data from benchmarks like SWE-Bench Verified and ARC-AGI-2. Furthermore, it dissects the practical implementation of these models within enterprise environments, detailing the new API parameters for thought signatures, the economic implications of context-tiered pricing, and the emergence of Generative UI (GenUI) as a new frontend standard.

1. The Architectural Evolution of Gemini 3

The Gemini 3 architecture reflects a recognition that the “one-size-fits-all” approach to LLM design—where a single dense model handles everything from chit-chat to theorem proving—is reaching an efficiency plateau. Instead, Google has moved toward a specialized family of models that decouple “reflexive” intelligence from “deliberate” reasoning, supported by a native multimodal foundation that no longer relies on bolting separate vision or audio encoders onto a text-based trunk.

1.1 Native Multimodality and Sensorimotor Integration

Unlike early multimodal models that utilized separate tower architectures to process vision and audio before projecting them into a text embedding space, Gemini 3 is natively multimodal from the pre-training stage. This means the model was trained on interleaved sequences of text, image, audio, and video, allowing it to learn cross-modal dependencies that are invisible to disjointed architectures.

The practical implication of this native integration is “depth and nuance.” For instance, in analyzing a video of a sports match, Gemini 3 does not merely identify objects (e.g., “a ball,” “a player”); it understands the kinematics of movement and the temporal logic of the game, allowing it to suggest training improvements. Similarly, its ability to process audio alongside visual inputs enables complex tasks like deciphering a handwritten recipe while simultaneously listening to a voice note describing modifications to that recipe, synthesizing both into a coherent output.

This capability is quantified in the benchmarks, where Gemini 3 Pro achieves an 87.6% score on Video-MMMU, a test of expert-level video understanding. The architecture supports high-frame-rate understanding, ensuring that rapid actions in video content are not lost to aggressive down-sampling, a common optimization in previous generations. This suggests that the internal representation of video within Gemini 3 uses a form of spatiotemporal tokenization that preserves motion information better than static frame embedding methods.

1.2 System 2 Reasoning: The Deep Think Architecture

The most significant divergence in the Gemini 3 family is the introduction of Gemini 3 Deep Think. While standard LLMs operate on a “System 1” basis—generating tokens sequentially based on immediate probability distributions—Deep Think implements a “System 2” architecture analogous to human deliberation.

1.2.1 Parallel Thinking and MCTS Integration

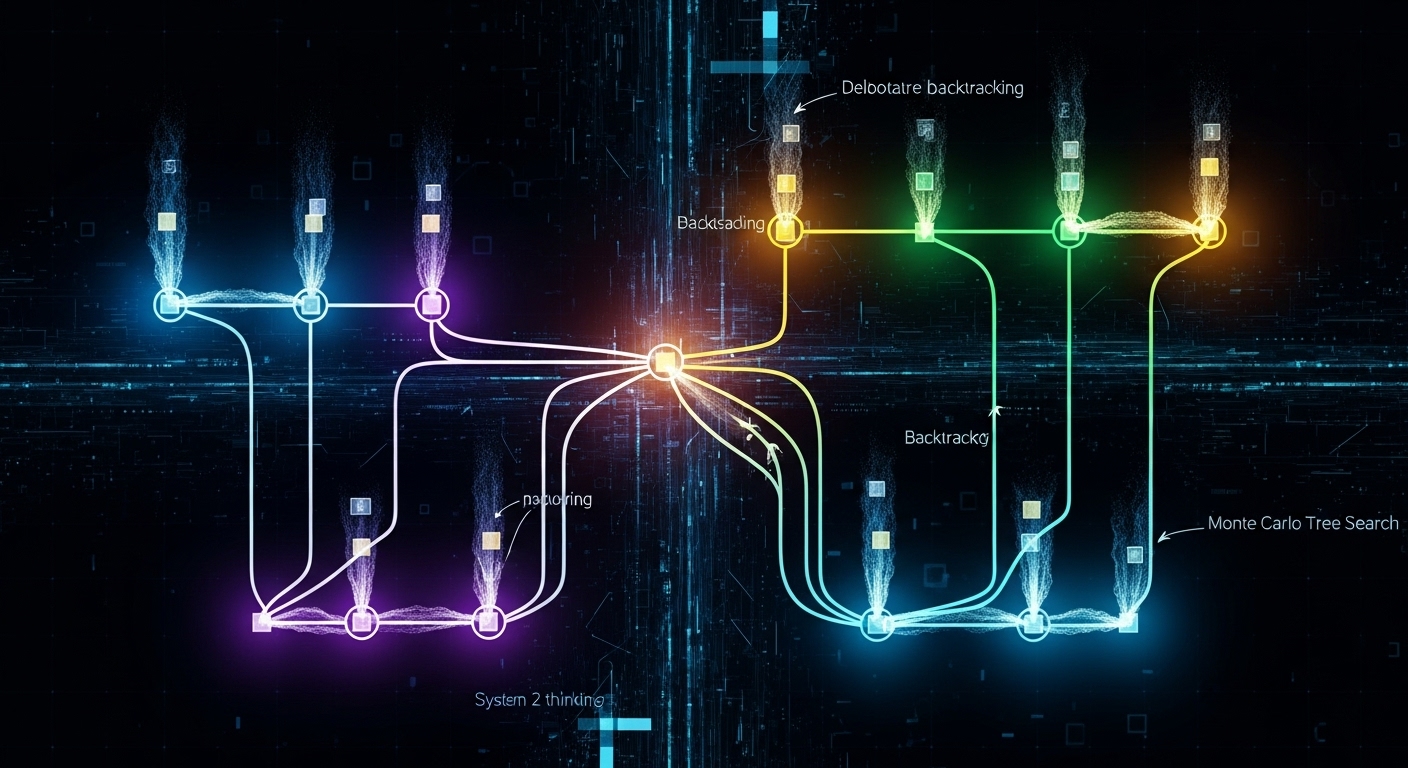

Technical analysis suggests that Deep Think employs a Parallel Thinking architecture. Rather than committing to a single chain of thought, the model explores multiple reasoning trajectories simultaneously. This behavior can be conceptualized as an integration of Monte Carlo Tree Search (MCTS) directly into the transformer’s decoding process.

In this framework, the model generates a “tree” of possible reasoning steps. It evaluates the validity of intermediate states—effectively “checking its work”—and prunes branches that lead to logical contradictions or dead ends. This allows the model to backtrack, a capability sorely missing from standard autoregressive models which are prone to error cascading.
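The explore, evaluate, prune, and backtrack loop described above can be illustrated with a deliberately tiny example. This is a plain depth-first search over a toy arithmetic puzzle, not MCTS and not Deep Think’s actual mechanism (which has not been published in this detail); the puzzle, the `OPS` table, and the function name are all hypothetical.

```python
from typing import Optional

# Toy "reasoning steps": each operation transforms the current state.
OPS = {
    "double": lambda x: x * 2,
    "add3": lambda x: x + 3,
}


def search(state: int, target: int, path: list[str], depth: int) -> Optional[list[str]]:
    """Depth-first search that prunes dead ends and backtracks."""
    if state == target:
        return path                      # a valid chain of steps was found
    if state > target or depth == 0:
        return None                      # prune: this branch cannot succeed
    for name, step in OPS.items():
        found = search(step(state), target, path + [name], depth - 1)
        if found is not None:
            return found
        # Falling through here is the backtrack: try the next alternative.
    return None


plan = search(1, 11, [], depth=6)
print(plan)  # ['double', 'double', 'double', 'add3']
```

The branch 1 → 2 → 4 → 8 → 16 overshoots the target and is discarded, after which the search returns to 8 and tries the other step. A purely left-to-right generator that had committed to the fourth “double” would have no way to recover.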

1.2.2 The Thinking Level Parameter

Access to this capability is controlled via the API’s thinking_level parameter, which replaces the legacy thinking_budget.

- Low: Forces the model to rely on heuristic, fast pathways, minimizing latency and cost. This is suitable for chat and simple instruction following.

- High (Default): Allocates a substantial token budget for internal reasoning. The model may generate thousands of “hidden” tokens—representing the internal monologue and tree search—before outputting the first visible token to the user.

The architectural distinction is so profound that Google essentially treats Deep Think as a separate class of service, currently gating it behind the Google AI Ultra subscription and subjecting it to extended safety testing before general release. This caution likely stems from the “agentic” risks; a model that can plan deeply is also a model that can deceive or circumvent safety guardrails more effectively if not properly aligned.

1.3 Thought Signatures and State Persistence

One of the persistent challenges in building agents with LLMs has been state amnesia. When a model makes a tool call or completes a step in a multi-turn workflow, the internal “activations”—the nuances of why it made that decision—are typically lost, leaving only the text output.

Gemini 3 introduces Thought Signatures to solve this. These are encrypted tokens returned by the API that represent the model’s internal reasoning state at the end of a turn. Developers are required to pass these signatures back to the model in the subsequent request.

- Mechanism: The signature acts as a compressed, secure “save state” of the model’s cognitive process.

- Validation: The API enforces strict validation of these signatures. Missing thought signatures in multi-step function calls will result in a 400 Bad Request error, while omitting them in chat contexts leads to degraded performance.

- Implication: This feature drastically improves the coherence of long-horizon agents. An agent can now “remember” the discarded alternatives or the specific constraints identified ten steps ago, without needing to re-derive them from the transcript history.
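The round trip described in these bullets can be sketched with a local stand-in. Everything here is hypothetical: `toy_model_turn` is not the Gemini API, and the “signature” is merely base64-encoded JSON rather than an encrypted token. The sketch only shows the contract, namely that the client treats the token as opaque and returns it unchanged, and that a strict follow-up step fails without it.

```python
import base64
import json
from typing import Optional


def toy_model_turn(prompt: str, signature: Optional[str] = None,
                   expects_signature: bool = False) -> tuple[str, str]:
    """Hypothetical stand-in for one model turn; returns (reply, new_signature)."""
    if signature is None:
        if expects_signature:
            # Mirrors the strict behaviour described for multi-step function calls.
            raise ValueError("400 Bad Request: missing thought signature")
        notes = []  # no prior reasoning state to restore
    else:
        notes = json.loads(base64.b64decode(signature))
    notes.append(f"reasoning about: {prompt}")
    new_signature = base64.b64encode(json.dumps(notes).encode()).decode()
    return f"ok, {len(notes)} step(s) of reasoning carried", new_signature


reply1, sig1 = toy_model_turn("list the project files")
reply2, sig2 = toy_model_turn("delete the temp files", sig1, expects_signature=True)
print(reply2)  # ok, 2 step(s) of reasoning carried
```

The client never inspects `sig1`; it only stores it and hands it back, which is the property that lets the provider change the internal format without breaking callers.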

2. The Gemma 3 Open-Weight Family

While Gemini 3 represents the closed, proprietary frontier, Google has simultaneously updated its open-weight offerings with Gemma 3. This release is critical for the ecosystem, as it allows developers to deploy distilled versions of the Gemini architecture on local hardware or private clouds.

2.1 Architectural Distillation and Specs

Gemma 3 is not merely a smaller version of Gemini; it features specific architectural optimizations to support long contexts on constrained hardware. The family ranges from 1 billion to 27 billion parameters, with the majority of models supporting a 128k token context window.

| Model Variant | Parameters | Context Window | Vision Encoder |
|---|---|---|---|
| Gemma 3 1B | 1 Billion | 32k Tokens | SigLIP (Tailored) |
| Gemma 3 4B | 4 Billion | 128k Tokens | SigLIP (Tailored) |
| Gemma 3 12B | 12 Billion | 128k Tokens | SigLIP (Tailored) |
| Gemma 3 27B | 27 Billion | 128k Tokens | SigLIP (Tailored) |

2.2 Interleaved Attention Mechanisms

To achieve a 128k context window without every layer paying the full memory cost of global attention, Gemma 3 interleaves two types of attention layers:

- Global Layers: These layers attend to the entire context window. The RoPE (Rotary Positional Embedding) base frequency in these layers is increased from 10,000 to 1,000,000, allowing the model to maintain resolution over vast distances.

- Local Layers: Interspersed between global layers, these attend only to a local sliding window. They retain the standard 10k RoPE frequency.

This hybrid approach reduces the KV-cache size significantly, enabling the 27B model to run on high-end consumer GPUs while processing documents as long as a novel.
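A back-of-the-envelope count shows where the saving comes from. The sketch below counts cached token positions per layer, under assumptions that are not stated above: a 60-layer model, five local layers for every global layer, and a 1,024-token sliding window. All three numbers are illustrative placeholders.

```python
def cached_positions(context_len: int, n_layers: int,
                     local_per_global: int, window: int) -> int:
    """Total token positions held in the KV cache across all layers."""
    n_global = n_layers // (local_per_global + 1)
    n_local = n_layers - n_global
    # Global layers cache the whole context; local layers cache only the window.
    return n_global * context_len + n_local * min(window, context_len)


CONTEXT = 128_000
LAYERS = 60          # assumed layer count, for illustration only

full = LAYERS * CONTEXT  # every layer global
hybrid = cached_positions(CONTEXT, LAYERS, local_per_global=5, window=1024)

print(full)                      # 7680000
print(hybrid)                    # 1331200
print(round(hybrid / full, 3))   # 0.173
```

Under these assumptions the hybrid layout caches roughly a sixth of the positions of an all-global stack, and the share held by local layers stays fixed as the context grows.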

2.3 Adaptive Vision Processing

For multimodal tasks, Gemma 3 employs a specialized SigLIP vision encoder. A key innovation here is the adaptive windowing algorithm. When processing high-resolution images or non-square aspect ratios, the model dynamically segments the image into non-overlapping crops (tiles) of 896×896 pixels. This “windowing” is applied only when necessary to preserve small details (like text in documents) that would otherwise be lost in resizing, addressing a common failure mode in smaller multimodal models.
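The tiling decision can be sketched as follows. This is a simplified heuristic written for illustration, not Gemma 3’s published algorithm: it only reacts to aspect ratio, and the `min_aspect` and `max_crops` thresholds are hypothetical. Each returned box would then be resized to the encoder’s 896×896 input.

```python
import math

TILE = 896  # encoder input resolution mentioned above


def plan_crops(width: int, height: int, max_crops: int = 4,
               min_aspect: float = 1.2) -> list[tuple[int, int, int, int]]:
    """Return non-overlapping (left, top, right, bottom) crop boxes, or [] if none are needed."""
    long_side, short_side = max(width, height), min(width, height)
    if long_side / short_side < min_aspect:
        return []  # near-square image: a single resize is enough
    n = min(max_crops, math.ceil(long_side / short_side))
    boxes = []
    for i in range(n):
        start = i * long_side // n
        end = (i + 1) * long_side // n
        if width >= height:
            boxes.append((start, 0, end, height))   # split a wide image into columns
        else:
            boxes.append((0, start, width, end))    # split a tall image into rows
    return boxes


print(plan_crops(1000, 1000))  # []
print(plan_crops(2688, 896))   # [(0, 0, 896, 896), (896, 0, 1792, 896), (1792, 0, 2688, 896)]
```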

3. Benchmarking and Comparative Performance Analysis

The release of Gemini 3 occurs in a hyper-competitive environment, landing almost simultaneously with OpenAI’s GPT-5.1 and amidst strong performance from Anthropic’s Claude Sonnet 4.5. A granular analysis of benchmarks reveals that while the models are close in general capabilities, Gemini 3 has carved out a distinct advantage in agentic reliability and complex multimodal reasoning.

3.1 Agentic Coding: SWE-Bench and Terminal-Bench

The SWE-Bench Verified benchmark has become the gold standard for evaluating AI software engineers. It tasks models with resolving real GitHub issues, requiring them to navigate a codebase, reproduce bugs, and produce patches that pass the project’s tests.

- Gemini 3 Pro: 76.2%

- GPT-5.1: 76.3%

- Claude Sonnet 4.5: 77.2%

While Gemini 3 Pro is statistically tied with GPT-5.1 and slightly behind Claude, this metric tells only part of the story. The Terminal-Bench 2.0 benchmark, which measures a model’s ability to use a command-line interface to perform tasks (a proxy for “devops” and system administration capabilities), shows a different picture.

- Gemini 3 Pro: 54.2%

- GPT-5.1: 47.6%

This gap suggests that while Claude and GPT-5.1 may be superior at pure code synthesis (writing the function), Gemini 3 is superior at system manipulation: running the build, checking file permissions, and managing the environment. This aligns with Google’s “Antigravity” strategy, positioning Gemini as an agent that does rather than just writes.

3.2 Multimodal Reasoning: The MMMU-Pro Lead

In the domain of multimodal understanding, Gemini 3 Pro establishes a clear dominance. The MMMU-Pro benchmark evaluates expert-level reasoning across disciplines requiring visual input (e.g., diagnosing a medical X-ray, analyzing a stock chart, interpreting a circuit diagram).

| Benchmark | Gemini 3 Pro | GPT-5.1 | Claude Sonnet 4.5 |
|---|---|---|---|
| MMMU-Pro | 81.0% | 76.0% | 68.0% |
| Video-MMMU | 87.6% | 80.4% | N/A |
| ScreenSpot-Pro | 72.7% | 3.5% | N/A |

The disparity in ScreenSpot-Pro (72.7% vs 3.5%) is particularly striking. This benchmark measures screen understanding—the ability to look at a UI screenshot and identify interactable elements. This massive lead explains why Gemini 3 is the engine behind the new “App-to-Code” and “Vibe Coding” features; it literally “sees” user interfaces with a fidelity that competitors currently lack.

3.3 General Intelligence and The “Hard” Problems

For general reasoning, LMArena (formerly Chatbot Arena) remains the most trusted aggregate of human preference. Gemini 3 Pro has achieved an Elo score of 1501, effectively topping the leaderboard.

However, the true test of the “Deep Think” architecture is found in ARC-AGI (Abstraction and Reasoning Corpus). This benchmark consists of visual puzzles that require abstracting a rule from a few examples, a task easy for humans but notoriously difficult for LLMs which rely on pattern matching from training data.

- Gemini 3 Deep Think: 45.1% (ARC-AGI-2)

- Human Baseline: ~98%

- Previous SOTA: ~20-30%

While still far from human performance, a score of 45.1% represents a qualitative leap, validating the hypothesis that search-based inference (MCTS) is necessary to break the “memorization wall” of standard transformers. Similarly, on Humanity’s Last Exam—a test designed to be impossible for current models—Gemini 3 Pro scores 37.5%, and Deep Think pushes this to 41.0%.

3.4 Comparative Summary Table

The following table synthesizes the competitive landscape as of November 2025:

| Feature / Benchmark | Gemini 3 Pro | GPT-5.1 | Claude Sonnet 4.5 |
|---|---|---|---|
| Context Window | 1 Million (Native) | 128k – 200k | 200k |
| Coding (SWE-Bench) | 76.2% | 76.3% | 77.2% |
| Tool Use (Terminal-Bench) | 54.2% | 47.6% | N/A |
| Multimodal (MMMU-Pro) | 81.0% | 76.0% | 68.0% |
| Reasoning (GPQA Diamond) | 91.9% | 88.1% | ~85% |
| Pricing (Input/Output, per 1M tokens) | $2/$12 (Low Context) | $3/$15 (Est.) | $3/$15 |

4. The “Vibe Coding” Era and Google Antigravity

Google has coined the term “Vibe Coding” to describe a new abstraction layer in software development. In this paradigm, the developer’s role shifts from writing syntax to defining intent (“vibes”) via natural language, images, or even loose sketches. The AI handles the implementation details. To support this, Google has launched a dedicated Integrated Development Environment (IDE) called Google Antigravity.

4.1 Google Antigravity: The Agentic IDE

Antigravity is described as an “evolution of the IDE toward an agent-first future”. It is a standalone application available for macOS, Windows, and Linux. While functionally similar to a fork of VS Code (and compatible with VS Code extensions), its architecture is fundamentally different in how it treats the AI model.

In traditional IDEs with AI assistants (like GitHub Copilot), the AI is a plugin that injects text into the editor. In Antigravity, the AI is an autonomous agent with direct privileges to:

- Edit Files: It can create, delete, and modify files across the project directory.

- Execute Terminal Commands: It can run builds, install dependencies (npm install, pip install), and run tests.

- Browse the Web: It utilizes a headless Chrome instance to read documentation or verify frontend changes.

4.2 The “Artifacts” Workflow and Multi-Agent Orchestration

A key innovation in Antigravity is the concept of “Artifacts”. When the agent performs a complex task—such as refactoring a legacy codebase or building a new feature—it generates an Artifact. This is a structured document containing:

- A list of tasks the agent plans to execute.

- Screenshots of the application state (if applicable).

- Recordings of the browser interactions.

- Diffs of the code changes.

This system replaces the overwhelming stream of raw logs with a digestible report, allowing the human developer to review the logic of the changes rather than just the syntax.
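As a rough mental model, an Artifact can be thought of as a small record with one field per item in the list above. The class below is a hypothetical sketch; the field names and the `summary` helper are assumptions, not Antigravity’s actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class Artifact:
    """Hypothetical shape of an agent's review report."""
    tasks: list[str]                                       # planned steps
    screenshots: list[str] = field(default_factory=list)  # paths to app-state captures
    recordings: list[str] = field(default_factory=list)   # paths to browser recordings
    diffs: list[str] = field(default_factory=list)        # unified diffs of code changes

    def summary(self) -> str:
        """One-line digest a reviewer can scan before opening the details."""
        return (f"{len(self.tasks)} task(s), {len(self.diffs)} diff(s), "
                f"{len(self.screenshots)} screenshot(s), {len(self.recordings)} recording(s)")


report = Artifact(
    tasks=["Extract auth logic into a module", "Update call sites"],
    diffs=["--- a/app.py\n+++ b/app.py\n@@ ..."],
)
print(report.summary())  # 2 task(s), 1 diff(s), 0 screenshot(s), 0 recording(s)
```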

Furthermore, Antigravity supports Multi-Agent Orchestration. A developer can spawn parallel agents: one to write the code, another to review it for security vulnerabilities, and a third to update the documentation. The user interface allows for managing these agents as if they were a team of junior developers.
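The fan-out pattern described here can be sketched with `asyncio`. The agents are stubs: `run_agent` stands in for whatever model and tool calls a real agent would make, and the role names are illustrative rather than anything Antigravity defines.

```python
import asyncio


async def run_agent(role: str, task: str) -> str:
    """Stub agent; a real one would call a model and tools here."""
    await asyncio.sleep(0)  # yield control, standing in for I/O-bound work
    return f"[{role}] finished: {task}"


async def orchestrate(feature: str) -> dict[str, str]:
    """Run the three roles concurrently and collect one report per role."""
    jobs = {
        "coder": f"implement {feature}",
        "security-reviewer": f"audit {feature}",
        "doc-writer": f"document {feature}",
    }
    results = await asyncio.gather(*(run_agent(r, t) for r, t in jobs.items()))
    return dict(zip(jobs, results))


reports = asyncio.run(orchestrate("login form"))
for line in reports.values():
    print(line)
```

`asyncio.gather` preserves input order, so each result lines up with its role even though the agents run concurrently.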

4.3 Model Agnosticism and Developer Experience

Surprisingly, Google has opened Antigravity to third-party models. Developers can choose to power their agents with Claude Sonnet 4.5 or GPT-OSS, alongside Gemini 3. This move is likely a strategic play to capture the “IDE market share” even from users who prefer non-Google models, similar to how VS Code supports all languages.

However, early user reports indicate teething issues common to beta software. Users have reported “Setting Up Your Account” loops, issues with authentication via Edge browser, and rapid depletion of free usage credits (approx. 20 minutes of intense agentic work). Despite these friction points, the integration of Chrome DevTools MCP (Model Context Protocol) allows agents to inspect the DOM and debug pages directly, a feature currently unmatched by competitors like Cursor.

5. Generative UI (GenUI): The Death of Static Interfaces

Gemini 3 introduces a capability that may render traditional frontend development obsolete for a large class of applications: Generative UI (GenUI). This technology allows the model to generate interactive, graphical user interfaces on the fly, tailored to the specific context of the user’s query.

5.1 The A2UI Protocol and Architecture

GenUI is not merely generating HTML strings. It is built upon the A2UI (Agent-to-UI) protocol, a JSON-based serialization format developed by Google Labs.

- Intent Analysis: The user asks a question (e.g., “Help me plan a trip to Tokyo”).

- Component Selection: Instead of writing text, the Gemini 3 model selects from a library of abstract widgets (calendars, maps, product carousels).

- Serialization: The model outputs a structured JSON response describing the UI layout and data binding.

- Client-Side Rendering: The client application—using the GenUI SDK for Flutter or React Server Components—deserializes this JSON and renders native widgets.

This approach creates a “high-bandwidth loop.” As the user interacts with the generated UI (e.g., selecting dates on the calendar), these state changes are fed back to the model as context for the next turn.
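The serialize-then-render split can be illustrated with a minimal client. The JSON schema below is invented for this example and is not the A2UI specification; the component names, the `bind` field, and the string “widgets” are all placeholders for what a real SDK would map to native components.

```python
import json

# Hypothetical model output for "Help me plan a trip to Tokyo".
payload = json.loads("""
{
  "layout": "column",
  "components": [
    {"type": "calendar", "id": "dates", "bind": "trip.dates"},
    {"type": "map", "id": "city", "bind": "trip.destination",
     "props": {"center": "Tokyo"}}
  ]
}
""")

# The client owns a fixed widget catalog; the model can only pick from it.
RENDERERS = {
    "calendar": lambda c: f"<Calendar id={c['id']}>",
    "map": lambda c: f"<Map id={c['id']} center={c['props']['center']}>",
}


def render(ui: dict) -> list[str]:
    """Turn the serialized description into widgets, rejecting unknown types."""
    widgets = []
    for component in ui["components"]:
        renderer = RENDERERS.get(component["type"])
        if renderer is None:
            widgets.append(f"<Unsupported type={component['type']}>")
        else:
            widgets.append(renderer(component))
    return widgets


print(render(payload))  # ['<Calendar id=dates>', '<Map id=city center=Tokyo>']

# A user interaction is sent back as context for the next model turn.
event = {"component": "dates", "value": ["2026-04-01", "2026-04-07"]}
```

Because the model emits data rather than executable markup, the client decides what can appear on screen, which is the main safety argument for a catalog-based design over raw HTML generation.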

5.2 SDK Support and Case Studies

Google has released official SDKs to support this paradigm:

- GenUI SDK for Flutter: Allows Flutter apps to render AI-generated UIs dynamically.

- React Server Components (RSC) Support: Facilitates streaming UI components from the server in Next.js applications.

Real-World Examples:

- Mortgage Calculator: In Google Search (AI Mode), asking about loans triggers Gemini 3 to code and render a custom interactive calculator where users can adjust interest rates and see real-time amortization charts.

- Landscape Design: A demo app allows users to upload a photo of their yard. Gemini 3 analyzes the terrain and generates a custom UI form for the user to input their budget and style preferences, which then drives the generation of a 3D landscape plan.

- Physics Simulations: Students asking about the “three-body problem” receive an interactive physics simulation rather than a text explanation.

This shift implies that for many utility applications, the “interface” is no longer a fixed asset built by a frontend team, but an ephemeral artifact generated by the AI at runtime.

6. Implementation Strategy and API Economics

For organizations looking to deploy Gemini 3, navigating the API configuration and pricing models is critical for balancing performance and cost.

6.1 Access Channels and Versioning

- Gemini API (Google AI Studio): Best for prototyping and individual developers. Offers a free tier with rate limits.

- Vertex AI (Google Cloud): Required for enterprise deployments needing SLAs, data residency, and VPC peering.

The primary model identifier at launch is gemini-3-pro-preview. Developers should note that previous models like gemini-2.0-flash-thinking-exp are scheduled for deprecation in December 2025, necessitating a migration strategy.

6.2 Configuring Intelligence: Thinking Levels

Migrating from Gemini 2.5 requires code changes regarding how “thinking” is handled. The thinking_budget parameter is deprecated for Gemini 3 in favor of thinking_level.

```python
# Python example using the google-genai SDK
from google import genai
from google.genai import types

client = genai.Client(api_key="YOUR_API_KEY")

response = client.models.generate_content(
    model="gemini-3-pro-preview",
    contents="Derive the time complexity of this recursive function.",
    config=types.GenerateContentConfig(
        thinking_config=types.ThinkingConfig(
            # HIGH trades latency and tokens for deeper reasoning
            thinking_level=types.ThinkingLevel.HIGH
        )
    ),
)

print(response.text)
```

Setting thinking_level to HIGH is recommended for complex instruction following, coding, and math. It allows the model to engage in deep internal reasoning, which consumes more tokens (and time) but yields significantly higher accuracy. LOW is optimized for latency-sensitive chat applications.

6.3 Handling Thought Signatures

For agentic workflows, handling Thought Signatures is mandatory. In the Gemini 3 API, the model returns an encrypted signature field in the response object.

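```javascript
// Node.js example (simplified sketch: `model`, `prompt`, and `nextPrompt`
// are assumed to be defined elsewhere, and the exact location of the
// signature in the response may differ between SDK versions)
const result = await model.generateContent({
  contents: prompt,
  // Ensure signatures are requested/handled
});

// Keep the signature returned with this turn
const signature = result.response.thoughtSignature;

// In the next turn
const nextResponse = await model.generateContent({
  contents: nextPrompt,
  thoughtSignature: signature, // Pass it back!
});
```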

Failure to pass this signature back results in the model losing its “chain of thought” context. In strict function calling modes, the API will throw a 400 error if the signature is missing.

6.4 The Economics of Context: Pricing Analysis

Google has introduced a bifurcated pricing model based on context length, acknowledging the super-linear cost of processing massive contexts.

| Usage Tier | Input Price (per 1M tokens) | Output Price (per 1M tokens) |
|---|---|---|
| Standard (< 200k context) | $2.00 | $12.00 |
| Long Context (> 200k context) | $4.00 | $18.00 |
| Cache Storage | $4.50 / 1M tokens / hour | N/A |

Cost Analysis:

- Document Analysis: Processing a 150k token document falls into the standard tier. Input cost: $0.30.

- Video Analysis: Processing a 1-hour video (approx. 700k tokens) triggers the higher tier. Input cost: $2.80.

- Implication: For high-volume, long-context applications (like video archives), Context Caching is essential. By paying the storage fee ($4.50 per 1M cached tokens per hour, or about $3.15 per hour for the 700k-token video above), repeated queries against the same video or codebase avoid the repeated input processing cost, reducing the marginal cost of each interaction by an estimated ~95%.
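The arithmetic behind these figures can be reproduced with a small calculator built from the table above. Treating a prompt of exactly 200k tokens as standard tier is an assumption (the table leaves the boundary unspecified), and the function names are illustrative.

```python
STANDARD_LIMIT = 200_000  # tokens; boundary handling is an assumption

# USD per 1M tokens, taken from the pricing table above.
PRICES = {
    "standard": {"input": 2.00, "output": 12.00},
    "long": {"input": 4.00, "output": 18.00},
}
CACHE_STORAGE_PER_M_PER_HOUR = 4.50


def tier(prompt_tokens: int) -> str:
    """Pick the pricing tier from the prompt size."""
    return "standard" if prompt_tokens <= STANDARD_LIMIT else "long"


def input_cost(prompt_tokens: int) -> float:
    """Input cost in USD for a single request."""
    return prompt_tokens * PRICES[tier(prompt_tokens)]["input"] / 1_000_000


def cache_storage_cost(cached_tokens: int, hours: float) -> float:
    """Storage fee in USD for keeping tokens cached for the given time."""
    return cached_tokens * CACHE_STORAGE_PER_M_PER_HOUR * hours / 1_000_000


document_cost = input_cost(150_000)
video_cost = input_cost(700_000)
video_cache_hour = cache_storage_cost(700_000, 1)

print(f"${document_cost:.2f}")     # $0.30
print(f"${video_cost:.2f}")        # $2.80
print(f"${video_cache_hour:.2f}")  # $3.15
```

At these rates, one hour of cache storage for the 700k-token video costs slightly more than a single uncached pass, so caching pays off only once the same content is queried more than once within the hour.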

7. Ecosystem Integration and Consumer Rollout

The Gemini 3 launch is a coordinated rollout across Google’s entire product surface, affecting billions of users.

7.1 Search and Consumer Apps

- Gemini App: The consumer app has been updated with a modern, cleaner interface featuring a “My Stuff” folder for managing generated artifacts. Gemini 3 Pro is available immediately to free users in many regions, while Deep Think is reserved for Google AI Ultra subscribers.

- Search AI Mode: For the first time, a frontier model is integrated into Google Search on “Day One.” Google AI Pro/Ultra subscribers can toggle “Thinking” mode in Search to have Gemini 3 handle complex queries (e.g., “Compare mortgage rates considering current inflation trends”).

- Jio Partnership: In a massive expansion of access, Google partnered with Jio in India. Users on eligible 5G plans receive free access to Gemini 3 Pro for 18 months, instantly bringing the model to potentially hundreds of millions of mobile-first users.

7.2 Enterprise Grounding and RAG

For enterprise customers using Vertex AI, Gemini 3 offers Grounding with Google Search. This feature allows the model to query the live web to verify facts before generating a response. Unlike the consumer version, the enterprise grounding service provides citations without using user data for model training. Additionally, the integration with the Vertex AI RAG Engine allows enterprises to ground the model in their own private documents (PDFs, Intranets) securely.

8. Strategic Implications and Future Outlook

The release of Gemini 3 defines the “Agentic Era” of AI. By solving the context persistence problem (Thought Signatures) and the reasoning depth problem (Deep Think/MCTS), Google has created a substrate capable of performing actual labor rather than just answering questions.

8.1 The Battle for the Developer Workflow

Google Antigravity is a defensive maneuver against the commoditization of the model layer. If developers act as the “kingmakers” of AI, owning the environment where they work (the IDE) is crucial. By vertically integrating the model (Gemini 3), the tool (Antigravity), and the deployment target (Google Cloud), Google is attempting to recreate the “walled garden” success of Android in the generative AI space. The support for third-party models in Antigravity suggests confidence: Google believes its DX (Developer Experience) will win, even if users occasionally swap the engine.

8.2 The Commoditization of Implementation

“Vibe coding” and GenUI threaten to collapse the distinction between “product manager,” “designer,” and “engineer.” If a high-fidelity application can be generated and deployed from a description of its intent, the economic value of rote coding skills will decline, while the value of system architecture and problem definition will rise. Gemini 3 is the tool that facilitates this transition.

8.3 Safety as a Differentiator

The cautious rollout of Deep Think, restricted to safety testers and Ultra subscribers, highlights the dual-use risk of System 2 agents. A model that can plan complex software architectures can also plan complex cyberattacks. Google’s emphasis on “safety evaluations” and “input from safety testers” is not just PR; it is a recognition that as models move from saying to doing, the blast radius of errors grows sharply.

In conclusion, Gemini 3 is a technical tour de force that reasserts Google’s position at the frontier of AI research. Through native multimodality, parallel reasoning architectures, and a reimagined developer ecosystem, it offers a glimpse into a future where AI is not an oracle to be consulted, but a partner that perceives, thinks, and acts alongside us.