DeepSeek AI: Disrupting Global AI with Efficiency & New Models

Introduction: The DeepSeek Shock and the Divergence from Scaling Laws

The trajectory of artificial intelligence development, particularly throughout the early 2020s, was defined by a singular, capital-intensive orthodoxy: the Scaling Laws. This paradigm holds that model performance improves predictably, following a power law, as computational power, dataset size, and parameter count increase. Under this regime, the barrier to entry for frontier-level artificial intelligence became largely financial, creating a moat that ostensibly protected United States incumbents (OpenAI, Google, Microsoft, and Anthropic) from smaller, resource-constrained competitors. This narrative was shattered in early 2025 by the ascent of Hangzhou DeepSeek Artificial Intelligence Basic Technology Research Co., Ltd. (DeepSeek).

DeepSeek’s emergence represents a pivotal inflection point in the global technology landscape, characterized not by brute-force scaling, but by algorithmic efficiency and architectural arbitrage. By reporting performance comparable to OpenAI’s GPT-4o and o1 series at a fraction of the training cost (approximately $5.5 million for the final training run of V3, versus the hundreds of millions invested by Western incumbents), DeepSeek has exposed the inefficiencies inherent in the prevailing hardware-centric approach. The company’s “DeepSeek-R1” reasoning model and its V3 general-purpose model have not only democratized access to high-level machine intelligence but have also precipitated a severe reassessment of capital expenditure strategies across Silicon Valley and Wall Street.

This report provides an exhaustive analysis of DeepSeek as of late 2025. It dissects the company’s unique origin within the quantitative hedge fund High-Flyer, its radical architectural innovations (such as Multi-Head Latent Attention and native sparse attention), the resulting economic shockwaves that triggered a global API price war, and the severe geopolitical backlash manifesting in legislative bans and regulatory investigations across the United States and the European Union. The analysis suggests that DeepSeek is not merely a competitor but a market corrective, shifting the industry’s focus from raw scale to architectural optimization, while simultaneously accelerating the fragmentation of the global AI ecosystem into distinct geopolitical spheres.

Corporate Genesis: The Quantitative Finance Synergy

To fully comprehend DeepSeek’s disruptive capacity, one must look beyond the traditional Silicon Valley venture capital model. DeepSeek did not emerge from the established software engineering lineage of Beijing’s Tech District or the academic labs of Tsinghua University alone; it was born from the high-frequency trading (HFT) sector, specifically the quantitative hedge fund High-Flyer Capital Management.

The High-Flyer Capital Connection

Founded in 2023 by Liang Wenfeng, who also established High-Flyer, DeepSeek is structurally unique. High-Flyer manages approximately RMB 60 billion ($8 billion) in assets, utilizing sophisticated AI-driven algorithms to execute trades based on pattern recognition in financial markets. This lineage provides DeepSeek with a distinctive “quant DNA”: a corporate culture that prioritizes extreme computational efficiency, latency reduction, and the maximization of output per watt of energy consumed. Unlike generative AI researchers who often prioritize creativity and emergent behavior, quantitative traders prioritize speed and cost-effectiveness, traits that Liang Wenfeng successfully transplanted into DeepSeek’s engineering philosophy.

This relationship provides DeepSeek with a formidable strategic advantage: financial sovereignty. The company is wholly funded by High-Flyer and its founder, raising zero dollars in traditional venture capital as of 2025. Liang retains an estimated 84% ownership stake, with the remainder held by employees and High-Flyer associates. This insulates DeepSeek from the short-term pressures of external shareholders or the need to demonstrate immediate profitability. While Western competitors like OpenAI and Anthropic must justify multi-billion dollar valuations to corporate backers like Microsoft and Amazon, DeepSeek operates with the agility of a private research lab, able to sustain “loss-leader” pricing strategies to capture market share without facing quarterly earnings scrutiny.

Infrastructure and Hardware Legacy

A critical, often overlooked factor in DeepSeek’s rise is High-Flyer’s legacy hardware acquisition. Before the imposition of strict US export controls on advanced semiconductors (which from October 2022 covered the Nvidia A100 and H100), High-Flyer had amassed a significant cluster of Nvidia A100 GPUs, reported to be around 10,000 units, originally intended for its trading algorithms. This accumulated compute reserve allowed DeepSeek to train its initial foundation models without being immediately hamstrung by the trade restrictions that crippled other Chinese startups.

However, the necessity of operating on older or export-compliant hardware (A100s and the reduced-bandwidth H800s, versus the H100s used by US rivals) forced innovation. DeepSeek could not rely on the raw throughput of the latest chips; they were compelled to innovate at the software and kernel level to squeeze maximum performance from existing silicon. This constraint-driven innovation led to the development of their FlashMLA kernels, which were later released as open source, and their sparse attention mechanisms, which now allow them to punch significantly above their weight class in terms of inference efficiency.

Valuation and Market Standing

By November 2025, DeepSeek’s impact on the market had translated into substantial implied value, although the company remains private. Liang Wenfeng’s personal net worth was estimated at approximately $4.5 billion, with the company’s valuation crossing $3.4 billion. This valuation, while modest compared to the $157 billion at which OpenAI was valued in its October 2024 funding round, belies the company’s market influence. DeepSeek’s release of the R1 model was cited as a primary catalyst for a massive sell-off in US technology stocks in early 2025, wiping nearly $600 billion from Nvidia’s market cap in a single day as investors questioned the durability of the hardware boom. This “DeepSeek Moment” demonstrated that a relatively small, capital-efficient player could destabilize the financial assumptions underpinning the trillion-dollar generative AI economy.

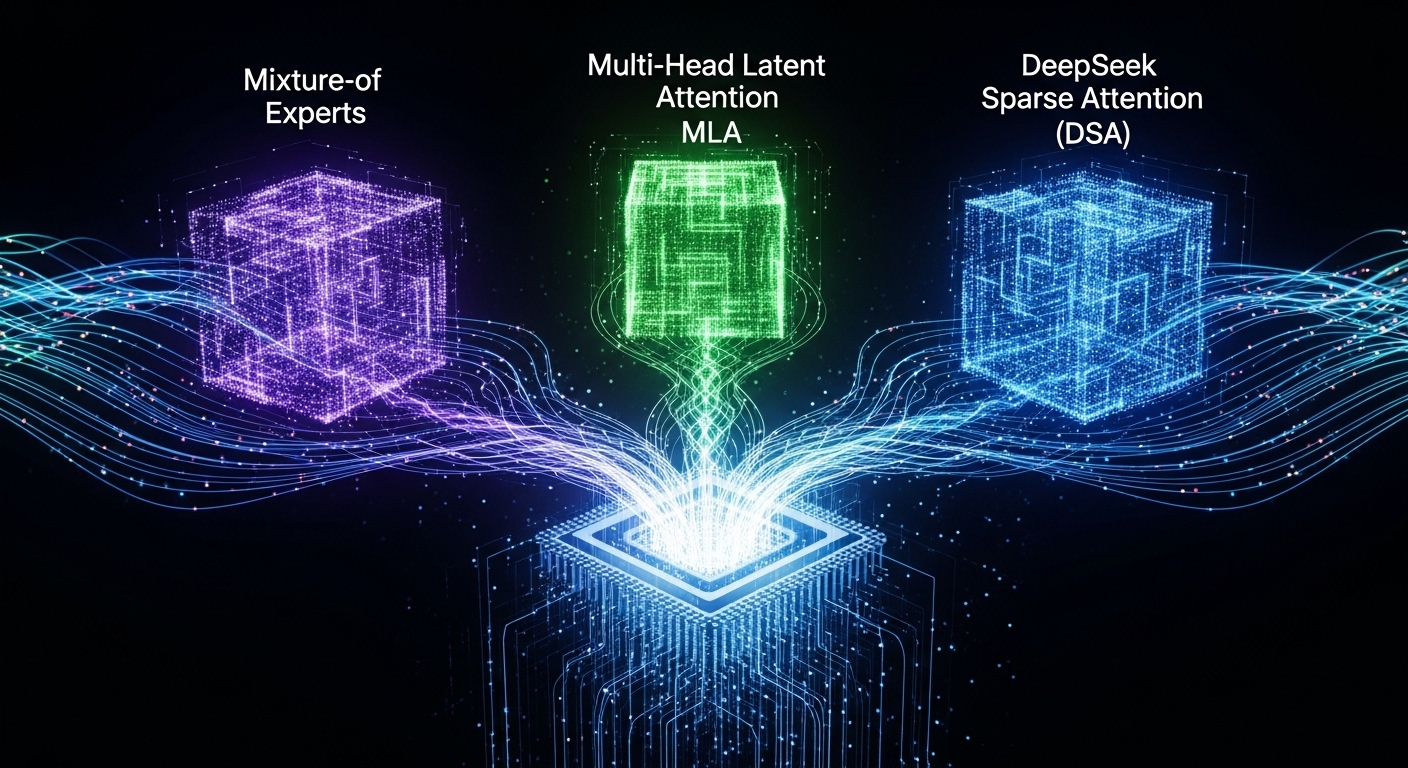

Technical Architecture: The Optimization Triad

DeepSeek’s ability to rival GPT-4o and Claude 3.5 Sonnet is not a function of superior hardware resources, but rather a result of aggressive architectural optimization. The company has systematically dismantled the standard Transformer architecture to remove memory bottlenecks and computational redundancies. The technical core of their advantage rests on three pillars: Mixture-of-Experts (MoE), Multi-Head Latent Attention (MLA), and the recently introduced DeepSeek Sparse Attention (DSA).

Mixture-of-Experts (MoE) and Fine-Grained Routing

Standard “dense” Large Language Models (LLMs) activate every parameter in the neural network for every token generated. This leads to linear scaling of compute costs relative to model size—a financially unsustainable trajectory for massive models. DeepSeek-V2 and V3 utilize a highly optimized Mixture-of-Experts (MoE) architecture that decouples model size from active compute.

- Parameter Efficiency: DeepSeek-V3 boasts a total parameter count of 671 billion, yet it activates only approximately 37 billion parameters per token. This allows the model to store a vast amount of knowledge (capacity) while maintaining the inference speed and cost profile of a much smaller model (efficiency).

- Fine-Grained Segmentation: Unlike traditional MoE models that use a small number of large experts (e.g., Mixtral 8x7B), DeepSeek employs fine-grained expert segmentation. DeepSeek-V2 uses 160 routed experts per MoE layer, of which 6 are activated for any given token; DeepSeek-V3 raises this to 256 routed experts with 8 activated. This granularity allows for highly specialized sub-networks that can handle specific nuances of logic, syntax, or domain knowledge without engaging the entire network.

- Shared Experts: To reduce redundancy among routed experts, which would otherwise each have to relearn the same common knowledge, DeepSeek implements “shared experts” that are always activated. These shared experts handle fundamental linguistic structures and common knowledge, ensuring coherence while the routed experts handle specialized tasks. This hybrid approach eases the stability and specialization problems often faced by sparse models.
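
The routing scheme described in the bullets above can be sketched in a few lines of plain Python. This is a toy illustration, not DeepSeek's implementation: the sizes (16 routed experts, 2 shared, top-4), the softmax gate, and the helper names `make_expert` and `moe_forward` are all assumptions chosen for readability, and each "expert" is a single small linear map rather than a full feed-forward block.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(dim, rng):
    """Toy expert: one dim x dim linear map standing in for a feed-forward block."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(dim)]
    return lambda x: [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def moe_forward(x, router_weights, routed_experts, shared_experts, top_k):
    """Return the layer output and the indices of the routed experts that ran."""
    # Router: one affinity score per routed expert for this token.
    affinities = softmax([sum(w * xi for w, xi in zip(row, x)) for row in router_weights])
    # Keep only the top_k routed experts; all others are skipped entirely.
    ranked = sorted(range(len(routed_experts)), key=lambda i: affinities[i], reverse=True)
    chosen = ranked[:top_k]
    gate_total = sum(affinities[i] for i in chosen)

    output = [0.0] * len(x)
    # Shared experts always run, regardless of the router's decision.
    for expert in shared_experts:
        output = [o + y for o, y in zip(output, expert(x))]
    # Routed experts contribute in proportion to their renormalized gate value.
    for i in chosen:
        gate = affinities[i] / gate_total
        output = [o + gate * y for o, y in zip(output, routed_experts[i](x))]
    return output, chosen

rng = random.Random(0)
DIM, N_ROUTED, N_SHARED, TOP_K = 8, 16, 2, 4  # toy sizes, not DeepSeek's
router_weights = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(N_ROUTED)]
routed_experts = [make_expert(DIM, rng) for _ in range(N_ROUTED)]
shared_experts = [make_expert(DIM, rng) for _ in range(N_SHARED)]
token = [rng.uniform(-1, 1) for _ in range(DIM)]

output, chosen = moe_forward(token, router_weights, routed_experts, shared_experts, TOP_K)
print(f"routed experts used: {sorted(chosen)} of {N_ROUTED}")
```

Only `TOP_K + N_SHARED` of the expert functions are ever called for a token, which is the sense in which total parameter count is decoupled from per-token compute.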

Multi-Head Latent Attention (MLA)

Perhaps the most significant bottleneck in serving LLMs at scale is the Key-Value (KV) cache: the memory required to store the context of the conversation. As context windows expand to 128k tokens and beyond, the KV cache for a batch of concurrent requests can grow to hundreds of gigabytes of VRAM, which limits how many requests a GPU node can serve at once.

DeepSeek introduced Multi-Head Latent Attention (MLA) to solve this memory bottleneck. Unlike traditional Multi-Head Attention (MHA) which stores full key and value matrices, MLA utilizes low-rank joint compression.

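- Mechanism: MLA compresses the Key and Value vectors for each token into a single lower-dimensional latent vector and caches only that latent; the full keys and values are recovered from it by up-projection when attention is computed. It effectively “zips” the attention memory.

- Impact: DeepSeek reports that this compression reduces KV cache memory requirements by approximately 93%, a figure measured for DeepSeek-V2 against the company's earlier dense DeepSeek 67B model.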

- Operational Implication: This massive reduction in memory footprint allows DeepSeek to serve the 671B parameter model on significantly less hardware (e.g., Nvidia H800s or even aggregated consumer cards) with much larger batch sizes.

This is the technical foundation of their ability to offer API pricing at orders of magnitude lower than their competitors.
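
To make the memory argument concrete, the arithmetic can be sketched as follows. The dimensions below (61 layers, 128 heads of width 128, a 512-wide latent plus a 64-wide positional component) are assumptions based on the publicly released DeepSeek-V3 configuration and should be treated as illustrative. The baseline is a hypothetical model caching full multi-head keys and values, so the resulting percentage is not expected to match the roughly 93% quoted above, which depends on the baseline chosen.

```python
def mha_cache_bytes_per_token(n_layers, n_heads, head_dim, bytes_per_value=2):
    """Full multi-head attention caches one key and one value vector per head per layer."""
    return 2 * n_layers * n_heads * head_dim * bytes_per_value

def latent_cache_bytes_per_token(n_layers, latent_dim, rope_dim, bytes_per_value=2):
    """Latent attention caches one compressed vector (plus a small positional part) per layer."""
    return n_layers * (latent_dim + rope_dim) * bytes_per_value

# Assumed, illustrative dimensions (16-bit cache entries).
N_LAYERS, N_HEADS, HEAD_DIM = 61, 128, 128
LATENT_DIM, ROPE_DIM = 512, 64
CONTEXT_TOKENS = 128_000

mha_bytes = mha_cache_bytes_per_token(N_LAYERS, N_HEADS, HEAD_DIM)
latent_bytes = latent_cache_bytes_per_token(N_LAYERS, LATENT_DIM, ROPE_DIM)
reduction = 1 - latent_bytes / mha_bytes

print(f"full MHA cache : {mha_bytes * CONTEXT_TOKENS / 1e9:.1f} GB per 128k-token sequence")
print(f"latent cache   : {latent_bytes * CONTEXT_TOKENS / 1e9:.1f} GB per 128k-token sequence")
print(f"reduction      : {reduction:.1%}")
```

Under these assumptions a single 128k-token sequence drops from roughly 512 GB of cache to roughly 9 GB, which is what allows much larger batch sizes on the same hardware.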

DeepSeek Sparse Attention (DSA) and V3.2

In September 2025, DeepSeek released the experimental V3.2 model, which introduced DeepSeek Sparse Attention (DSA), further optimizing long-context processing. DSA addresses the computational cost of attention, which typically scales quadratically with sequence length.

- Lightning Indexer: DSA employs a “Lightning Indexer,” a lightweight scanning mechanism that rapidly scores preceding tokens using FP8 precision to determine their relevance to the current query.

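- Top-K Selection: Based on this scoring, the model selects only the top-K (e.g., 2048) most relevant key-value pairs for the main attention computation. This means the model effectively “ignores” irrelevant parts of the context window during the heavy compute phase, so the cost of the main attention grows roughly linearly with context length (each query attends to at most K tokens) rather than quadratically. The indexer itself still scores every preceding token, but it is lightweight enough that long contexts become far cheaper overall.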
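
The two-stage idea in the bullets above (cheap scoring, then full attention over a short list) can be sketched as below. This is a simplified illustration under stated assumptions: the indexer here is a plain dot product over small hypothetical "index" vectors, there is no FP8 arithmetic, and the sizes are toy values. It shows the selection pattern, not DeepSeek's kernel.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sparse_attention(query, keys, values, index_query, index_keys, top_k):
    """Attend to only the top_k tokens ranked by a cheap indexer score."""
    # Stage 1 (indexer): a cheap relevance score for every preceding token.
    relevance = [dot(index_query, ik) for ik in index_keys]
    k = min(top_k, len(keys))
    selected = sorted(range(len(keys)), key=lambda i: relevance[i], reverse=True)[:k]

    # Stage 2: ordinary softmax attention, restricted to the selected tokens.
    scale = 1.0 / math.sqrt(len(query))
    logits = [dot(query, keys[i]) * scale for i in selected]
    peak = max(logits)
    weights = [math.exp(l - peak) for l in logits]
    total = sum(weights)
    output = [0.0] * len(values[0])
    for w, i in zip(weights, selected):
        output = [o + (w / total) * v for o, v in zip(output, values[i])]
    return output, sorted(selected)

rng = random.Random(1)
CONTEXT, DIM, INDEX_DIM, TOP_K = 64, 16, 4, 8  # toy sizes

def rand_vec(n):
    return [rng.uniform(-1, 1) for _ in range(n)]

keys = [rand_vec(DIM) for _ in range(CONTEXT)]
values = [rand_vec(DIM) for _ in range(CONTEXT)]
index_keys = [rand_vec(INDEX_DIM) for _ in range(CONTEXT)]

output, selected = sparse_attention(rand_vec(DIM), keys, values, rand_vec(INDEX_DIM), index_keys, TOP_K)
print(f"attended to {len(selected)} of {CONTEXT} tokens: {selected}")
```

The expensive stage touches `TOP_K` tokens regardless of `CONTEXT`; only the low-dimensional indexer pass scales with the full sequence.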

Kernel-Level Innovations: FlashMLA

DeepSeek’s optimization extends down to the bare metal. The company released “FlashMLA,” a library of optimized CUDA kernels designed specifically for their architecture on Nvidia Hopper GPUs.

- Performance: FlashMLA achieves memory bandwidth utilization of up to 3000 GB/s on H800 SXM5 GPUs, approaching the theoretical hardware limit.

- BF16 Optimization: The kernels are optimized for bfloat16 (BF16) matrix multiplication, reaching up to 580 TFLOPS in compute-bound configurations through efficient instruction scheduling and memory access patterns. This software-hardware co-design allows DeepSeek to extract far more of the value from their restricted hardware supply.

| Feature | DeepSeek-V3/R1 | OpenAI GPT-4o | Meta Llama 3.1 405B |
|---|---|---|---|
| Architecture | Mixture-of-Experts (MoE) | Undisclosed (presumed MoE) | Dense |
| Total Parameters | 671 Billion | Undisclosed (~1.8 Trillion est.) | 405 Billion |
| Active Parameters | ~37 Billion | Unknown | 405 Billion |
| Attention Mechanism | MLA (Low-Rank Compression) | MHA / GQA (presumed) | Grouped-Query Attention |
| Context Window | 128k Tokens | 128k Tokens | 128k Tokens |
| KV Cache Size | ~93% Reduction (reported) | Standard | Standard |
| Training Cost | ~$5.5 Million | ~$100 Million+ | ~$100 Million+ |
| License | MIT (Open Weights) | Proprietary | Open Weights |

The Reasoning Revolution: DeepSeek-R1

In January 2025, DeepSeek fundamentally altered the competitive landscape with the release of DeepSeek-R1, a model designed specifically for complex reasoning, coding, and mathematical problem-solving. This model utilizes a “Chain of Thought” (CoT) processing method comparable to OpenAI’s o1 series, but with a distinct training methodology that challenges Western dominance in reinforcement learning.

Reinforcement Learning (RL) Without Supervised Fine-Tuning

The most groundbreaking aspect of the R1 development was the “DeepSeek-R1-Zero” experiment. DeepSeek researchers demonstrated that advanced reasoning capabilities could emerge purely through large-scale Reinforcement Learning (RL) without the traditional preliminary step of Supervised Fine-Tuning (SFT) using thousands of human-labeled examples.

- Emergent Behavior: By providing the model with a rule-based reward system (e.g., “Does this code compile and pass tests?” or “Is this math answer correct?”), the model essentially taught itself to “think.” It learned to backtrack, verify its own steps, and generate long chains of thought to solve complex problems through trial and error.

- The “Aha” Moment: The technical report describes moments where the model spontaneously learned to re-evaluate its strategy when stuck, a behavior previously thought to require explicit human instruction.

- Refinement: While R1-Zero was powerful, it suffered from readability issues and “language mixing” (switching between languages mid-thought). The final R1 model incorporated a small amount of “cold start” data to stabilize these behaviors while retaining the reasoning power of the pure RL approach.
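
A rule-based reward of the kind described above can be sketched as two simple checks: one for whether the final answer is correct, and one for whether the response keeps its reasoning inside the expected tags. The tag format, the `\boxed{}` answer convention, the equal weighting, and the function names are assumptions made for illustration; the source only states that rewards were rule-based rather than learned.

```python
import re

# Assumed format: reasoning inside <think>...</think>, followed by a visible answer.
FORMAT_PATTERN = re.compile(r"<think>.+?</think>\s*\S.*", re.DOTALL)
# Assumed answer convention: the final answer appears as \boxed{...}.
BOXED_PATTERN = re.compile(r"\\boxed\{([^{}]*)\}")

def format_reward(completion):
    """1.0 if the completion follows the think-then-answer layout, else 0.0."""
    return 1.0 if FORMAT_PATTERN.fullmatch(completion.strip()) else 0.0

def accuracy_reward(completion, reference_answer):
    """1.0 if the last boxed answer matches the reference exactly, else 0.0."""
    answers = BOXED_PATTERN.findall(completion)
    if not answers:
        return 0.0
    return 1.0 if answers[-1].strip() == reference_answer.strip() else 0.0

def total_reward(completion, reference_answer):
    # Equal weighting is an arbitrary choice for this sketch.
    return accuracy_reward(completion, reference_answer) + format_reward(completion)

good = r"<think>2 + 2 = 4. Check: 4 - 2 = 2.</think> The answer is \boxed{4}."
no_reasoning = r"The answer is \boxed{4}."
wrong = r"<think>Probably five.</think> The answer is \boxed{5}."

for sample in (good, no_reasoning, wrong):
    print(total_reward(sample, "4"))
```

Because both checks are deterministic string rules, no human labeler or learned reward model is needed in the loop, which is what makes this kind of signal cheap to apply at scale.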

Distillation and Democratization

DeepSeek did not stop at releasing the massive 671B parameter R1 model. They employed a technique called “model distillation” to transfer the reasoning patterns of the large model into smaller, more efficient models, specifically open-weight base models from the Llama and Qwen families, by fine-tuning them on reasoning traces generated by R1.

- Edge Intelligence: DeepSeek released distilled versions ranging from 1.5B to 70B parameters. These smaller models, such as the DeepSeek-R1-Distill-Qwen-32B, demonstrated the ability to outperform massive models like OpenAI’s o1-mini on benchmark tasks.

- Impact: This effectively democratized “reasoning” capabilities, allowing developers to run near-frontier-level logic models on consumer hardware (e.g., high-end laptops or gaming PCs) via local inference tools like Ollama, completely bypassing the need for cloud APIs.

Comparative Performance and Benchmarking

The performance of DeepSeek models has been validated not just by internal marketing but by independent benchmarks and widespread developer adoption.

Quantitative Benchmarks

In standardized testing, DeepSeek-V3 and R1 consistently punch above their weight class, particularly in “hard” sciences—mathematics and coding—where objective verification is possible.

- Mathematics: On the MATH-500 benchmark, DeepSeek-R1 achieved a score of 97.3%, performing on par with OpenAI’s o1 and significantly outperforming traditional dense models. On the AIME 2024 (American Invitational Mathematics Examination), the distilled 32B model scored 72.6%, a remarkable feat for a model of its size.

- Coding: DeepSeek-Coder-V2 has been widely cited as the first open-source model to arguably rival GPT-4 Turbo in coding tasks. DeepSeek-R1 later reached a Codeforces rating of 2029, placing it in the top percentile of human participants.

- General Reasoning: While GPT-4o maintains a slight edge in broad, creative writing and nuance, and Gemini 2.0 leads in multimodal consistency (video/image), DeepSeek scores within a narrow margin of both on technical reasoning benchmarks.

The “Language Mixing” Phenomenon

One unique characteristic observed in DeepSeek models is “language mixing.” Due to its training data being heavily sourced from both English and Chinese internet corpora, the model’s internal “Chain of Thought” often switches between English and Chinese, even when the user query is in English. While the final output is usually correctly localized, this peek into the model’s “subconscious” highlights its dual-cultural origins and has been a point of fascination for researchers.

Economic Disruption: The API Price War

DeepSeek’s most immediate impact on the global AI market was economic. The combination of the architectural efficiencies described above (MoE, MLA, FlashMLA) allowed DeepSeek to undercut the market pricing of Large Language Models to a degree that can be described as predatory.

The Race to the Bottom

DeepSeek’s API pricing strategy is aggressive. By charging approximately $0.028 per million input tokens (cache hit) and $0.28 for cache misses—prices orders of magnitude lower than OpenAI’s GPT-4o or Anthropic’s Claude 3.5—DeepSeek triggered a price war.

- Domestic Reaction: In China, the reaction was instantaneous. Tech giants Alibaba (Qwen), Baidu (Ernie), and ByteDance were forced to slash their API prices, in some cases by 97% or offering free tiers, to prevent a mass exodus of developers to the DeepSeek ecosystem.

- Global Pressure: While US hyperscalers did not immediately drop prices to match DeepSeek’s floor, the availability of a high-performance, ultra-cheap model pressured the margins of the entire “AI Wrapper” economy. Startups whose primary business model involved reselling GPT-4 capabilities with a thin UI layer found their value proposition undercut by DeepSeek’s direct-to-developer economics.
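
The effect of the cache-hit discount on a real workload can be worked through with the two input prices quoted above. The workload itself (40 prompts of 20,000 input tokens each, with 75% of tokens served from cache) is a hypothetical example, and output-token charges are left out because the text does not quote them.

```python
# Input prices quoted in the text, in USD per million tokens.
PRICE_CACHE_HIT = 0.028
PRICE_CACHE_MISS = 0.28

def input_cost_usd(total_input_tokens, cache_hit_ratio):
    """Input-side cost only; output tokens are billed separately and not modeled here."""
    hit_tokens = total_input_tokens * cache_hit_ratio
    miss_tokens = total_input_tokens - hit_tokens
    return (hit_tokens * PRICE_CACHE_HIT + miss_tokens * PRICE_CACHE_MISS) / 1_000_000

# Hypothetical agentic loop: 40 prompts that each resend a 20,000-token context.
total_tokens = 40 * 20_000
cost_with_cache = input_cost_usd(total_tokens, cache_hit_ratio=0.75)
cost_without_cache = input_cost_usd(total_tokens, cache_hit_ratio=0.0)

print(f"input tokens       : {total_tokens:,}")
print(f"cost, 75% cached   : ${cost_with_cache:.4f}")
print(f"cost, nothing cached: ${cost_without_cache:.4f}")
```

Agentic loops tend to resend a largely unchanged context on every step, so the cache-hit price is the one that dominates in practice.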

Disruption of the CapEx Narrative

The “DeepSeek Shock” reverberated through Wall Street by challenging the capital expenditure (CapEx) narratives of the “Mag 7” tech companies.

- Hardware Doubt: DeepSeek proved that state-of-the-art intelligence could be trained for ~$5.5 million, contrasting sharply with the $100 million+ price tags of GPT-4 or Gemini Ultra. This cast doubt on the necessity of the massive, multi-billion dollar data center build-outs planned by Microsoft and Meta.

- Nvidia Volatility: Consequently, Nvidia’s stock experienced significant volatility in early 2025. Investors feared that if software efficiency (via MoE and MLA) outpaced hardware demand, the insatiable appetite for H100/Blackwell clusters might plateau sooner than expected. DeepSeek effectively signaled that the future of AI might be “compute-optimal” rather than “compute-maximal.”
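
The headline training figure can be reproduced from the numbers in DeepSeek's V3 technical report, which gives about 2.788 million H800 GPU-hours and assumes a rental price of $2 per GPU-hour. The 2,048-GPU cluster size used for the wall-clock estimate is also taken from that report. The $100 million comparison point is the round figure used in this document, not a disclosed cost. Note that the report's figure covers the final training run only and excludes prior research and ablation experiments.

```python
GPU_HOURS = 2_788_000               # H800 GPU-hours reported for the full V3 training run
RENTAL_USD_PER_GPU_HOUR = 2.00      # rental price assumed in the report
CLUSTER_GPUS = 2_048                # reported cluster size
COMPARISON_COST_USD = 100_000_000   # round comparison figure used in this document

training_cost = GPU_HOURS * RENTAL_USD_PER_GPU_HOUR
share_of_comparison = training_cost / COMPARISON_COST_USD
wall_clock_days = GPU_HOURS / CLUSTER_GPUS / 24

print(f"training cost   : ${training_cost:,.0f}")
print(f"share of $100M  : {share_of_comparison:.1%}")
print(f"wall-clock time : about {wall_clock_days:.0f} days on {CLUSTER_GPUS:,} GPUs")
```

The result is sensitive to the assumed rental rate: it is a marginal compute cost, not the cost of owning the cluster or employing the team.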

Developer Ecosystem and Integration

Beyond the models themselves, DeepSeek has successfully cultivated a robust developer ecosystem, effectively becoming the default “engine” for open-source AI development.

Tooling Integration

DeepSeek has been rapidly integrated into the most popular AI coding tools.

- Aider and Cursor: Tools like Aider (a command-line AI pair programmer) report that DeepSeek-V3 is now writing a significant portion of code in open-source contributions, rivaling Anthropic’s Claude 3.5 Sonnet. Developers prefer DeepSeek for its cost-effectiveness in “agentic” loops, where a coding agent might need to run dozens of prompts to fix a single bug—a process that is cost-prohibitive with GPT-4o but negligible with DeepSeek.

- Local Deployment: The availability of quantized and distilled versions of DeepSeek R1 has made it a favorite for local deployment via Ollama.

This is particularly critical for privacy-conscious developers who cannot send proprietary code to a cloud API.
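
As a rough sketch of what local deployment looks like in practice, the snippet below builds a request for Ollama's local HTTP API using only the Python standard library. It assumes an Ollama server is running on its default port and that a distilled model has already been pulled; the model tag `deepseek-r1:32b` and the helper names are assumptions for illustration.

```python
import json
import urllib.request

# Ollama's default local endpoint; nothing leaves the machine.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_request(prompt, model="deepseek-r1:32b"):
    """Build (but do not send) a non-streaming generation request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(prompt, model="deepseek-r1:32b"):
    """Send the request to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as response:
        return json.loads(response.read())["response"]

request = build_request("Explain binary search in two sentences.")
print(request.full_url, json.loads(request.data)["model"])

# With a local Ollama server running and the model pulled:
# print(generate("Explain binary search in two sentences."))
```

Because the endpoint is on localhost, proprietary code included in the prompt never reaches a third-party API.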

Hardware Optimization on CPU

DeepSeek is not limited to GPUs. Collaborations and open-source optimizations have enabled DeepSeek models to run efficiently on CPUs. The SGLang project, for instance, supports native CPU backends on Intel Xeon processors with AMX (Advanced Matrix Extensions), achieving up to 14x speedups for inference. This further lowers the barrier to entry, allowing enterprise servers without dedicated GPUs to run reasoning agents.

Cybersecurity Posture and Vulnerabilities

Despite its algorithmic prowess, DeepSeek’s maturity as an enterprise software provider has been questioned, particularly regarding security.

The January 2025 Database Exposure

In late January 2025, DeepSeek suffered a serious data exposure. A ClickHouse database belonging to the company was left publicly accessible without authentication, exposing over 1 million lines of log streams, including user chat histories and API keys.

- Mechanism: Security researchers at Wiz identified that the database was publicly accessible, granting “full control over database operations.”

- Impact: The exposure, which coincided with a DDoS attack and supply chain malware injection, severely damaged the company’s reputation for reliability. It highlighted a critical disparity: while DeepSeek’s models are world-class, their infrastructure security lagged behind the hardened standards of AWS or Azure.

Jailbreaking and Safety

Independent analyses have found that DeepSeek models are easier to “jailbreak” (bypass safety filters) than their Western counterparts. Research by KELA demonstrated that the models could be manipulated to generate malicious code or phishing templates more readily than GPT-4. This lack of robust safety alignment, while appealing to some researchers desiring “uncensored” models, poses significant liability risks for enterprise deployment.

Geopolitical Risk, Regulation, and Sovereignty

DeepSeek exists at the volatile intersection of high-performance technology and US-China geopolitical rivalry. Its rapid adoption has triggered a swift and severe regulatory immune response from Western governments.

The China Mobile Nexus and Data Privacy

In February 2025, a report by Feroot Security linked DeepSeek’s infrastructure to China Mobile, a state-owned telecommunications company banned from operating in the US due to national security concerns.

- The Evidence: Researchers found heavily obfuscated code in the DeepSeek login process that appeared to send user telemetry and account data to China Mobile servers.

- Implication: While DeepSeek’s privacy policy acknowledges data storage in the PRC, the direct conduit to a state-owned entity linked to the Chinese military raised immediate red flags in Washington. This contradicted the company’s attempts to position itself as a neutral, open-source player.

US Legislative and Executive Action

The United States government has moved to quarantine the US federal ecosystem from DeepSeek’s influence.

- H.R. 1121: Introduced by Representatives Gottheimer and LaHood in February 2025, the “No DeepSeek on Government Devices Act” would, if enacted, prohibit the use of DeepSeek by US executive agencies.

- Entity List Expansion: As of late 2025, the US Department of Commerce is aggressively expanding the Entity List to include Chinese AI entities. DeepSeek’s ability to bypass hardware sanctions via software efficiency makes it a primary target for future controls, potentially cutting off its access to cloud compute providers or app stores.

- State Bans: Texas and other states have proactively banned the application on state networks, citing the risk of critical infrastructure infiltration.

European Union Compliance and Fragmentation

In Europe, DeepSeek faces a different set of hurdles centered on the EU AI Act and GDPR.

- Italy Ban: The Italian Data Protection Authority (Garante) banned DeepSeek in early 2025, citing GDPR violations regarding data processing transparency.

- COMPL-AI Findings: Technical evaluations under the new EU AI Act framework (COMPL-AI) revealed a mixed compliance profile. While DeepSeek models excelled in toxicity prevention, they ranked lowest in the leaderboard for cybersecurity robustness and bias mitigation, showing high susceptibility to “goal hijacking”.

- Investigations: Investigations are ongoing in Ireland and Greece, and outside the EU in South Korea, regarding the company’s lack of local representation and data handling practices.

Future Outlook and Conclusion

As 2025 draws to a close, DeepSeek stands as the most disruptive force in artificial intelligence. Its roadmap includes the release of “DeepSeek-XL,” a 70B+ parameter foundation model designed for enterprise on-premise use, and the continued refinement of its sparse attention kernels.

The Bifurcation of the AI Stack

DeepSeek has accelerated the bifurcation of the global AI ecosystem.

- The Western Stack: Characterized by massive scale, proprietary closed-source models (OpenAI, Anthropic), high capital expenditure, strict safety alignment, and rigorous adherence to US/EU compliance standards.

- The Open/Eastern Stack: Characterized by DeepSeek and its derivatives—prioritizing architectural efficiency, open weights, aggressive pricing, and run-anywhere flexibility (including consumer hardware).

Conclusion

DeepSeek has proven that the “moat” of capital-intensive AI development is more permeable than previously believed. By substituting capital with code—leveraging MoE, MLA, and RL to achieve frontier performance at 5% of the cost—DeepSeek has fundamentally altered the economics of intelligence. However, its origins and operational nexus within China create an insurmountable trust barrier for Western governments and regulated enterprises. The future suggests a scenario where DeepSeek dominates the open-source research community and cost-sensitive markets in the Global South and Asia, while Western enterprises continue to pay a premium for the security, compliance, and legal indemnification offered by Silicon Valley. For the industry at large, DeepSeek is a permanent wake-up call: the era of lazy scaling is over; the era of algorithmic efficiency has begun.